For an enriched reading experience you may visit the online version of this exegesis at https://do.meni.co/phd DOI 10.26180/5d4c35648c01a

This exegesis is submitted as partial fulfilment of the requirements for the degree of Doctor of Philosophy

Bridging the Virtual and Physical: from Screens to Costume

by

Domenico Mazza

Bachelor of Design (Visual Communication)(Hons), 2014

Faculty of Information Technology

Monash University, Caulfield, Melbourne

August 2019

DOI 10.26180/5d4c35648c01a

PDF DOI 10.26180/5d5a214fdf8aa

© Domenico Mazza (2019)

Under the Australian Copyright Act 1968, this exegesis must be used only under the normal conditions of scholarly fair dealing. In particular no results or conclusions should be extracted from it, nor should it be copied or closely paraphrased in whole or in part without the written consent of the author. Proper written acknowledgement should be made for any assistance obtained from this exegesis.

Any third-party content herein that has been reproduced without permission complies with the fair dealing exemption in the Australian Copyright Act 1968 that permits reproduction of such material for the purposes of criticism and review.

I certify that I have made all reasonable efforts to secure copyright permissions for third-party content included in this exegesis and have not knowingly added copyright content to my work without the owner's permission.

Abstract

There are ways humans act in and experience the physical world that are not reflected in the design of digital media. The term 'digital media' in this case encompasses our televisions, desktop computers, laptops, tablets, smartphones, smartwatches and the like. People who engage in activities through digital media have the ability today to collect and display vast amounts of information from across time and space, almost anywhere. Yet the virtual information presented through digital media accommodates neither the full freedom of intangible human imagination nor the full familiarity or immediacy of engaging with the physical world. This creates a divide between experiences through digital media and those through the surrounding physical world. This research explores ways to conceptualise digital experiences in the physical world.

The outcome of the practice-based design research allows a wide range of design practitioners and researchers, from visual and interaction design to human–computer interaction and textile design, to engage in the conceptualisation, prototyping and presentation of new digital media that addresses the divide between the physical and virtual, through what this research terms Computational Costume. The work enables designers and audiences alike to imagine and experience future technological capabilities without being limited to today's technology or needing to engage advanced visual effects or technical skills. Instead, this practice-based design research has developed and refined the use of lo-fi physical materials, from exhibition to film-making.

Computational Costume has emerged from four investigations into bridging the divide between physical and virtual practices in digital media. Investigations began with supporting people's spatial memory of interactions on screen-based devices through a visual overlay for interfaces known as the Memory Menu. A 99-participant study of the Memory Menu did not find a significant improvement to usability. This result, paired with knowledge obtained from a variety of experienced designers and communicators across art, design, marketing and human–computer interaction, encouraged a shift in focus beyond screen-based digital media. This shift in focus led to a review of and research into ubiquitous and tangible computing, which seeks to engage more of people's surroundings and physical world. This review revealed that a specific focus on whole-body interaction designs was required to break dependence on screen-based devices. This focus led to speculation on how probable technologies centred around augmented reality could enable whole-body, wearable virtual identities to ground interactions through digital media. Computational Costume was conceived in this research from this speculation.

The practice-based research presented in this exegesis and through exhibition contributes a conceptual rationale and accompanying practical approach for developing speculative virtual wearables and objects that ground interactions with digital media in the physical world using lo-fi physical materials. This contribution is embodied by the design of Computational Costume proposed in this research: a speculative design setting and scenarios based on imagined probable technologies centred around augmented reality. This work is explored through lo-fi physical materials activated via exhibition and film-making. This method of exploration enables designers and audiences alike to be liberated from the constraints of today's technology.

Declaration

This document contains no material which has been accepted for the award of any other degree or diploma in any university or other institution and, to the best of my knowledge and belief, the document contains no material previously published or written by another person, except where due reference is made in the text of the documentation.

Domenico Mazza, 10 April 2019

Contents

List of figures

ATELIER allowing people to shift between modes of digital representation. Images from Embodied Interaction – designing beyond the physical–digital divide [25] by Ehn and Linde (2004). Used with permission.

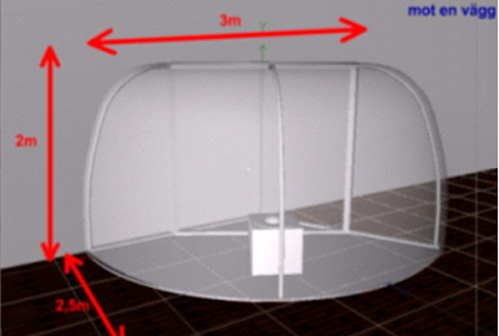

A rendition of a geographic information system (GIS) visualisation encountered in practice. Image is author's own, based upon a working design.

Screenshots of the Patina use-wear effect. Images from Patina: Dynamic Heatmaps for Visualizing Application Usage [66] by Matejka et al. (2013). Used with permission.

Screenshot of Data Mountain. Image from Data Mountain: Using Spatial Memory for Document Management [82] by Robertson et al. (1998). Used with permission.

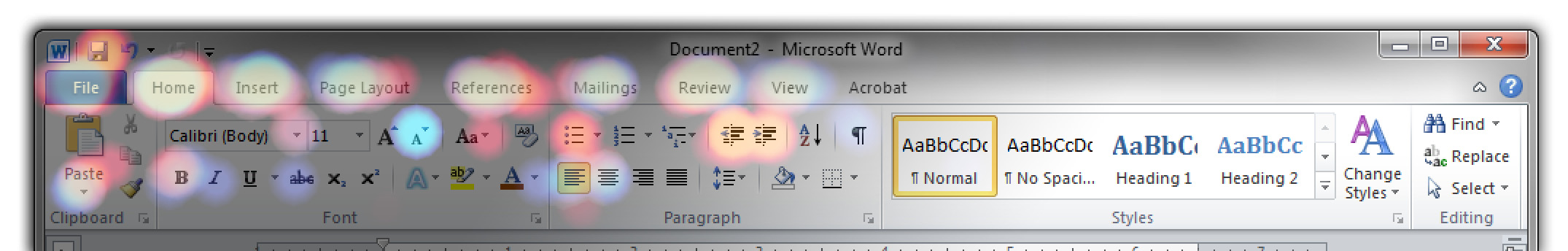

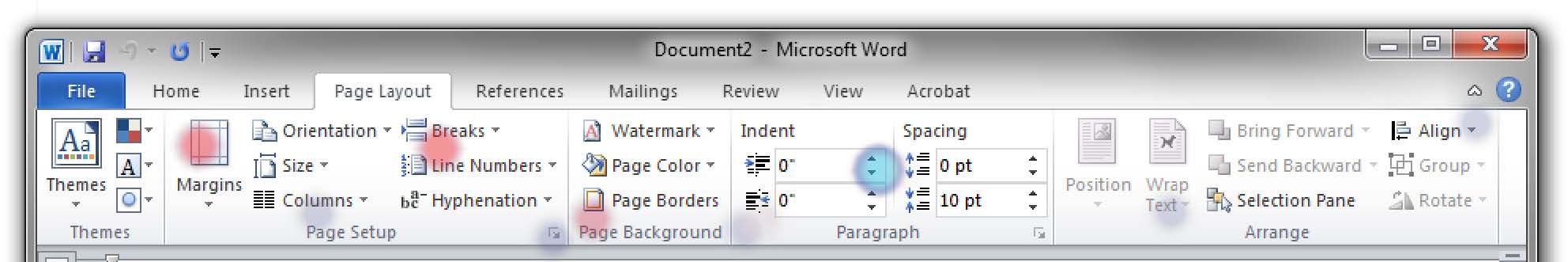

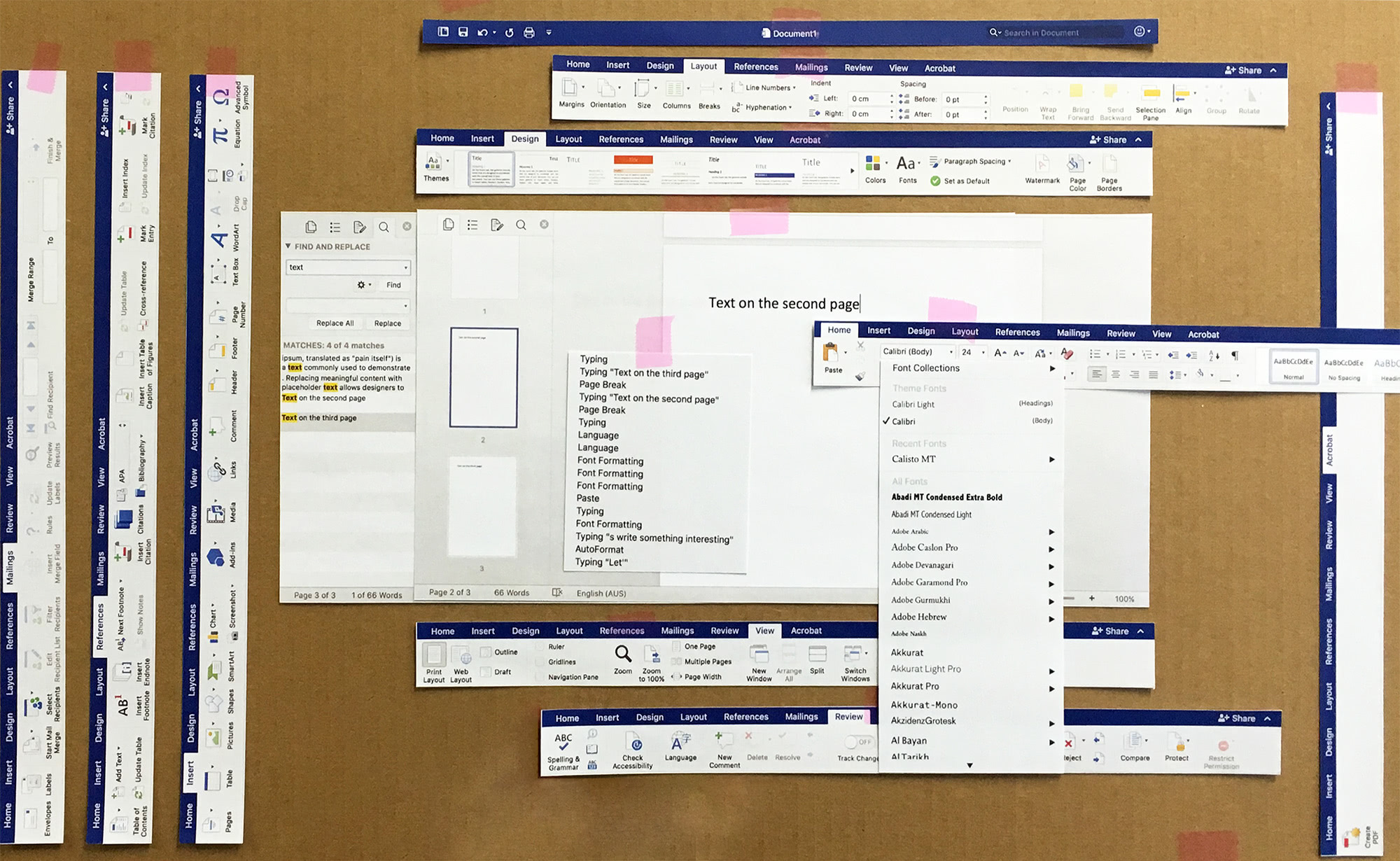

A commonly used word processor reconceptualised for a large surface accommodating people's wide range of practices and physical abilities. Image is author's own.

Different possible practices defined as areas on a reconfigured word processor design for a large surface. The practice of directly editing a document is highlighted. Arrows indicate connections between areas. Image is author's own.

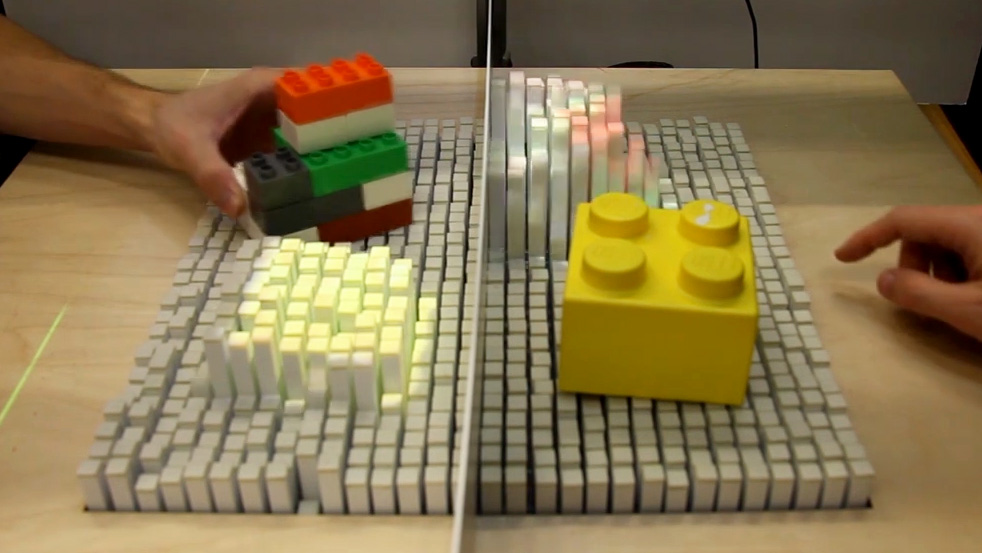

The Physical Telepresence system in use. Images from Physical Telepresence [63] video by Leithinger et al., MIT Media Lab, Tangible Media Group (2014).

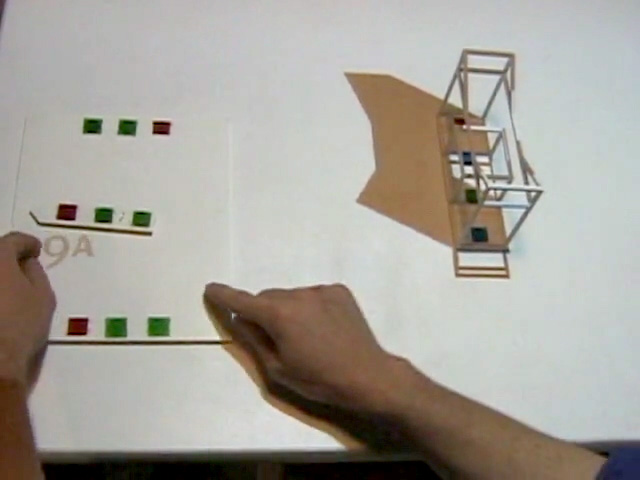

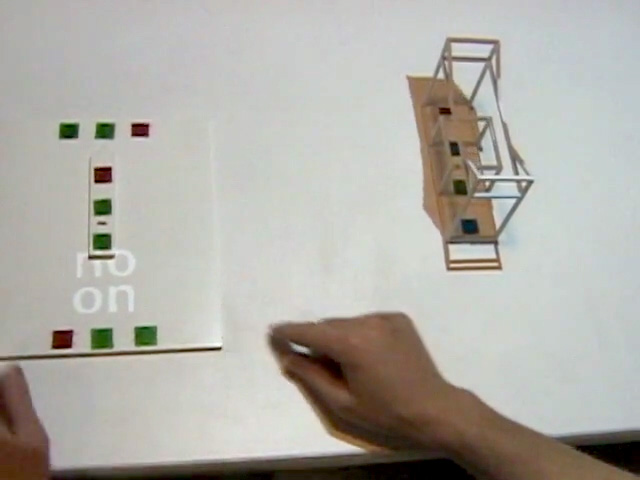

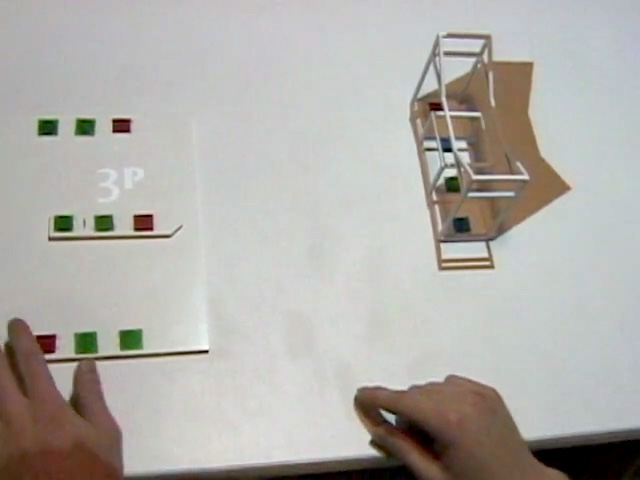

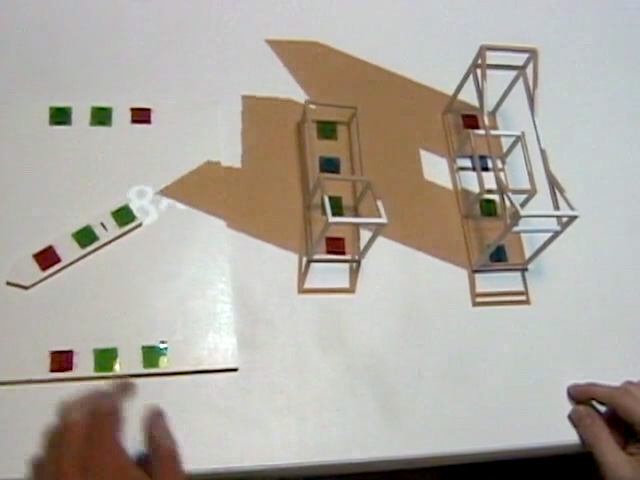

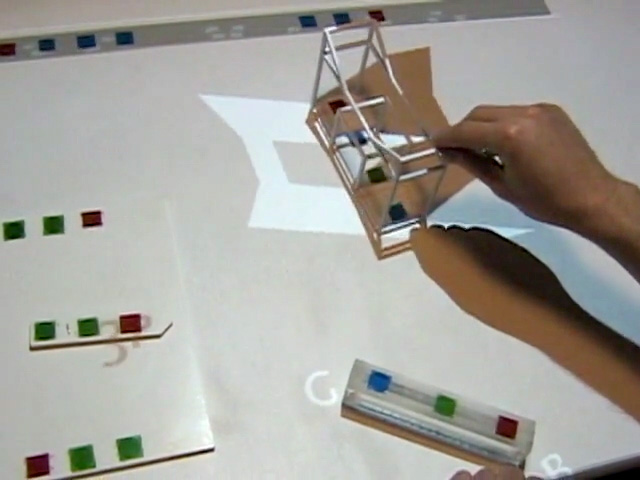

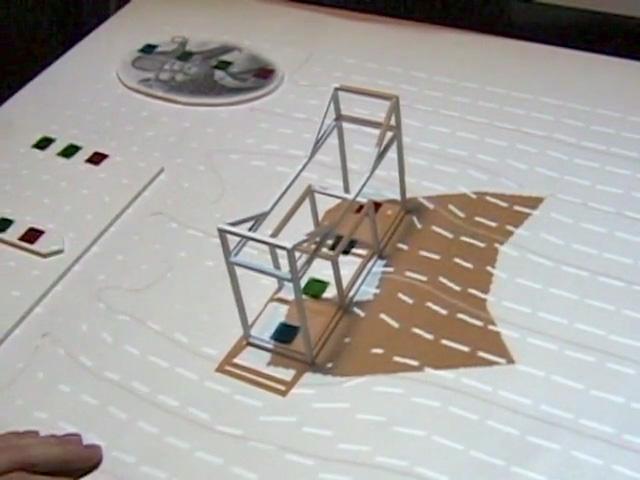

The Urp system in use. Video stills from Urp [98] video by Underkoffler and Ishii, MIT Media Lab, Tangible Media Group (1999).

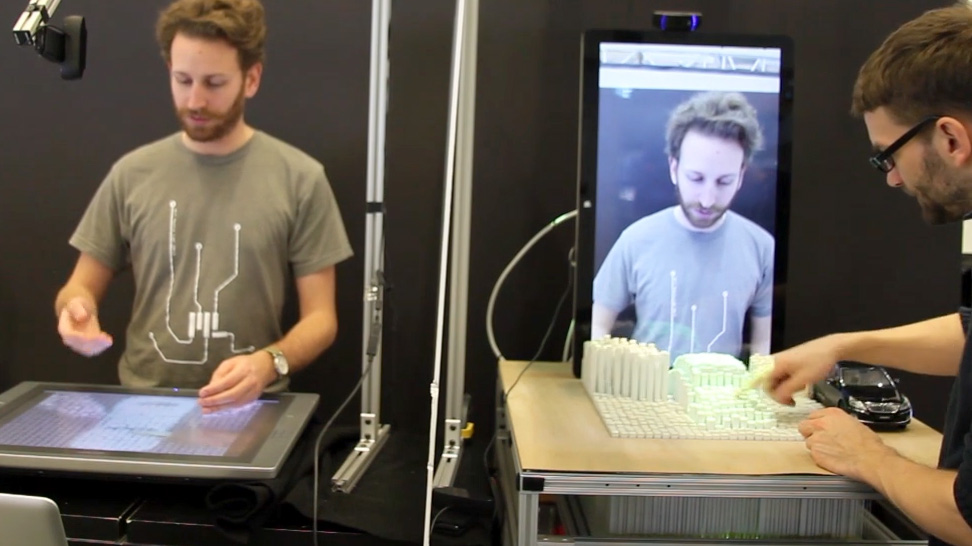

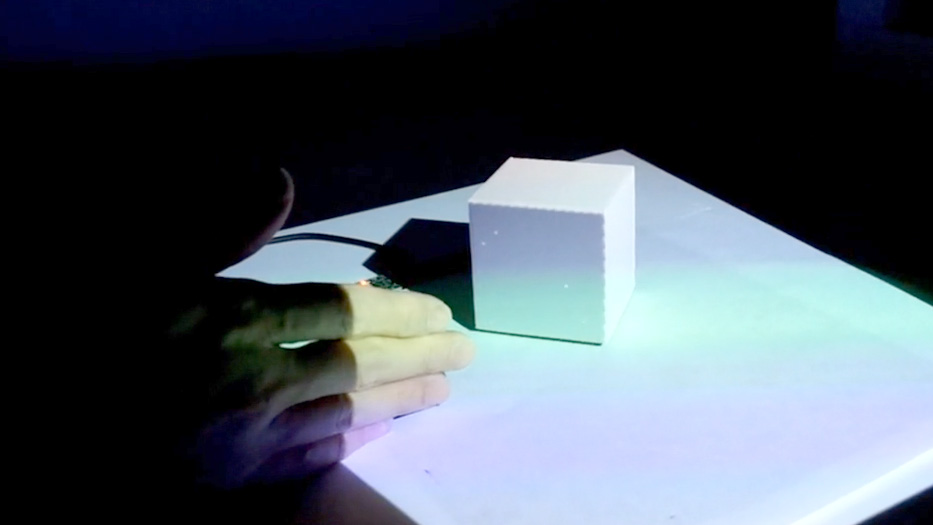

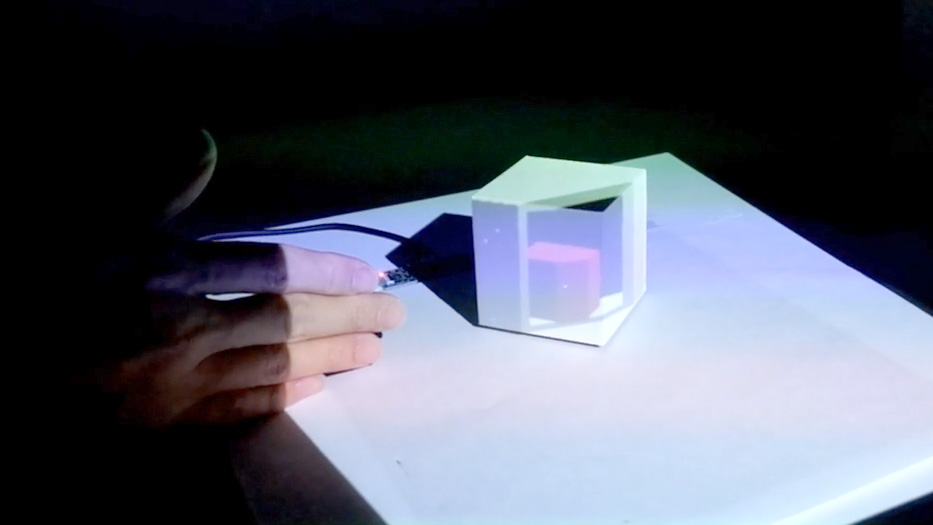

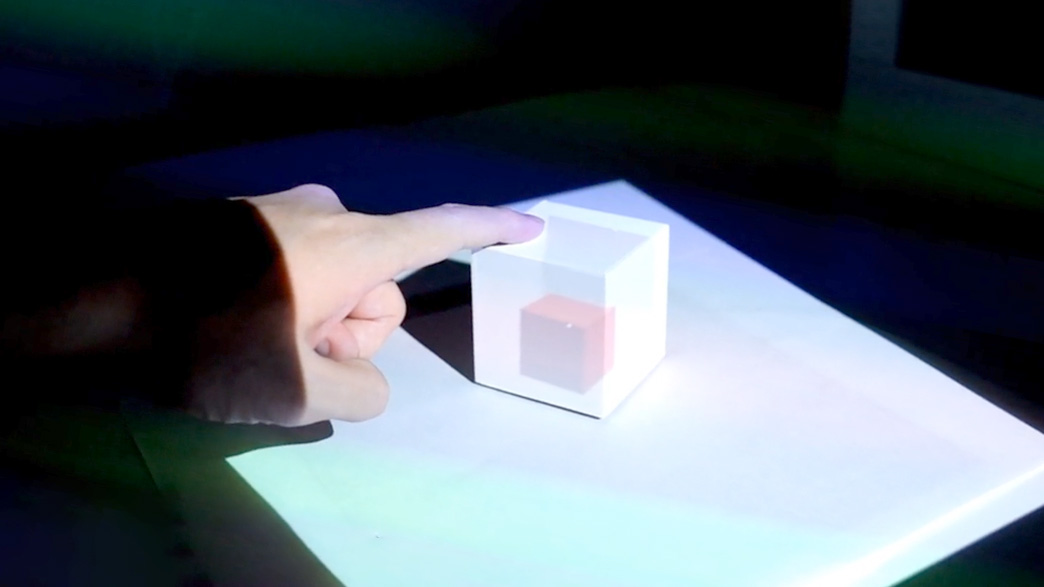

The inSide system in use. Images from inSide [95] video by Tang et al., MIT Media Lab, Tangible Media Group (2014).

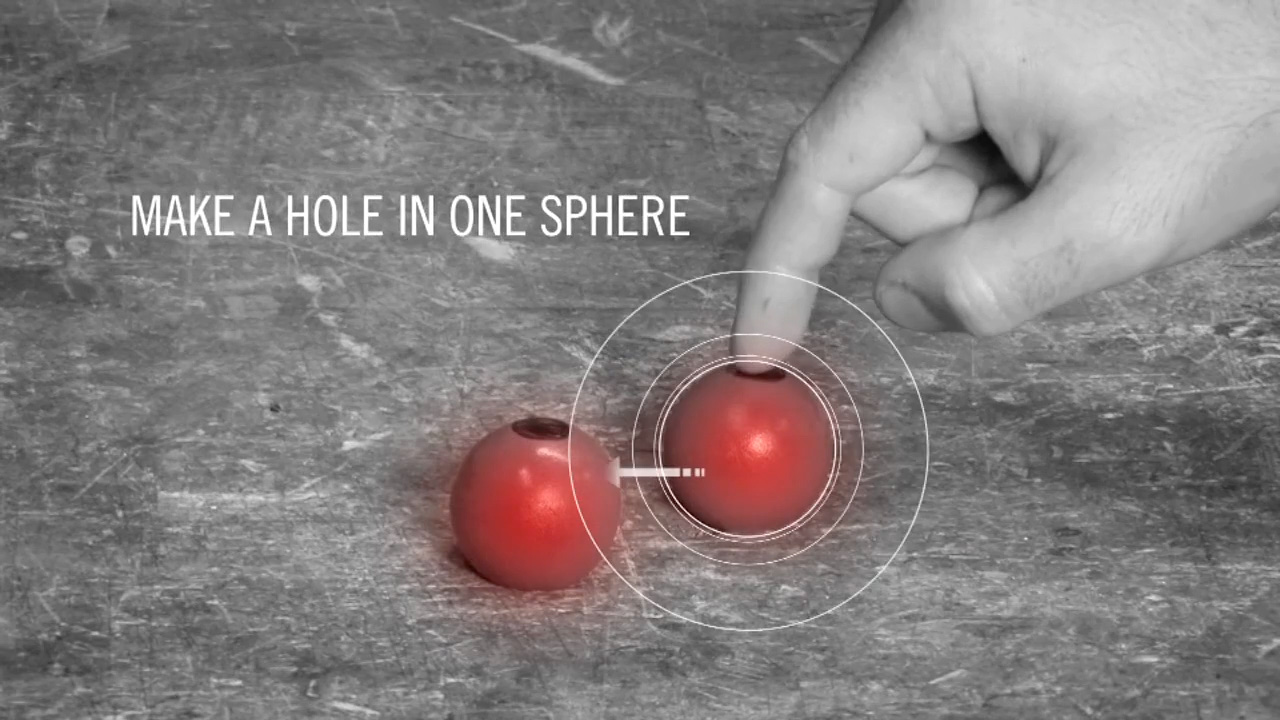

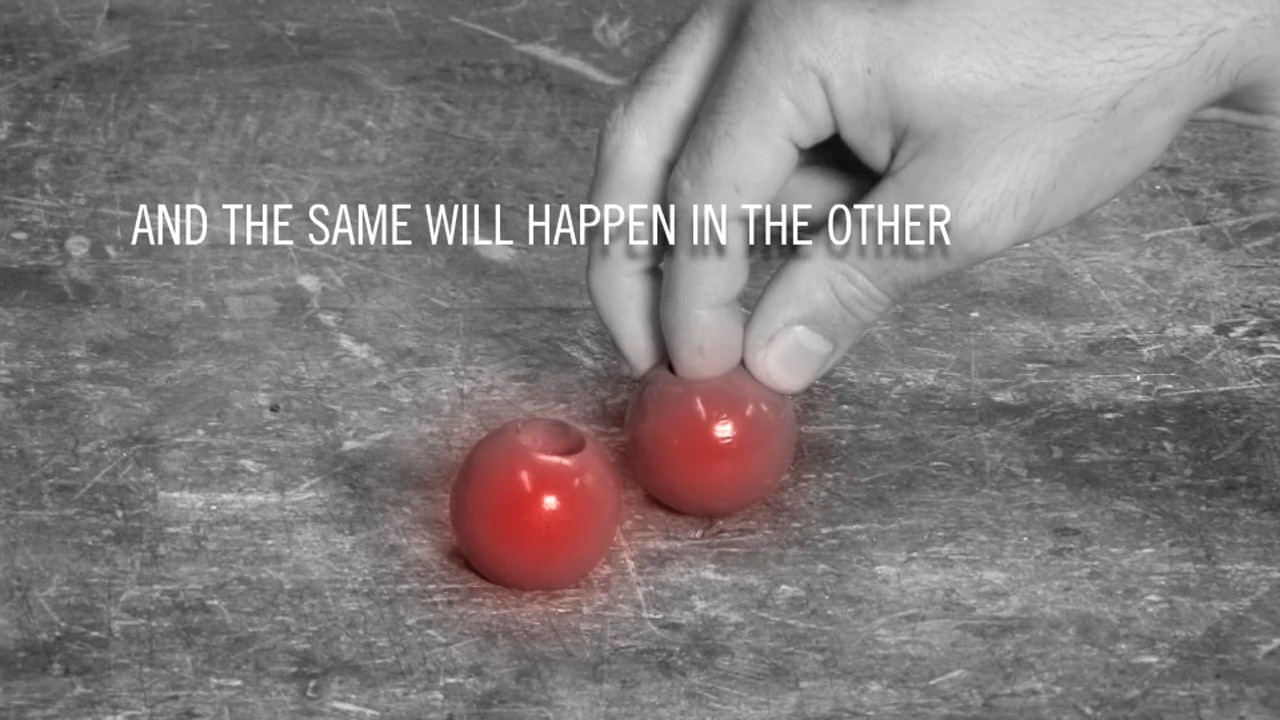

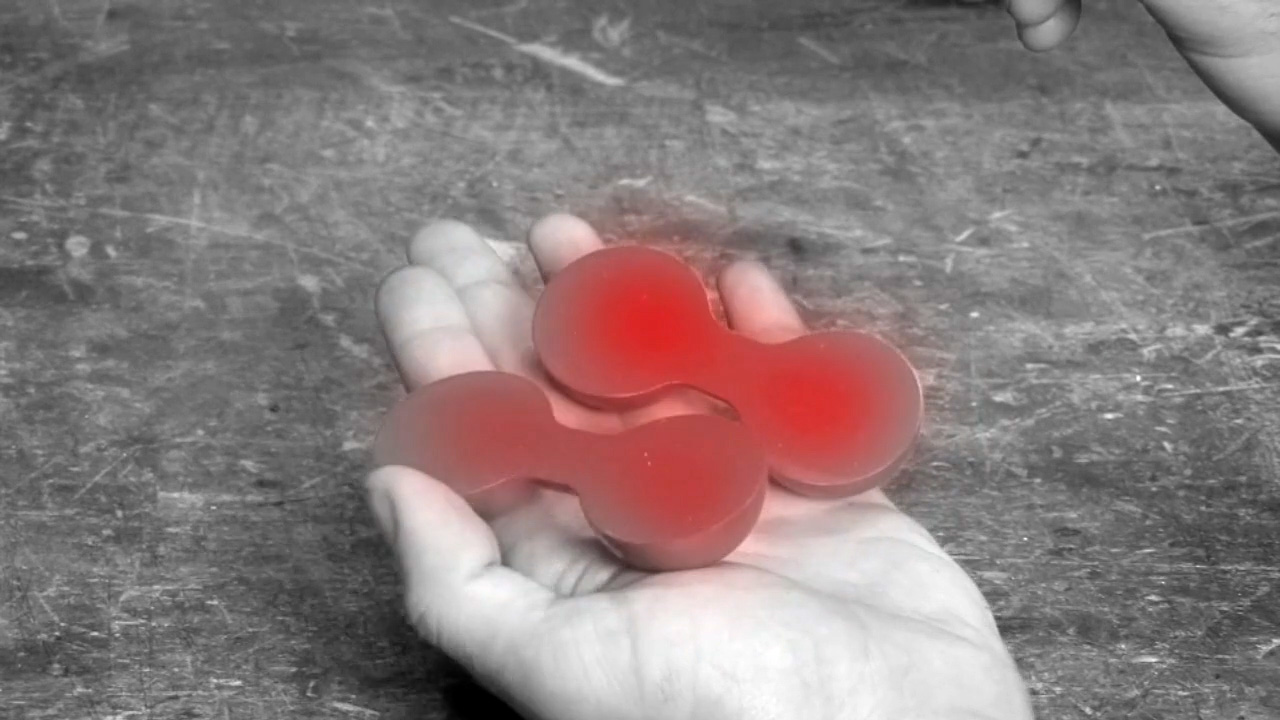

The Perfect Red speculative design. Video stills from Perfect Red [48, pp.47–48] video by Bonanni et al., MIT Media Lab, Tangible Media Group (2012).

The Pillow Talk system in use. Video stills from Pillow Talk [76] video by Joanna Montgomery (2010). Used with permission.

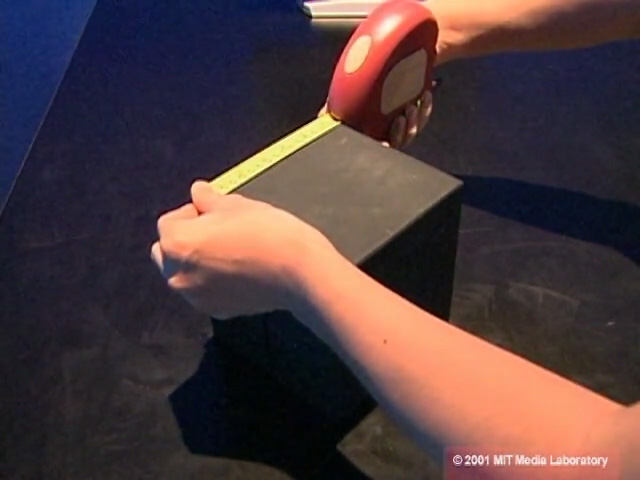

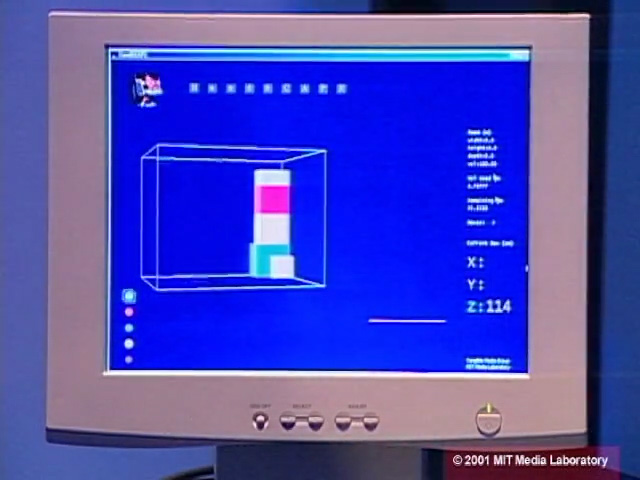

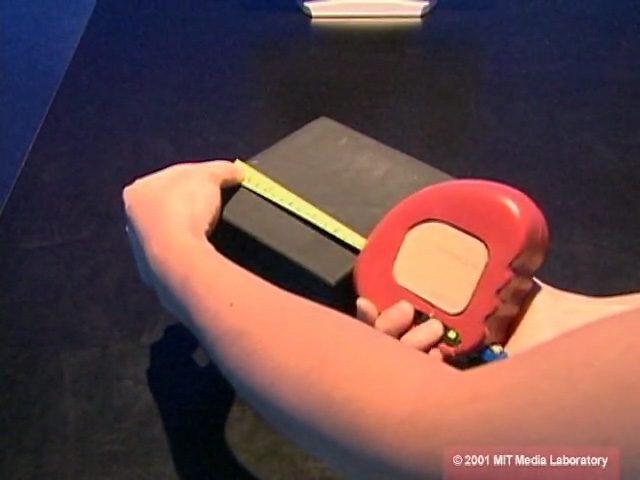

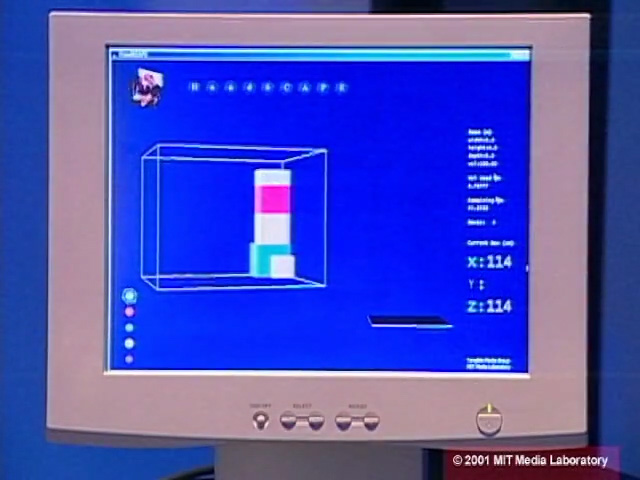

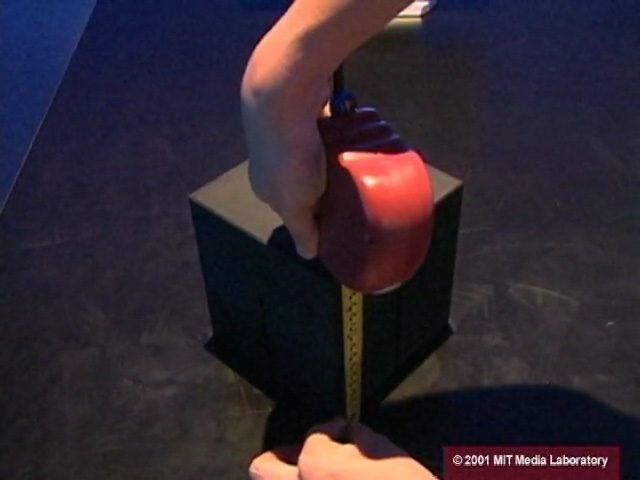

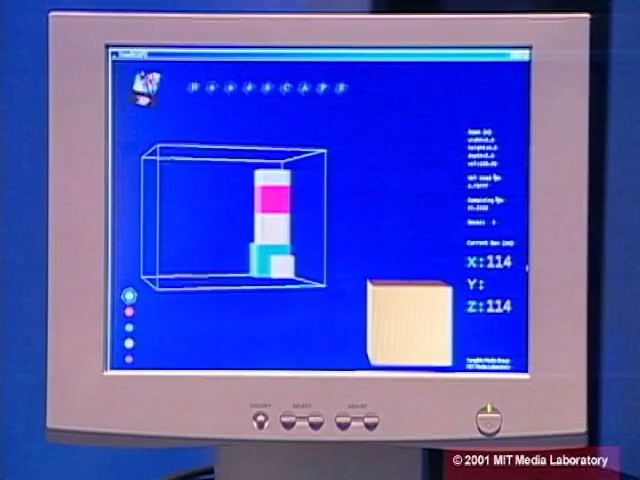

The HandSCAPE system in use. Video stills from HandSCAPE [62] video by Lee et al., MIT Media Lab, Tangible Media Group (2000).

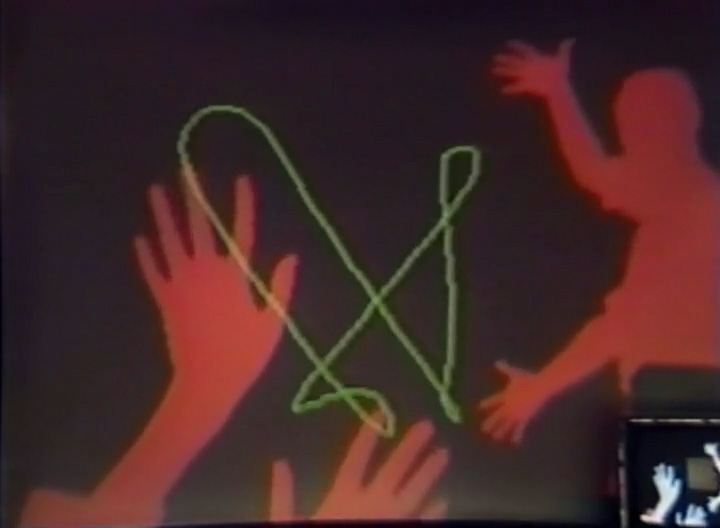

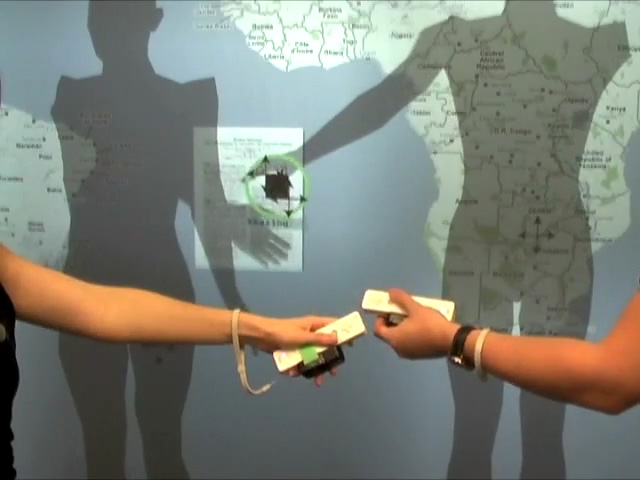

The VIDEOPLACE system in use. Video stills from Videoplace '88 [51] video by Myron Krueger et al. (1988).

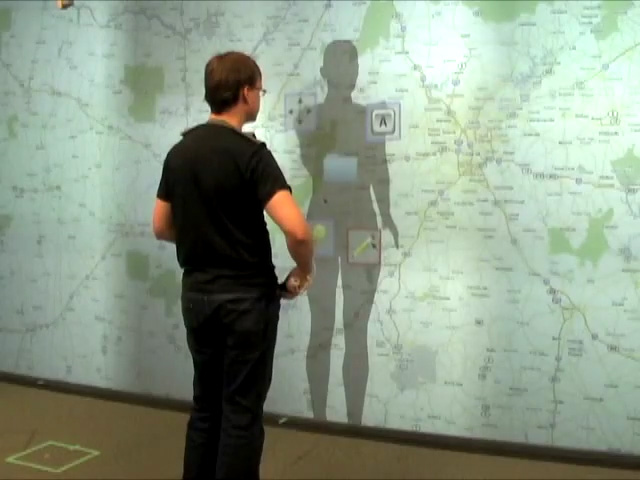

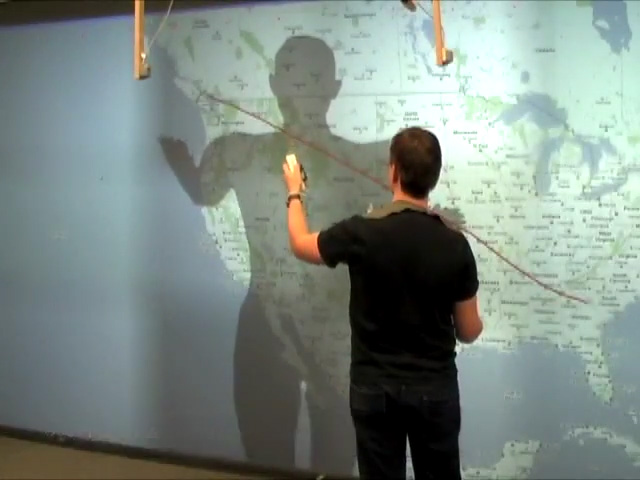

The Whole Body Large Wall Display Interface system in use. Video stills from Whole Body Large Wall Display Interaction [91] video by Shoemaker et al. (2010). Used with permission.

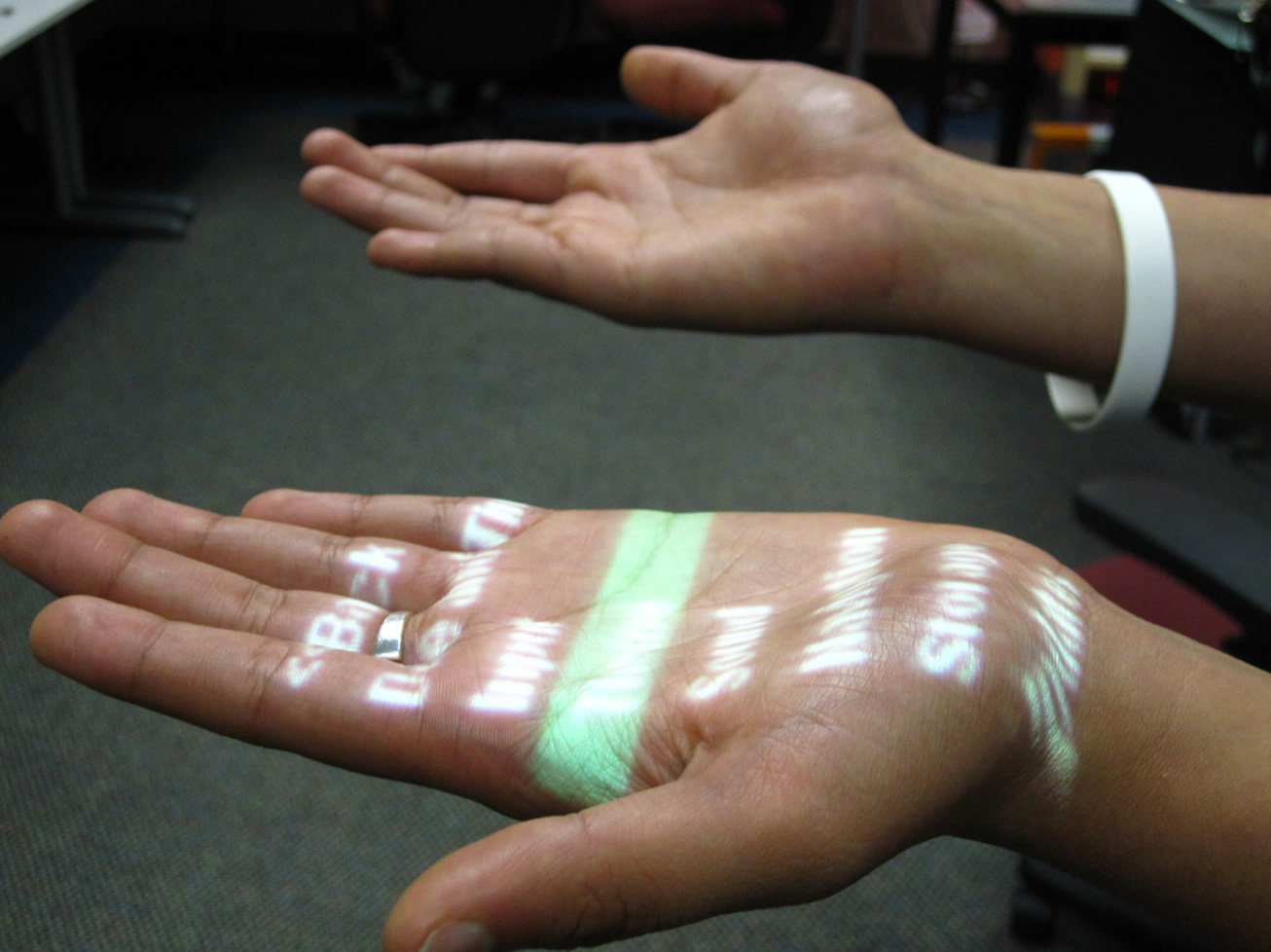

The Armura system in use. Images from On-Body Interaction: Armed and Dangerous [42] by Harrison et al. (2012). Used with permission.

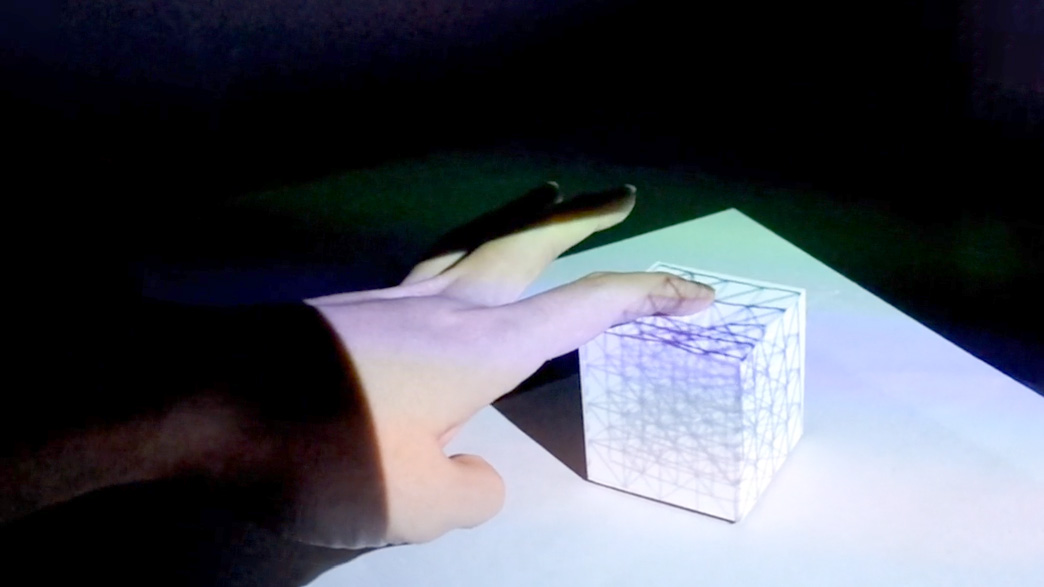

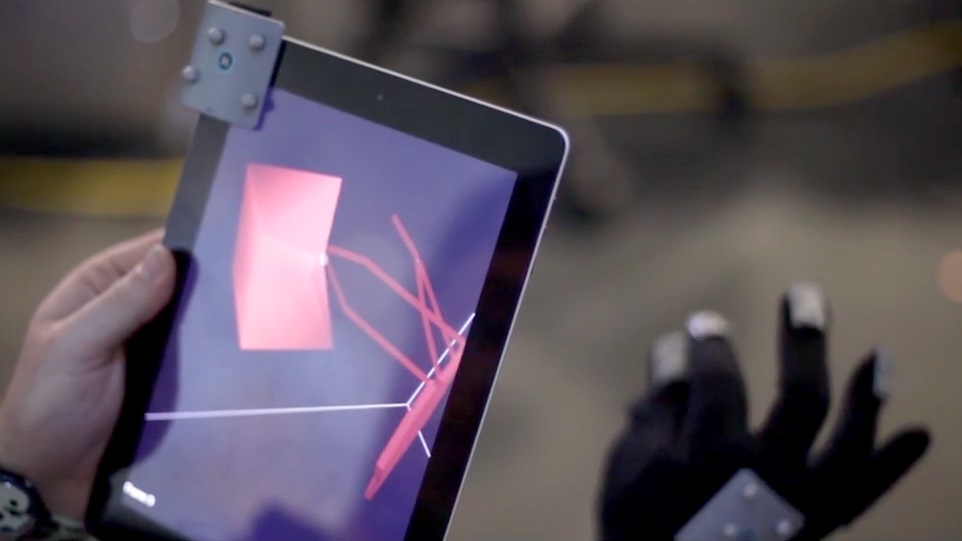

The T(ether) system in use. Images from T(ether) – Spatially-Aware Handhelds, Gestures and Proprioception for Multi-User 3D Modeling and Animation [53] video by Lakatos et al., MIT Media Lab, Tangible Media Group (2014).

The Project North Star system in use. Video stills from Project North Star: Exploring Augmented Reality [59] and Project North Star: Desk UI [58] videos by Leap Motion, Inc. (2018). Used with permission.

The Wall++ system in use. Video stills from Wall++: Room-Scale Interactive and Context-Aware Sensing [104] video by Zhang et al. (2018).

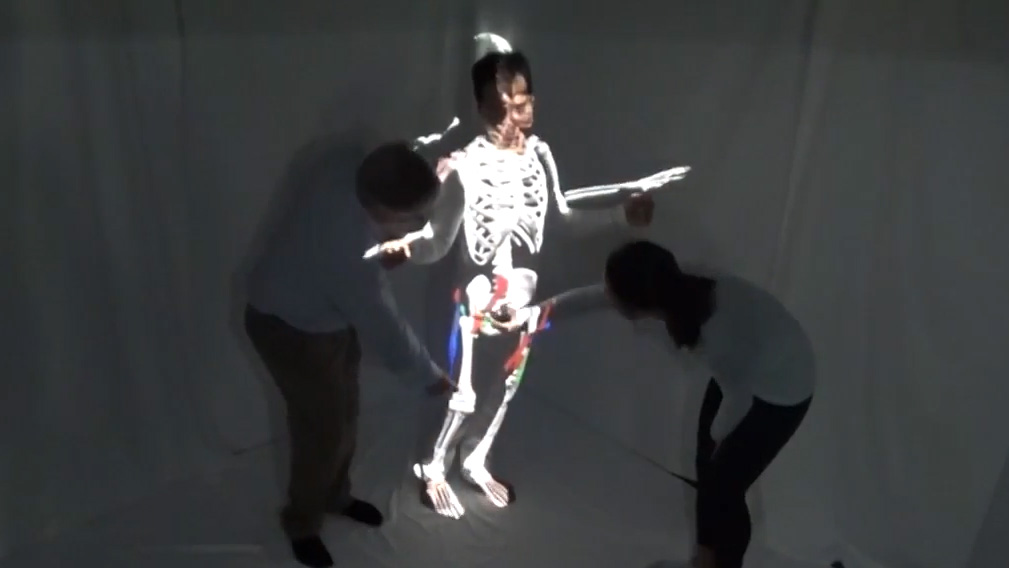

The Augmented Studio system in use. Video stills from Augmented Studio: Projection Mapping on Moving Body for Physiotherapy Education [45] video by Hoang et al., Microsoft Research Centre for SocialNUI (2017).

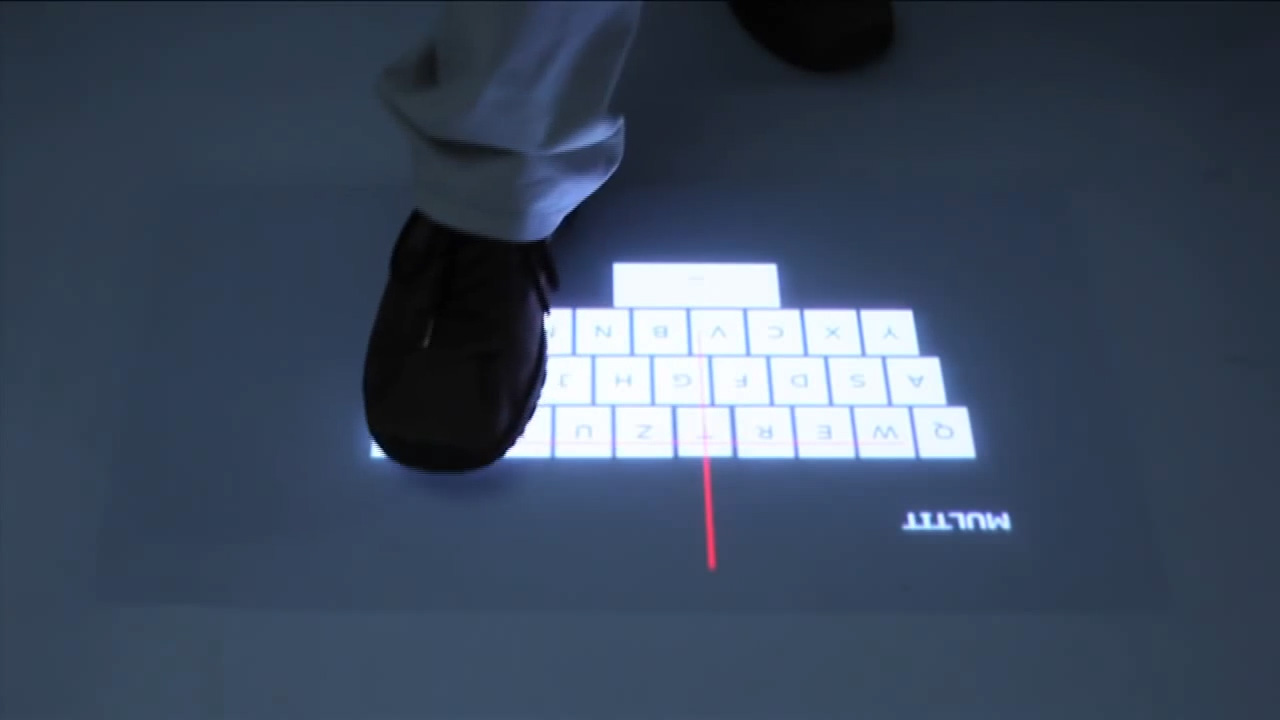

The Multitoe system in use. Video stills from Multitoe interaction: bringing multi-touch to interactive floors [5] video by Augsten et al., Hasso Plattner Institute (2010). Used with permission.

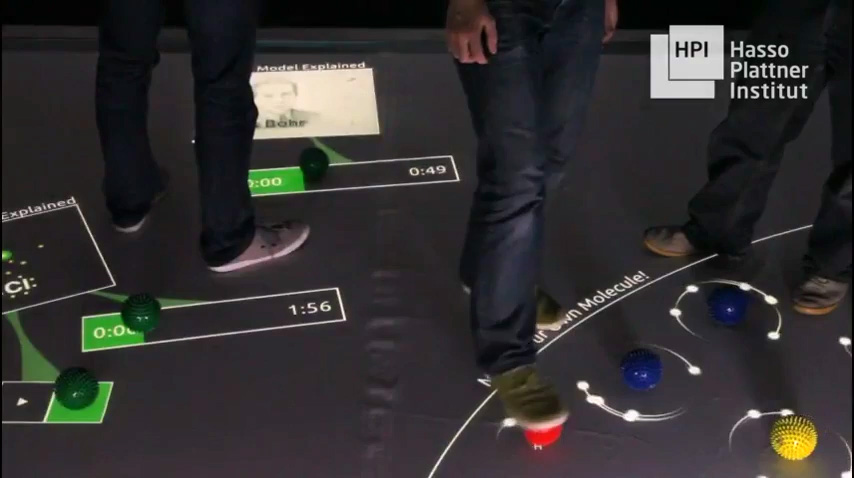

The Kickables system in use. Video still from Kickables: Tangibles for Feet [90] video by Schmidt et al., Hasso Plattner Institute et al. (2014). Used with permission.

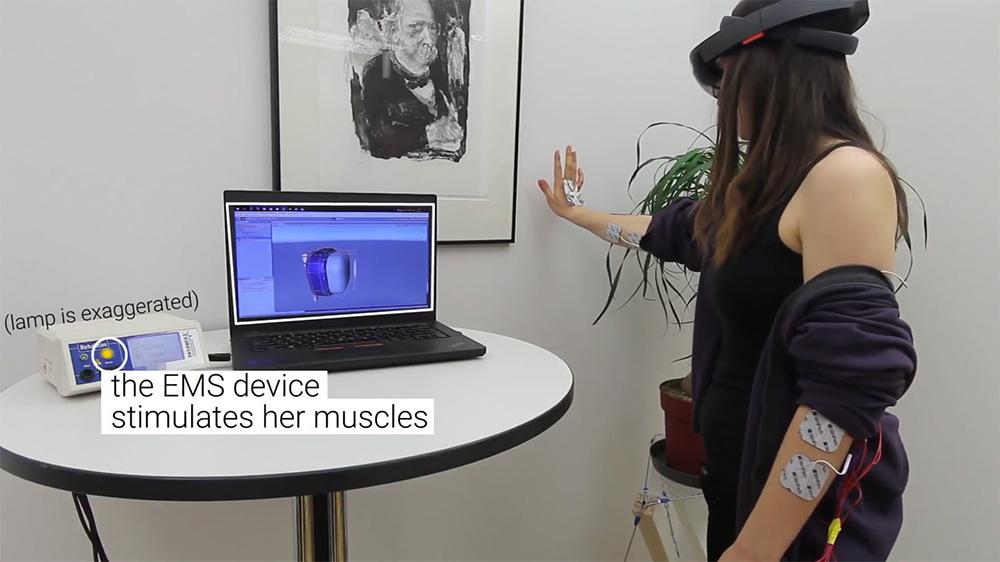

The electrical muscle stimulation force feedback system by Lopes et al. (2018) in use. Video stills from Adding Force Feedback to Mixed Reality Experiences and Games using Electrical Muscle Stimulation [64] video by Lopes et al., Hasso Plattner Institute (2018). Used with permission.

Mirrorworlds Concept: The Architect. Video still from Mirrorworlds Concept: The Architect [57] by Leap Motion, Inc. (2018). Used with permission.

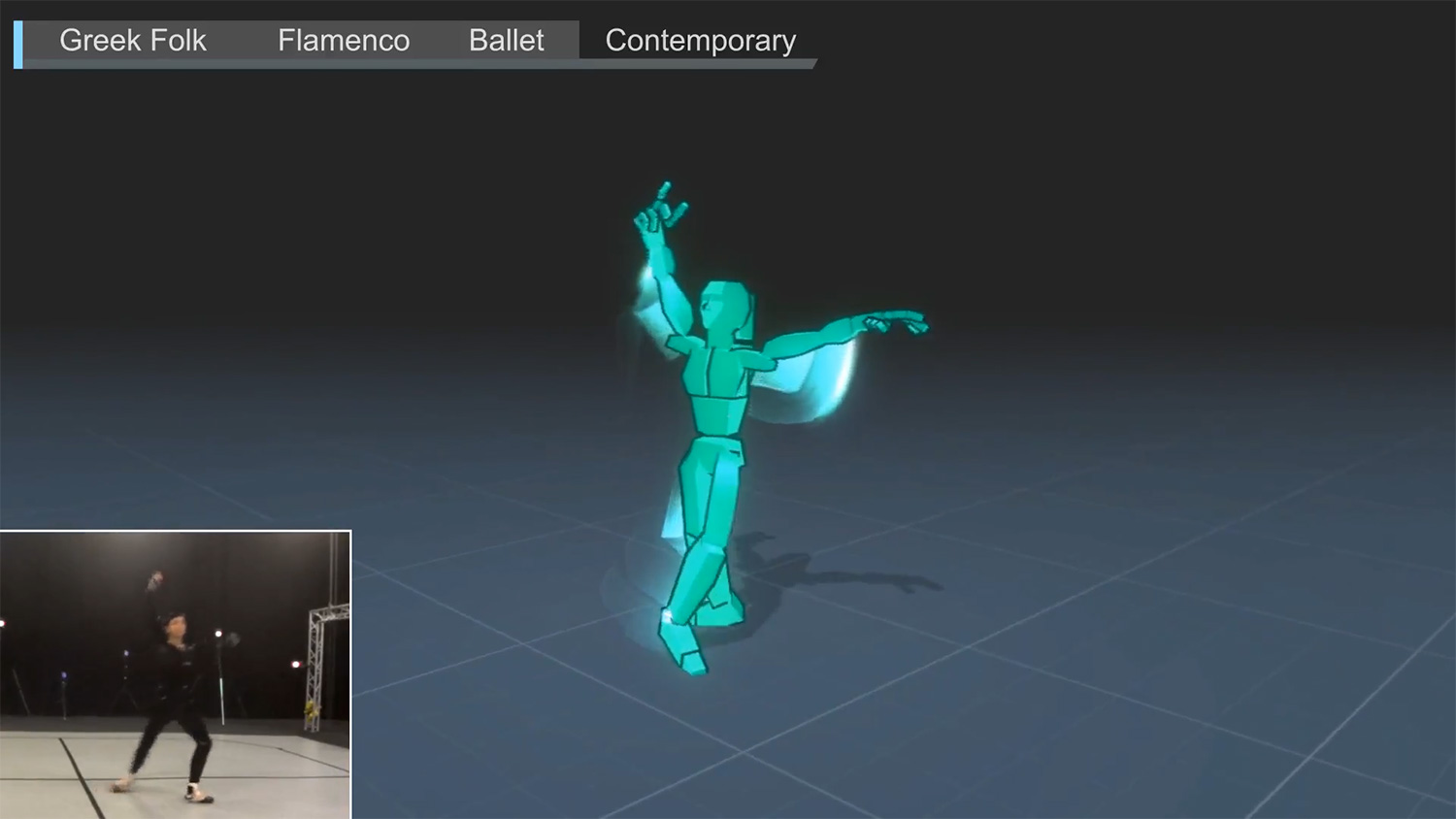

The Choreomorphy system in use. Video still from Choreomorphy [81] video by El Raheb et al. (2018). Used with permission.

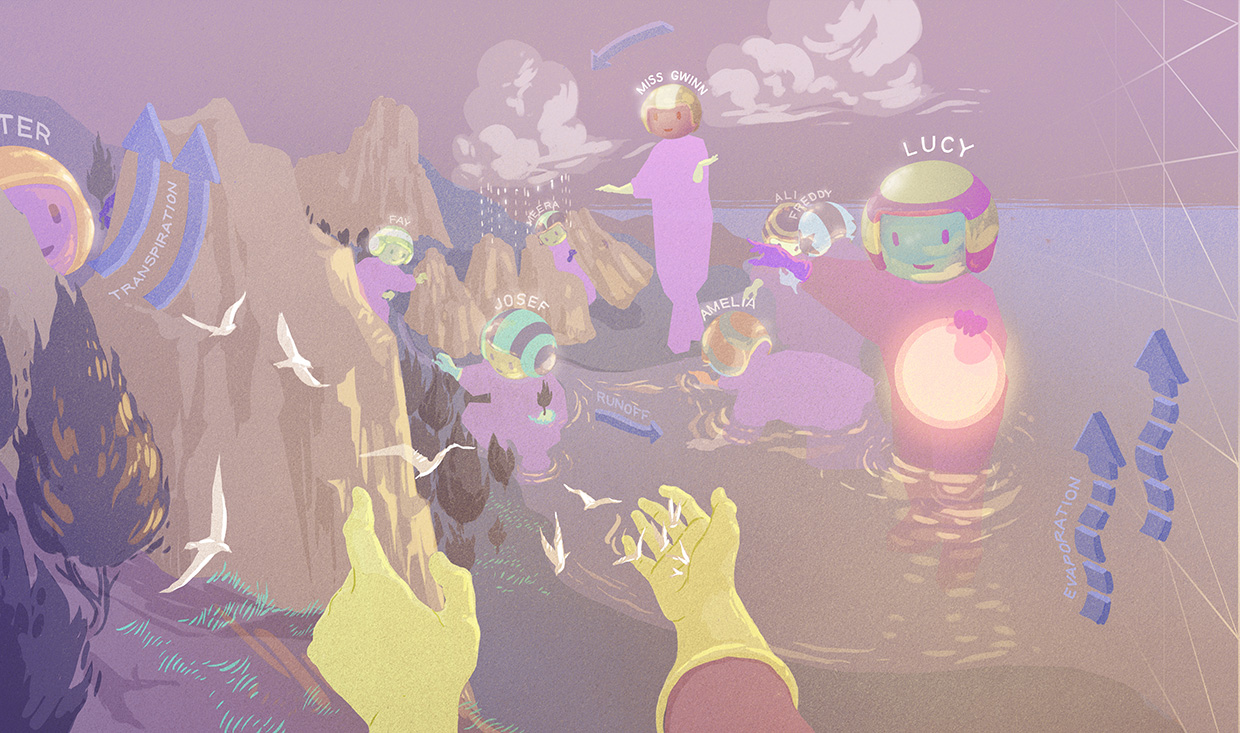

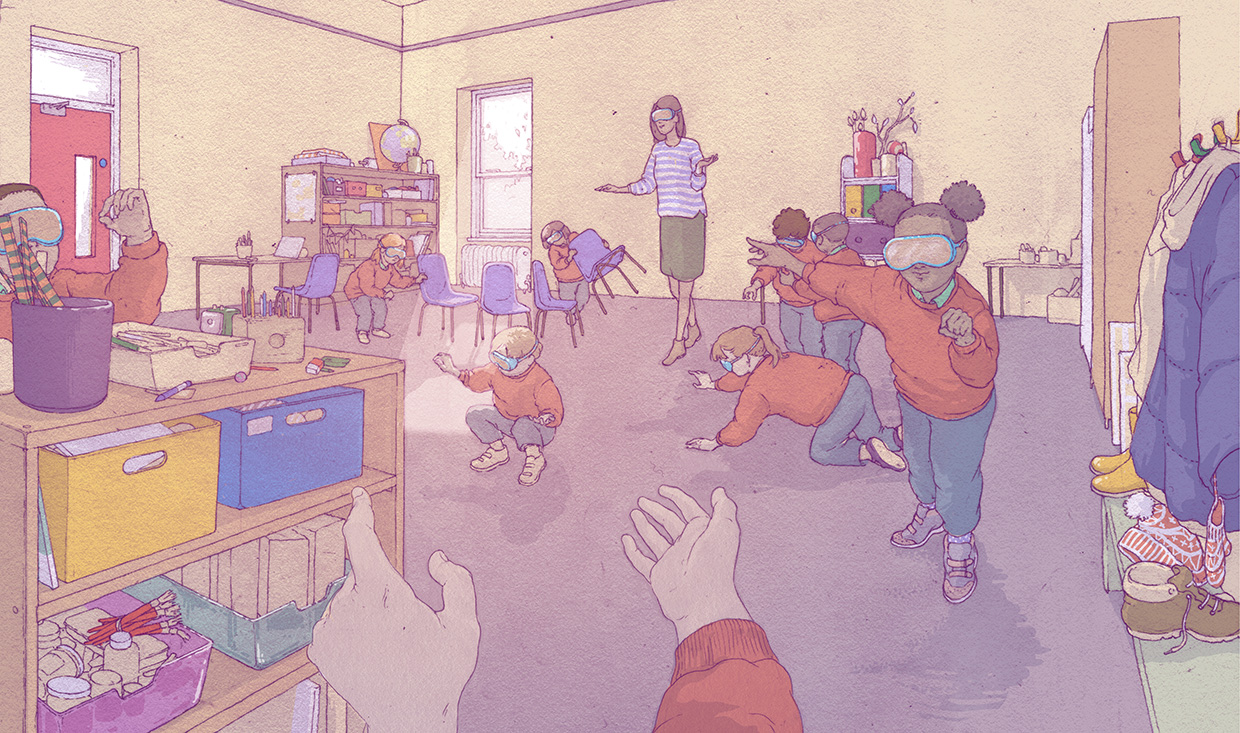

A Mirrorworld in a classroom, for exploring the water cycle of an environmental landscape at room scale. Shown is the augmented reality setting (above) and the corresponding physical setting (below). Images from Leap Motion, Inc. [68] by Keiichi Matsuda, illustrations by Anna Mill (2018). Used with permission.

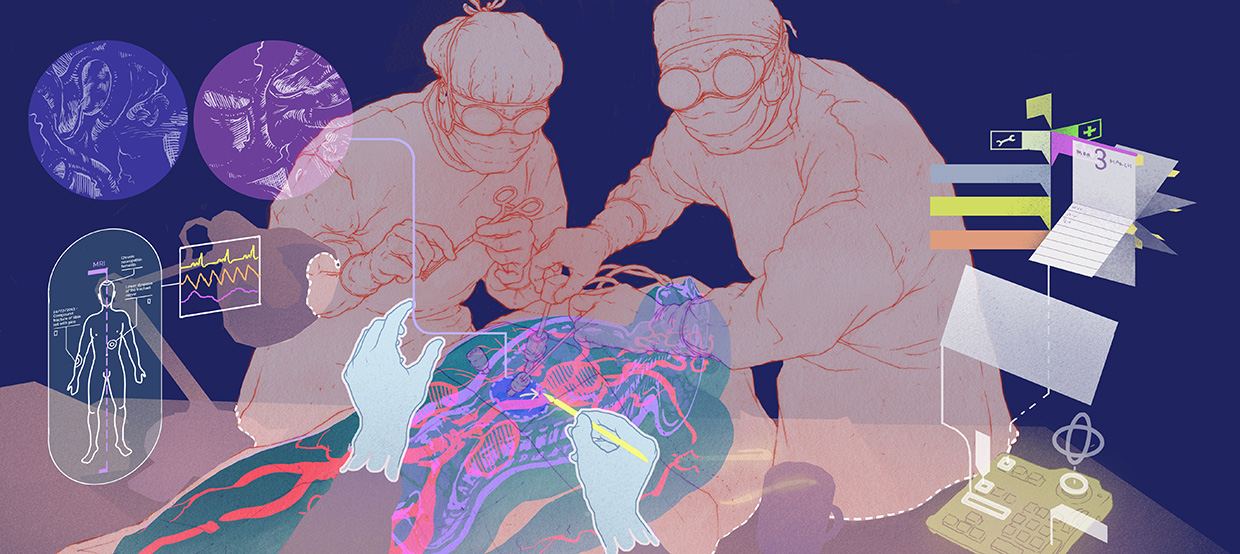

A Mirrorworld in an office collocated with a medical operating theatre. Shown is the augmented reality setting (above) and the corresponding physical setting (below). Images from Leap Motion, Inc. [68] by Keiichi Matsuda, illustrations by Anna Mill (2018). Used with permission.

A virtually concealed thief in a speculative augmented reality. Video still from 'Hyper-Reality' [67] by Keiichi Matsuda (2016). Used with permission.

Quotes from Apple Inc. patent Sports monitoring system for headphones, earbuds and/or headsets [80] by Prest et al. (2014). Quotes extracted from patent by Apple Inc.

A rendition of Computational Costume [6] in practice with its hardware design highlighted in blue. Illustration by Janelle Barone, made in collaboration with the author.

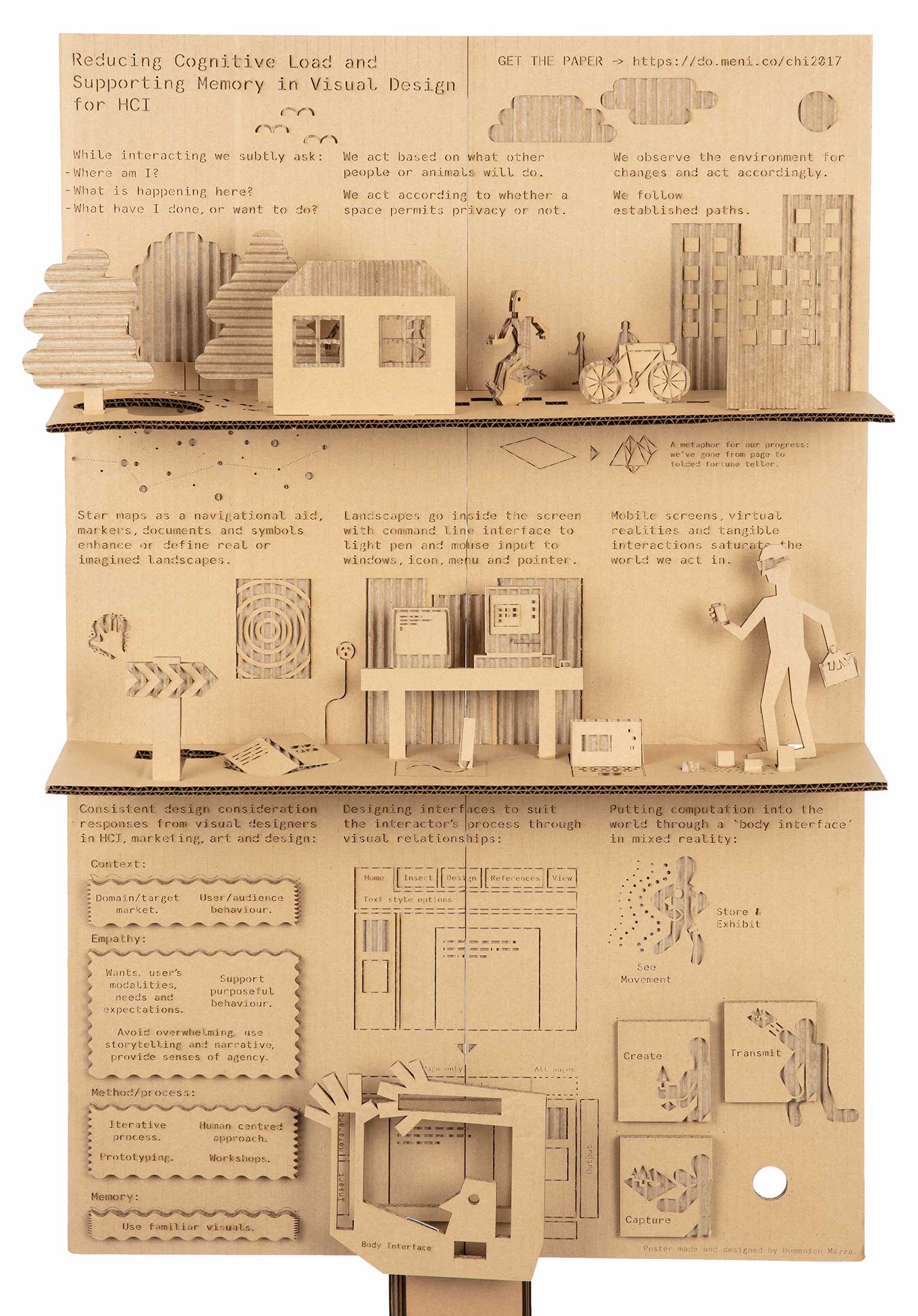

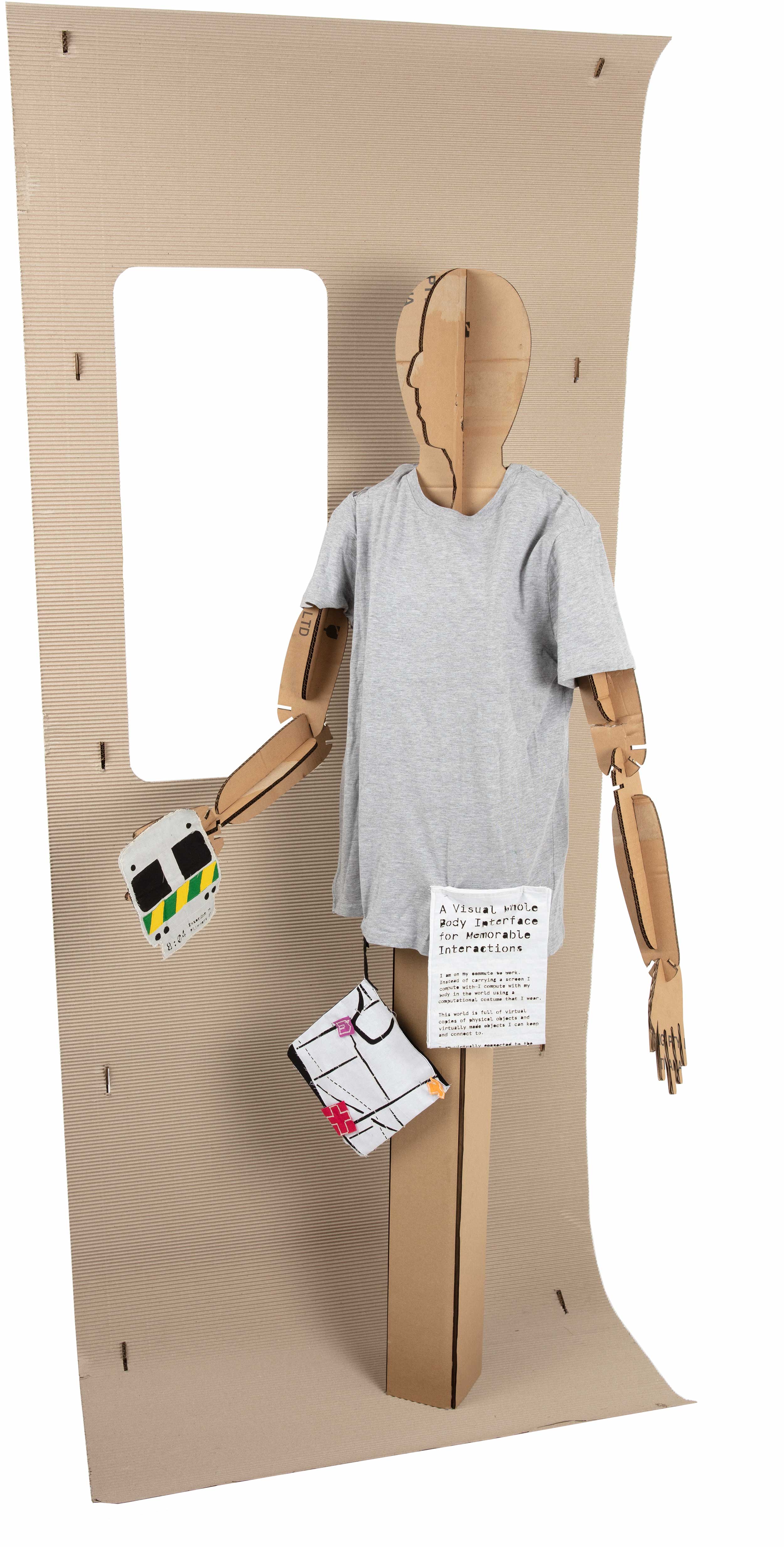

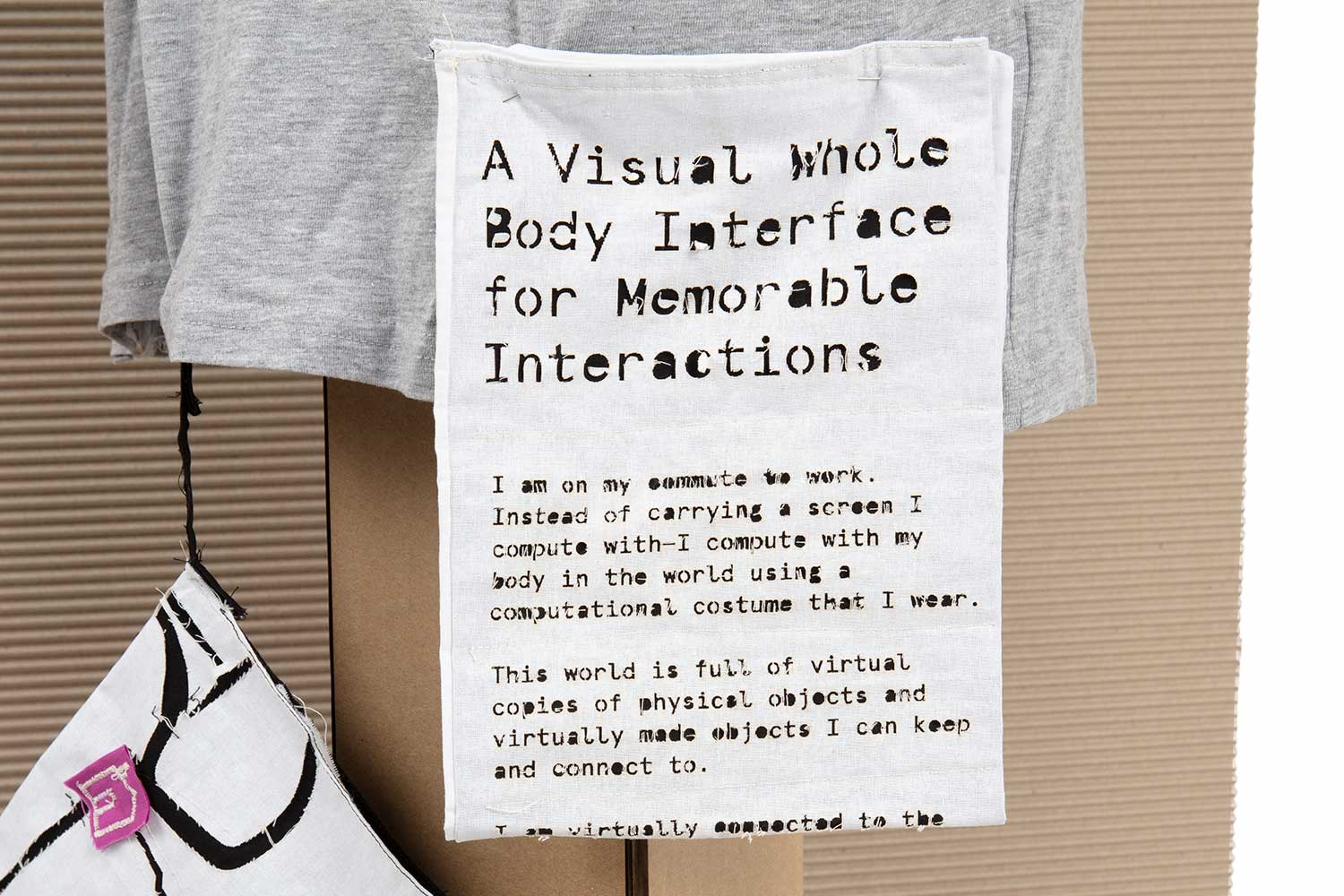

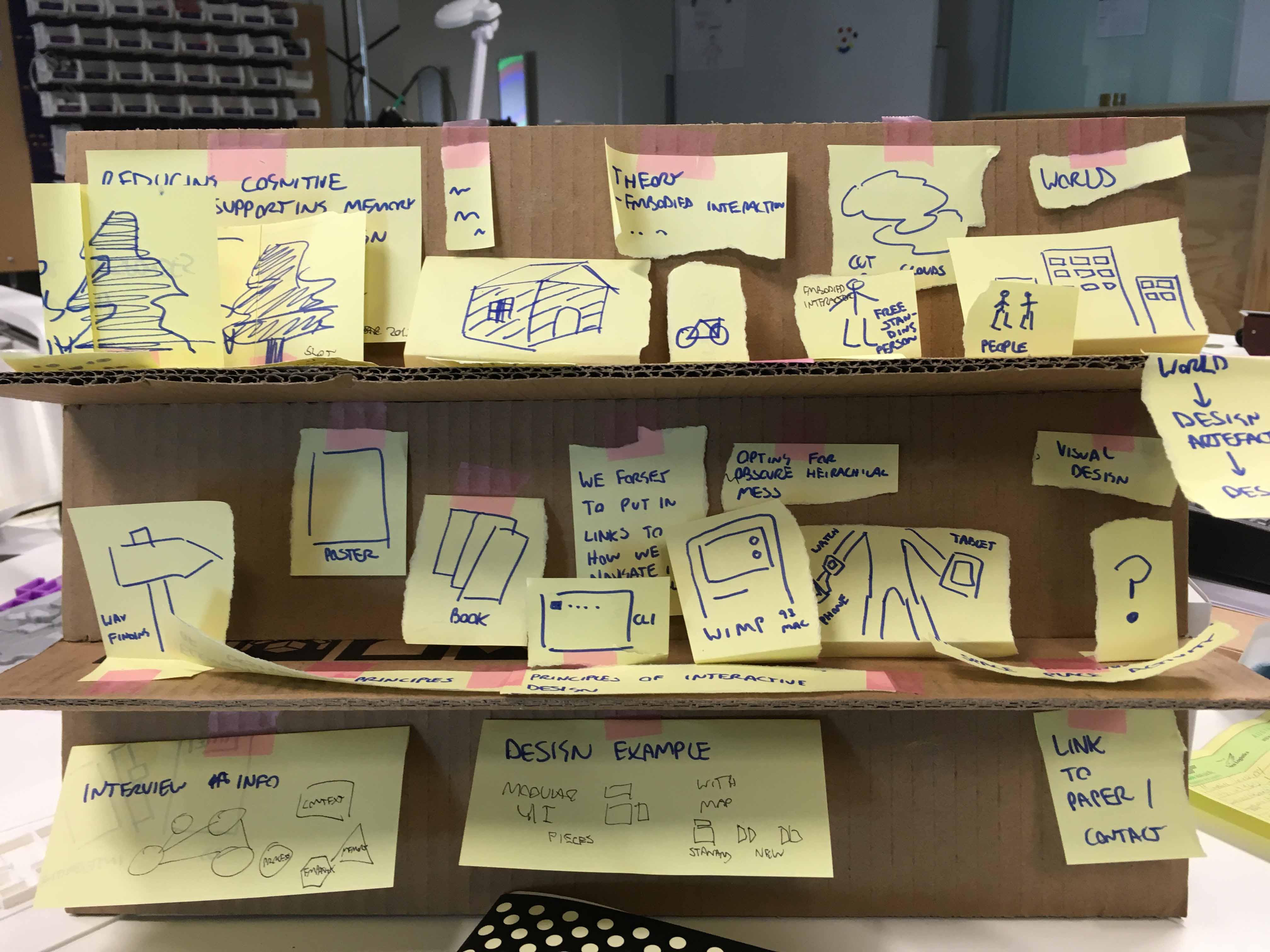

Cardboard poster made for the CHI 2017 (Conference on Human Factors in Computing Systems) Student Research Competition, Denver, Colorado, USA, May 2017. Photography by Jon McCormack.

Hand assembly of the cardboard poster. Images are author's own.

Hand and forearm interface mock-up for crafting the cardboard poster. Photography by Jon McCormack.

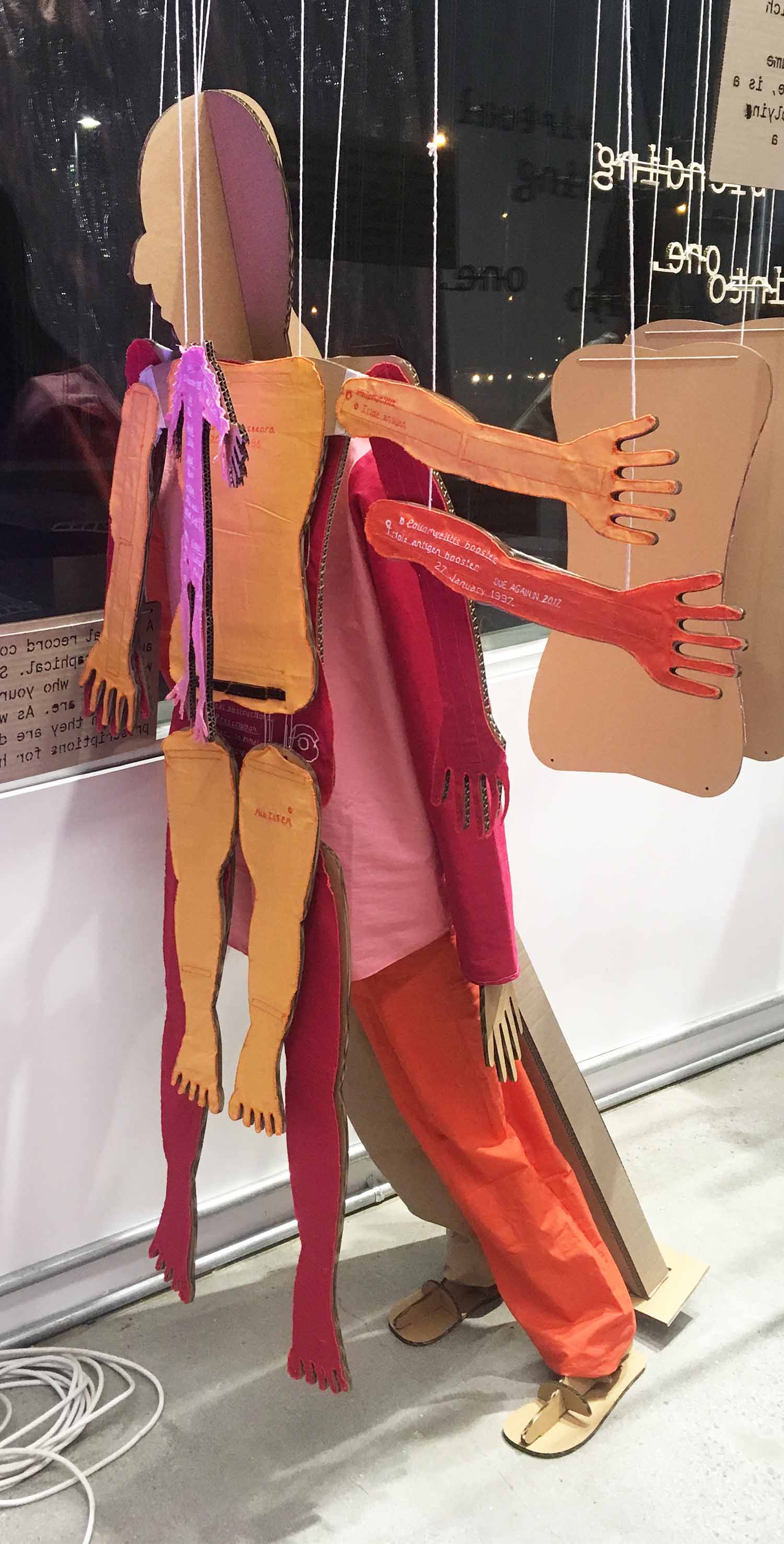

Computational Costume v0 mannequin front and back. Shown at No Vacancy Gallery QV in Melbourne, Victoria, Australia as part of the Melbourne FashionTech collective's showcase during White Night, 17 February 2018. Photography by Jon McCormack.

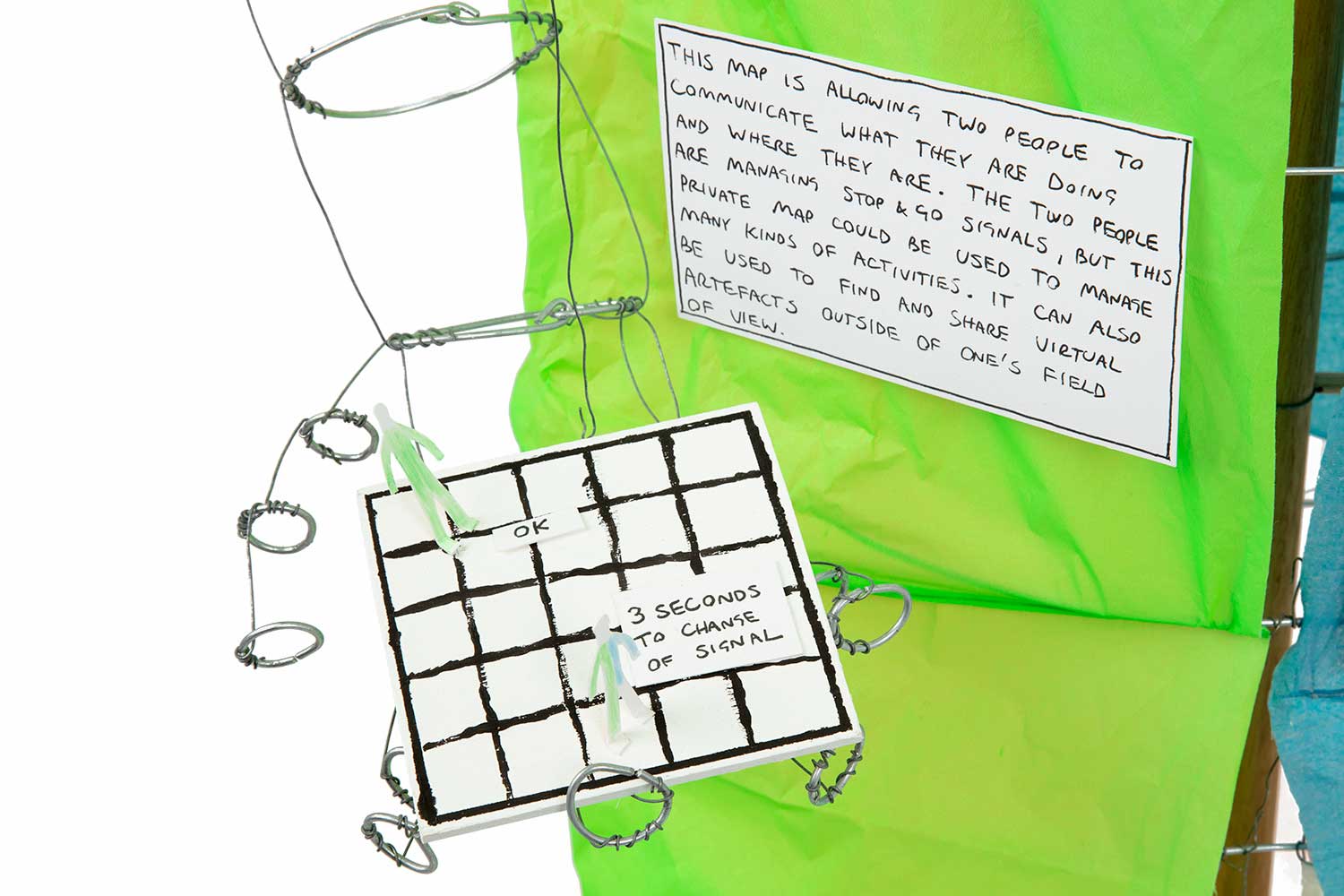

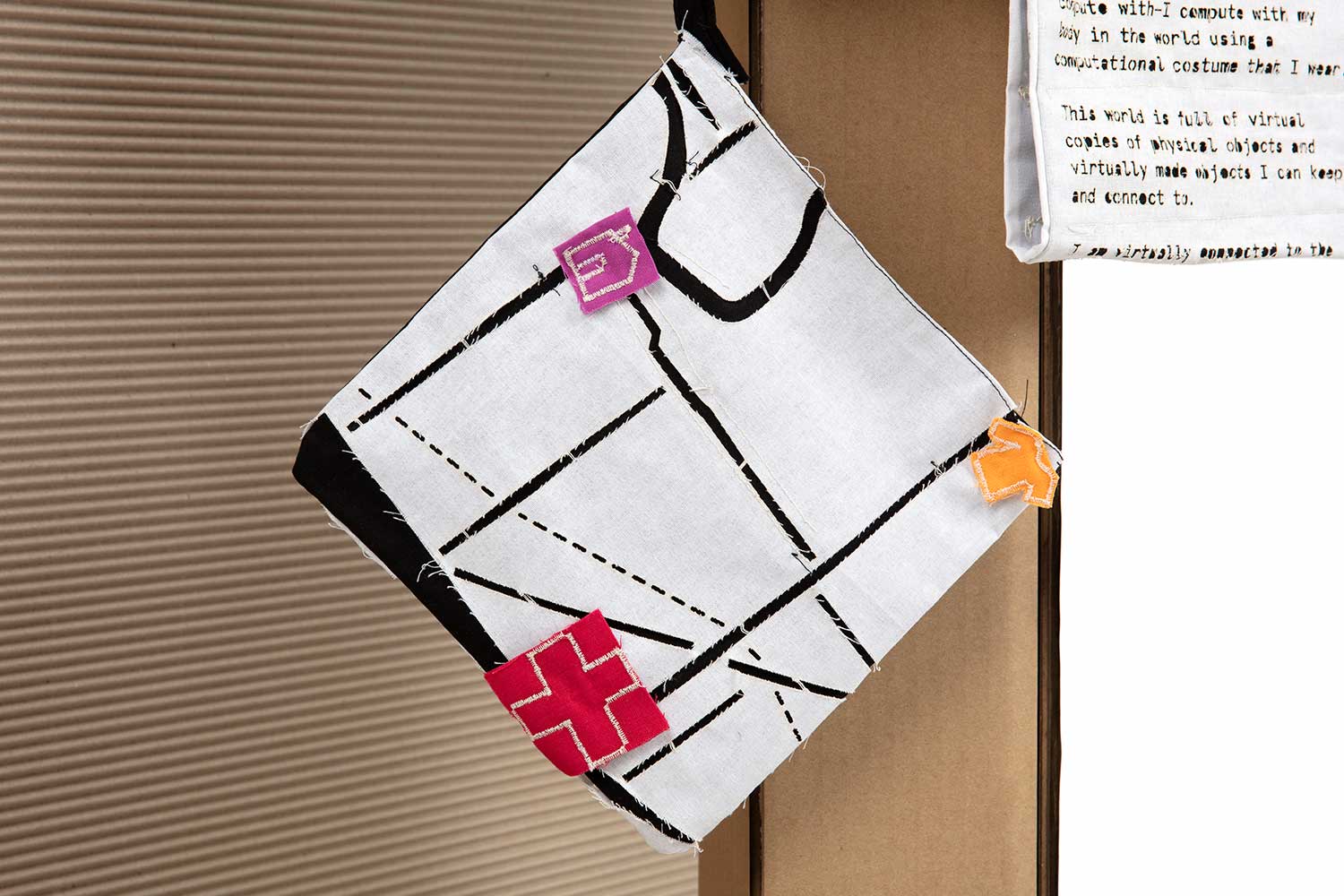

Map tool in Computational Costume v0. Photography by Jon McCormack.

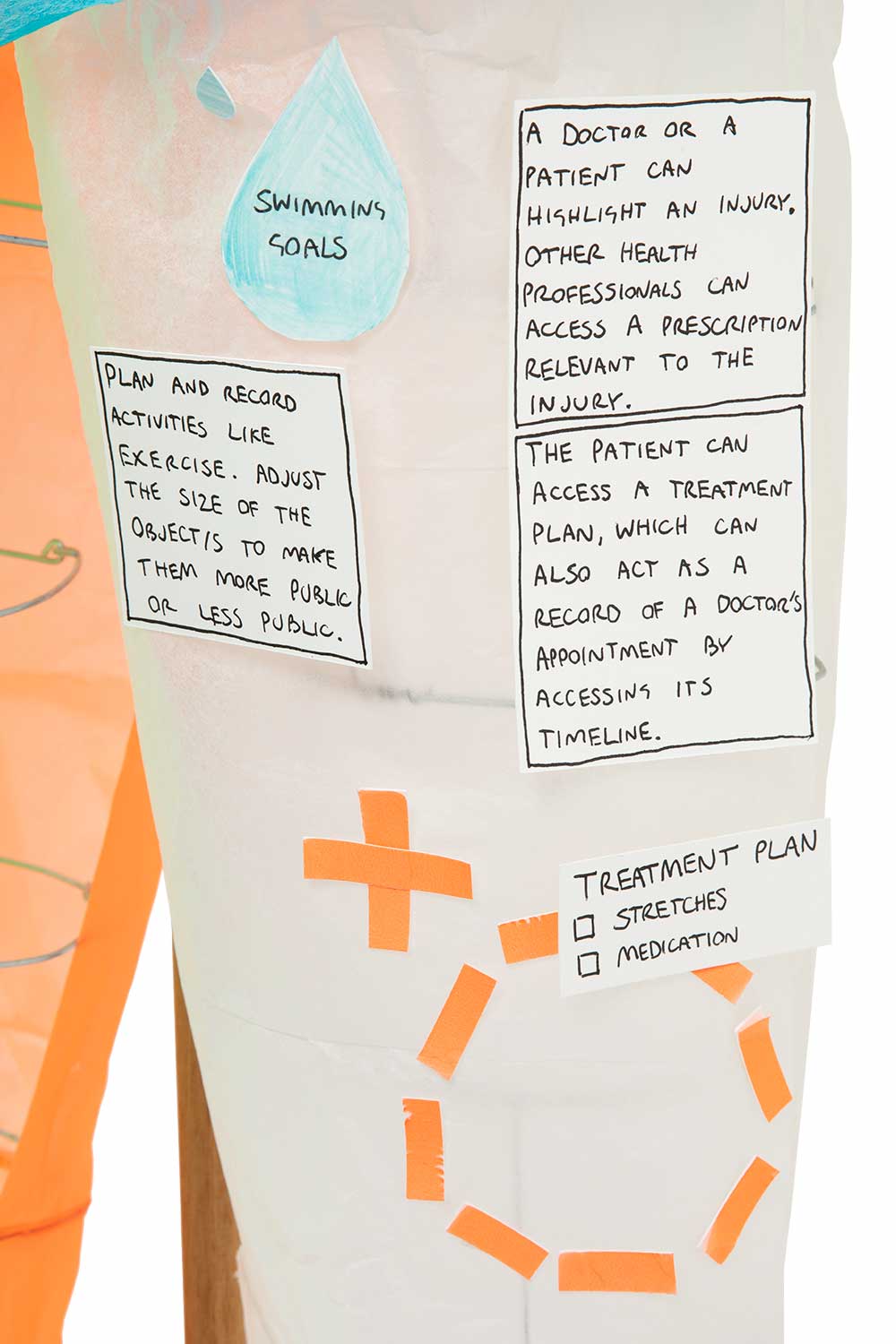

Computational Costume v0 featuring personal effects. Photography by Jon McCormack.

Computational Costume v0 featuring health treatment plan and swimming goals. Photography by Jon McCormack.

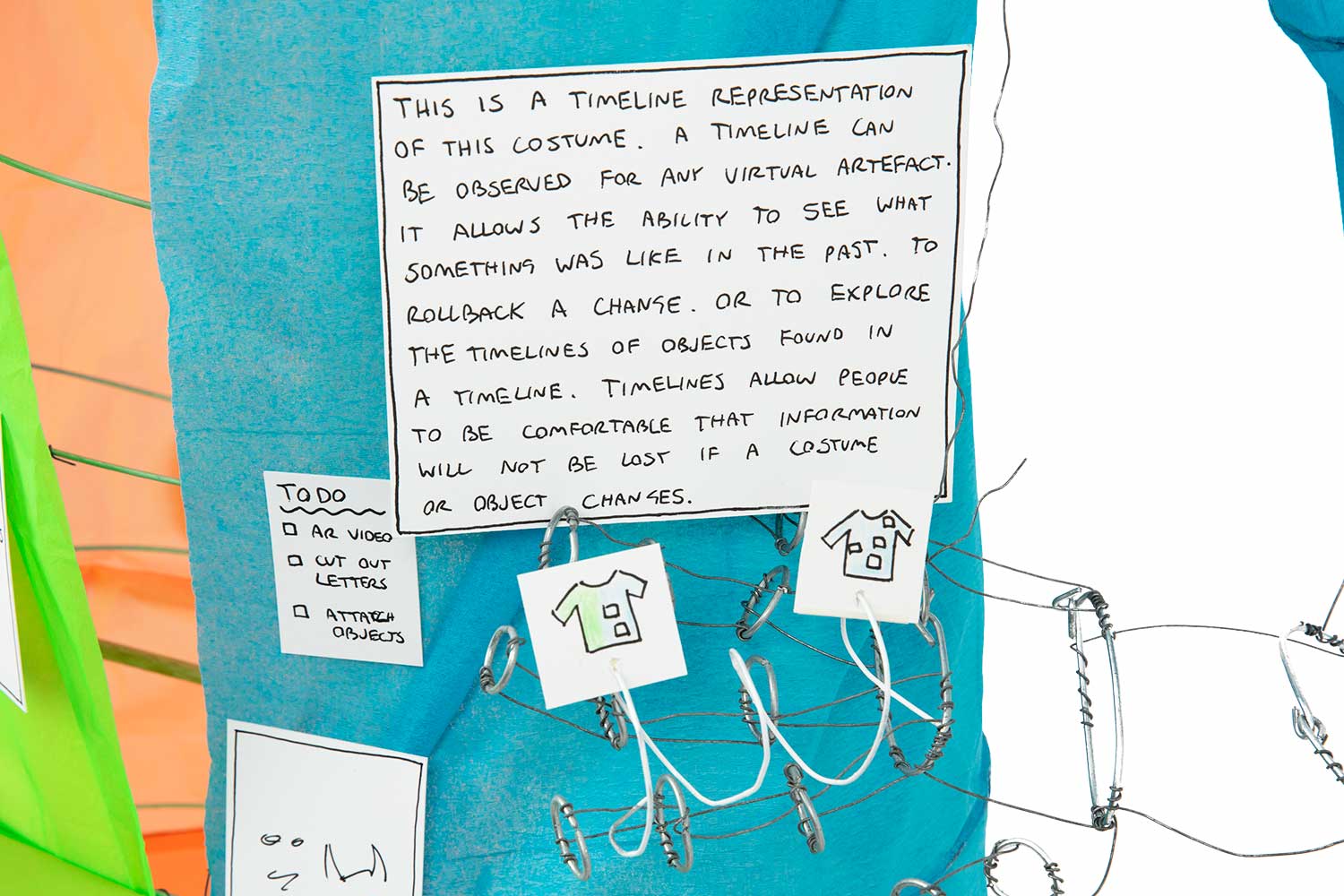

Timeline tool in Computational Costume v0. Photography by Jon McCormack.

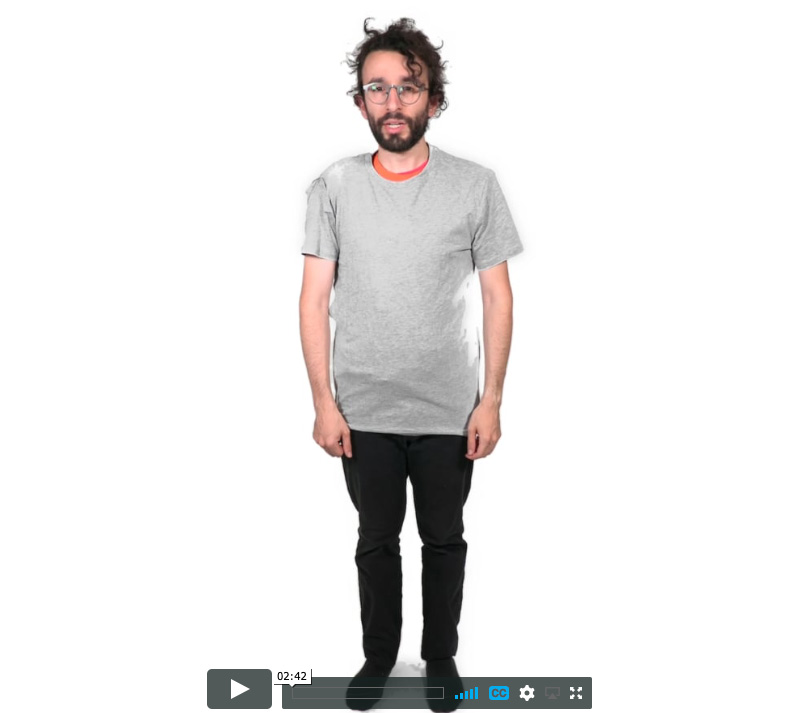

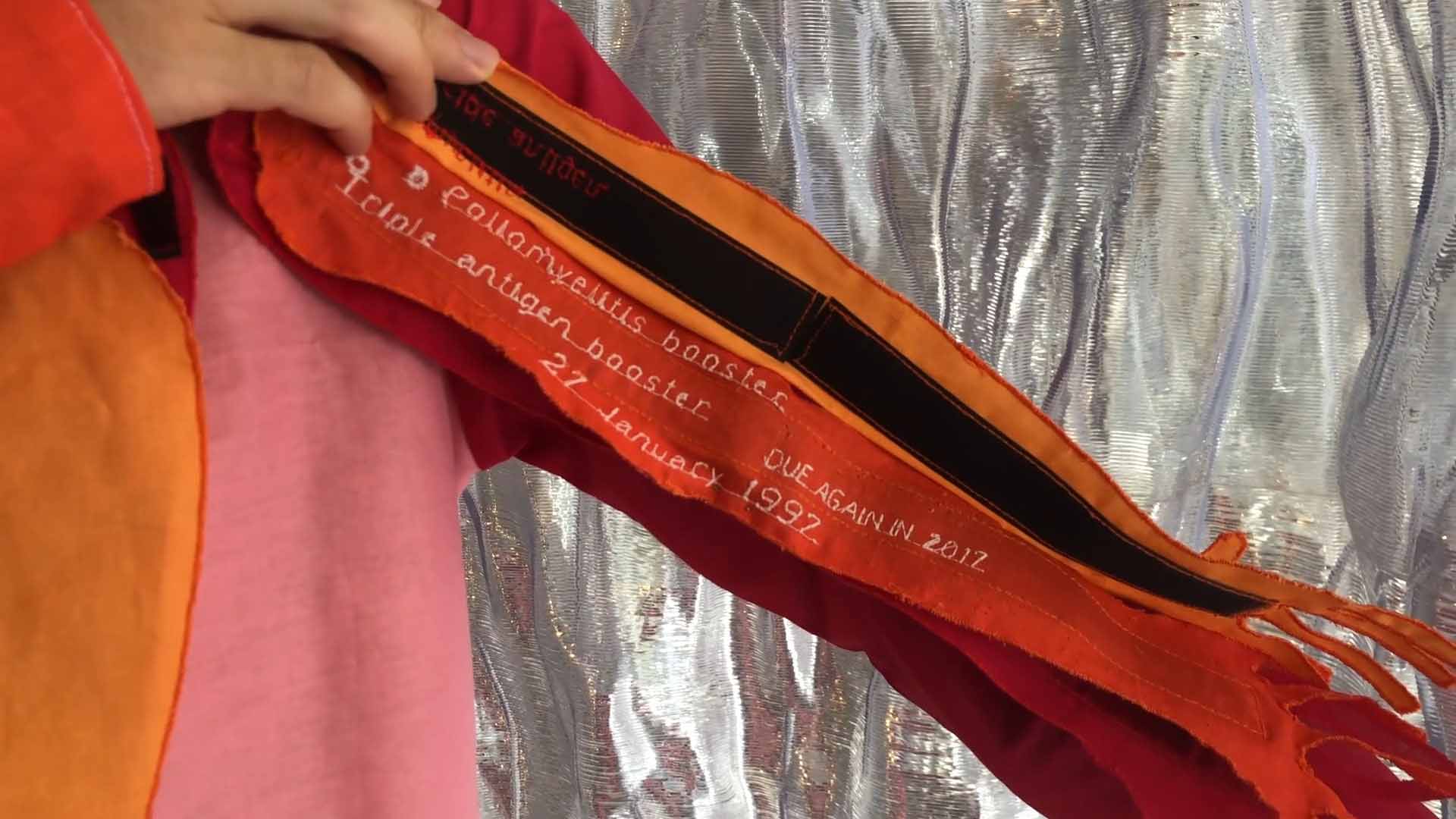

Re-enactment video of the Computational Costume v1 performance [71]. Video is author's own.

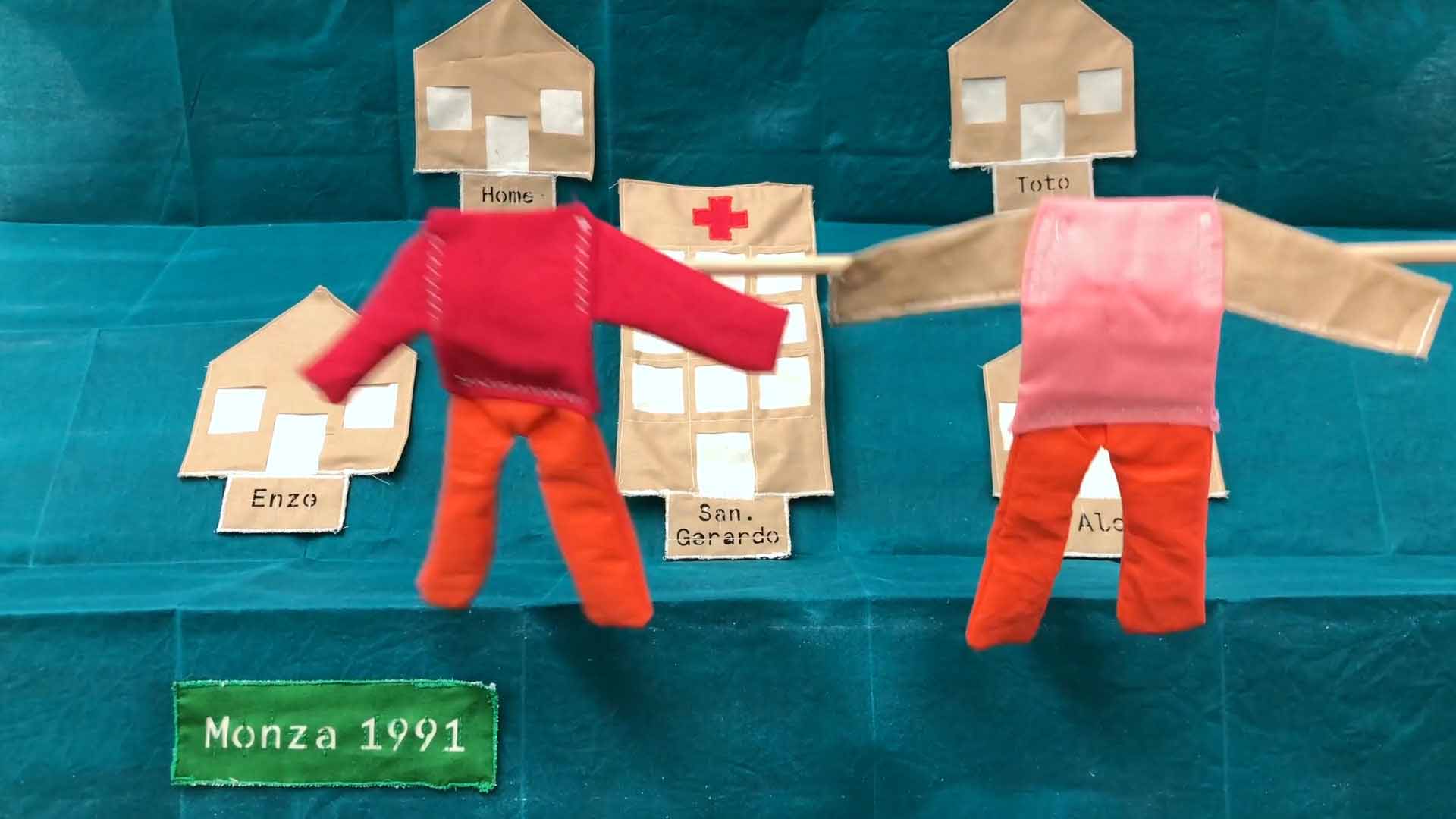

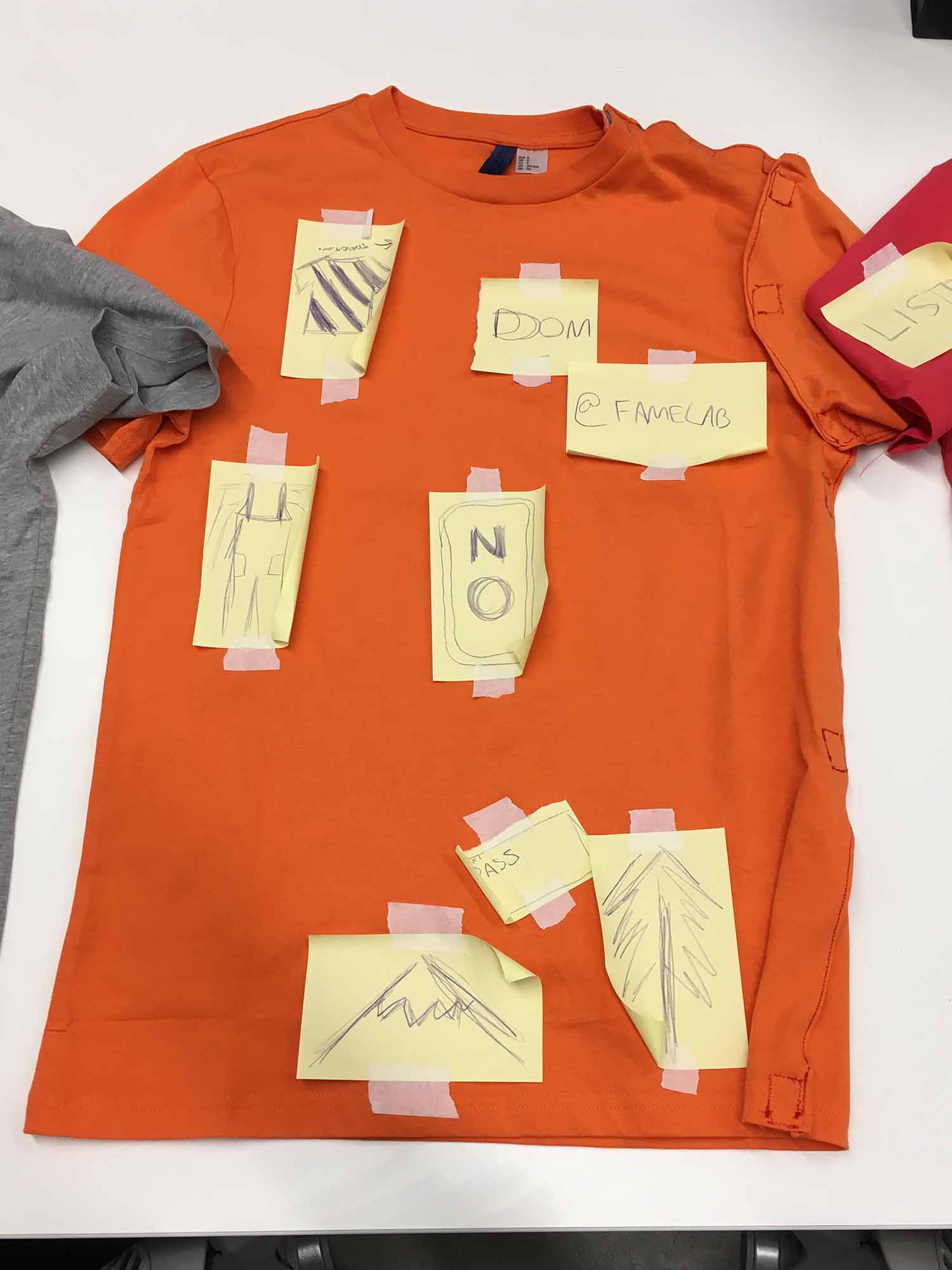

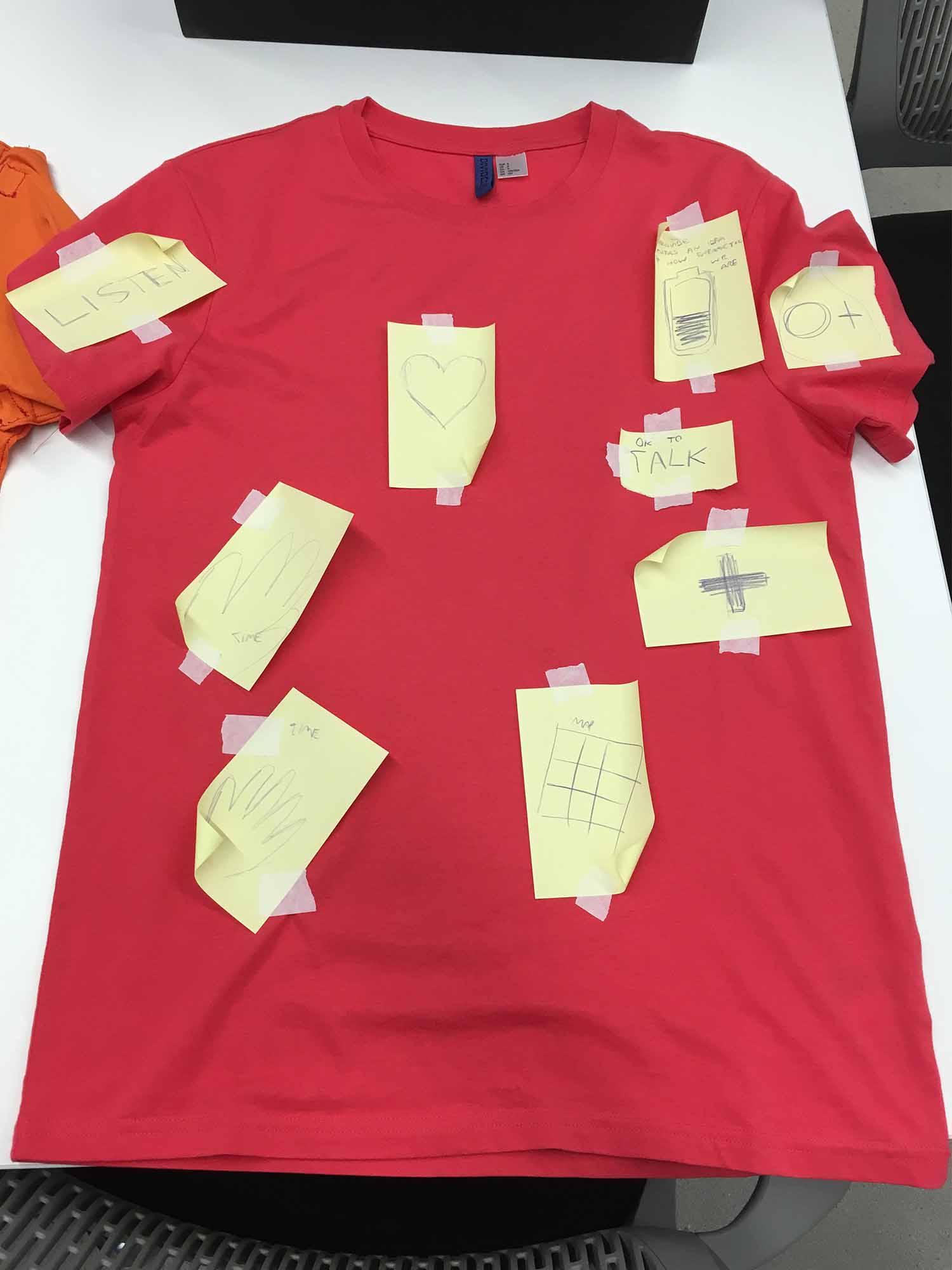

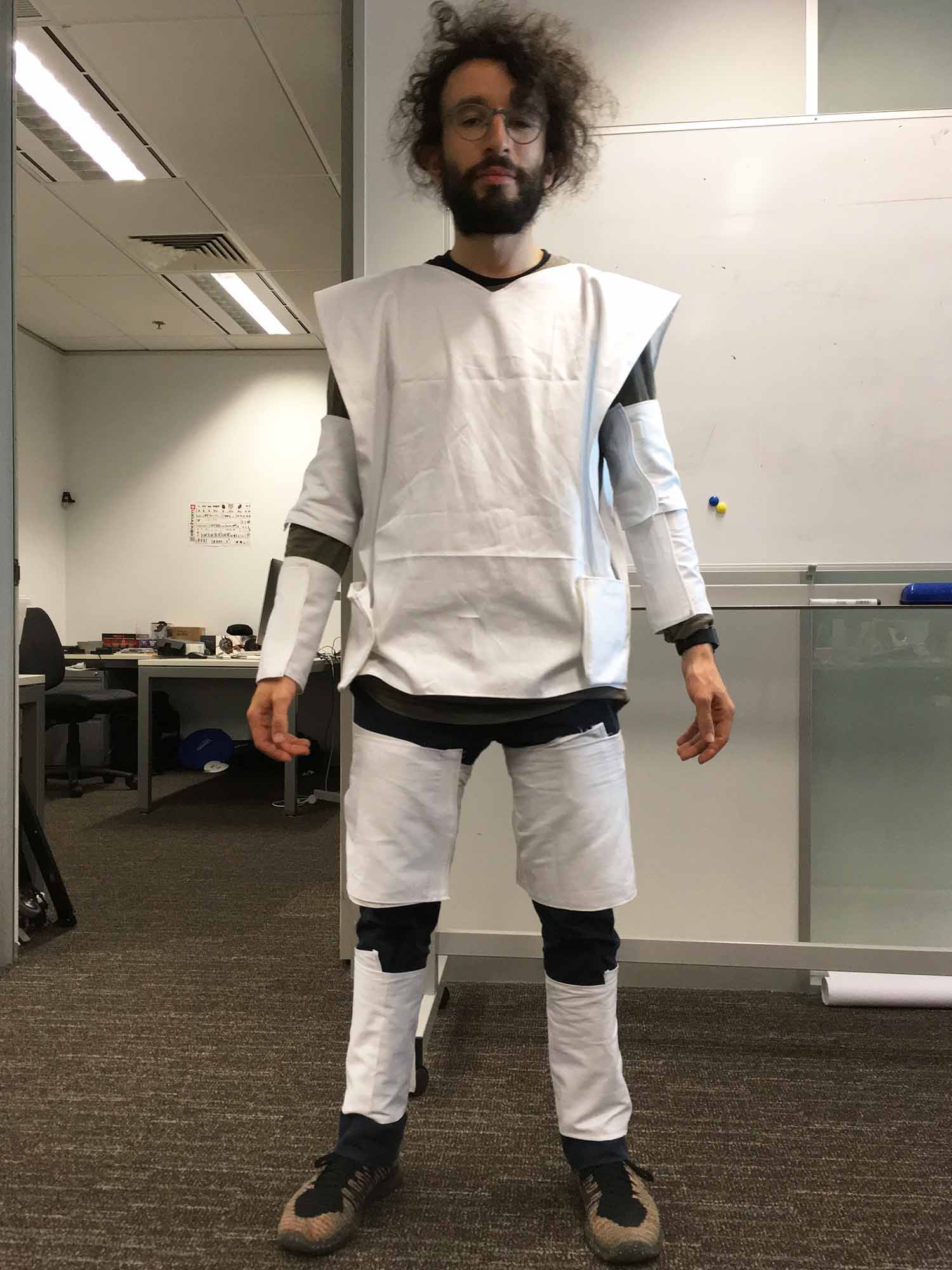

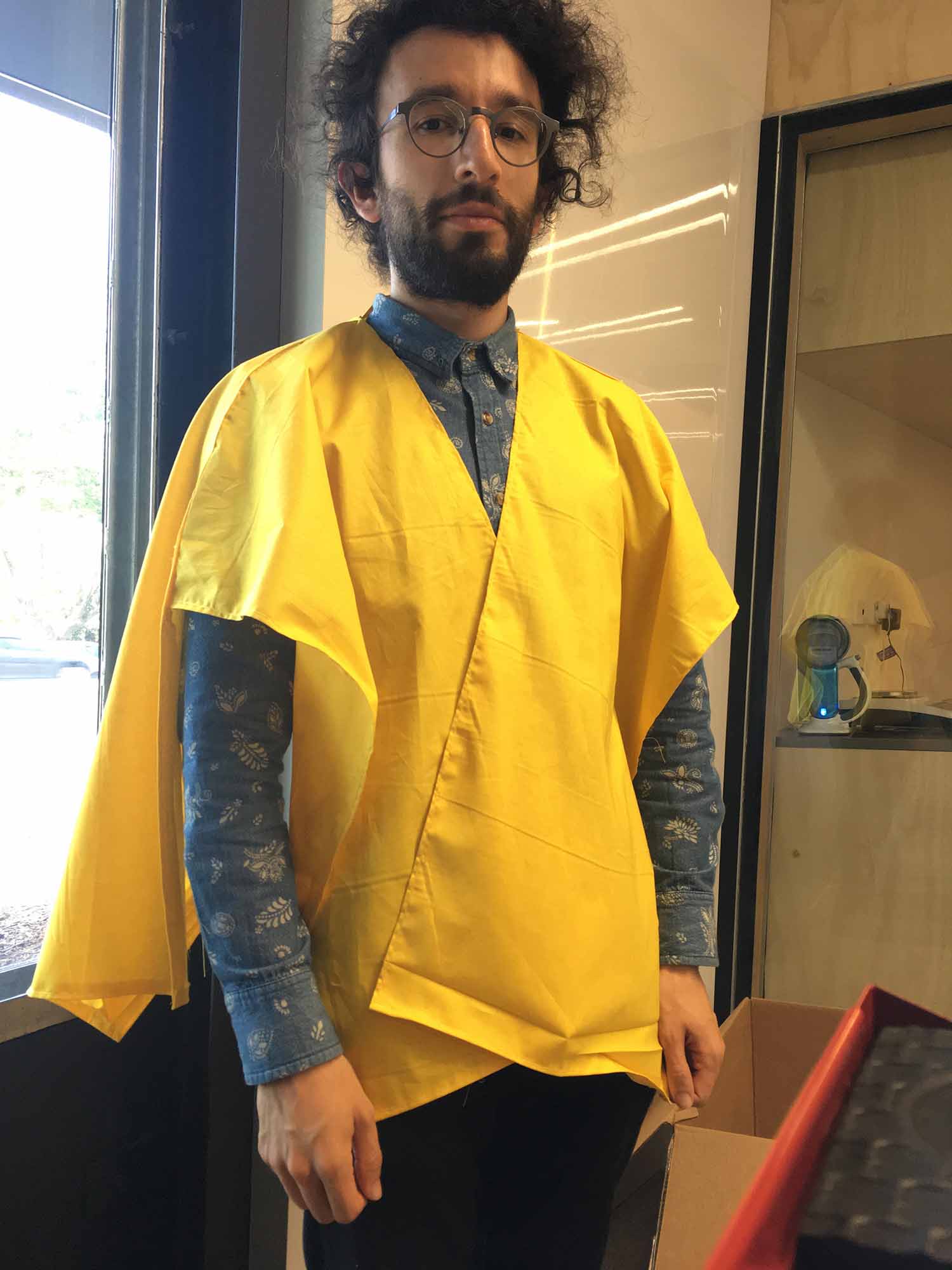

Computational Costume v1 from left to right: personal costume, worksite costume and medical emergency costume. Photography by Jon McCormack.

A copy of a boarded train and map tool, used to communicate the wearer's location and estimated time of arrival in Computational Costume v1. Photography by Jon McCormack.

Some reading material made public in Computational Costume v1. Photography by Jon McCormack.

A worksite costume indicating a job for the wearer, which can be affixed to the costume in Computational Costume v1. Photography by Jon McCormack.

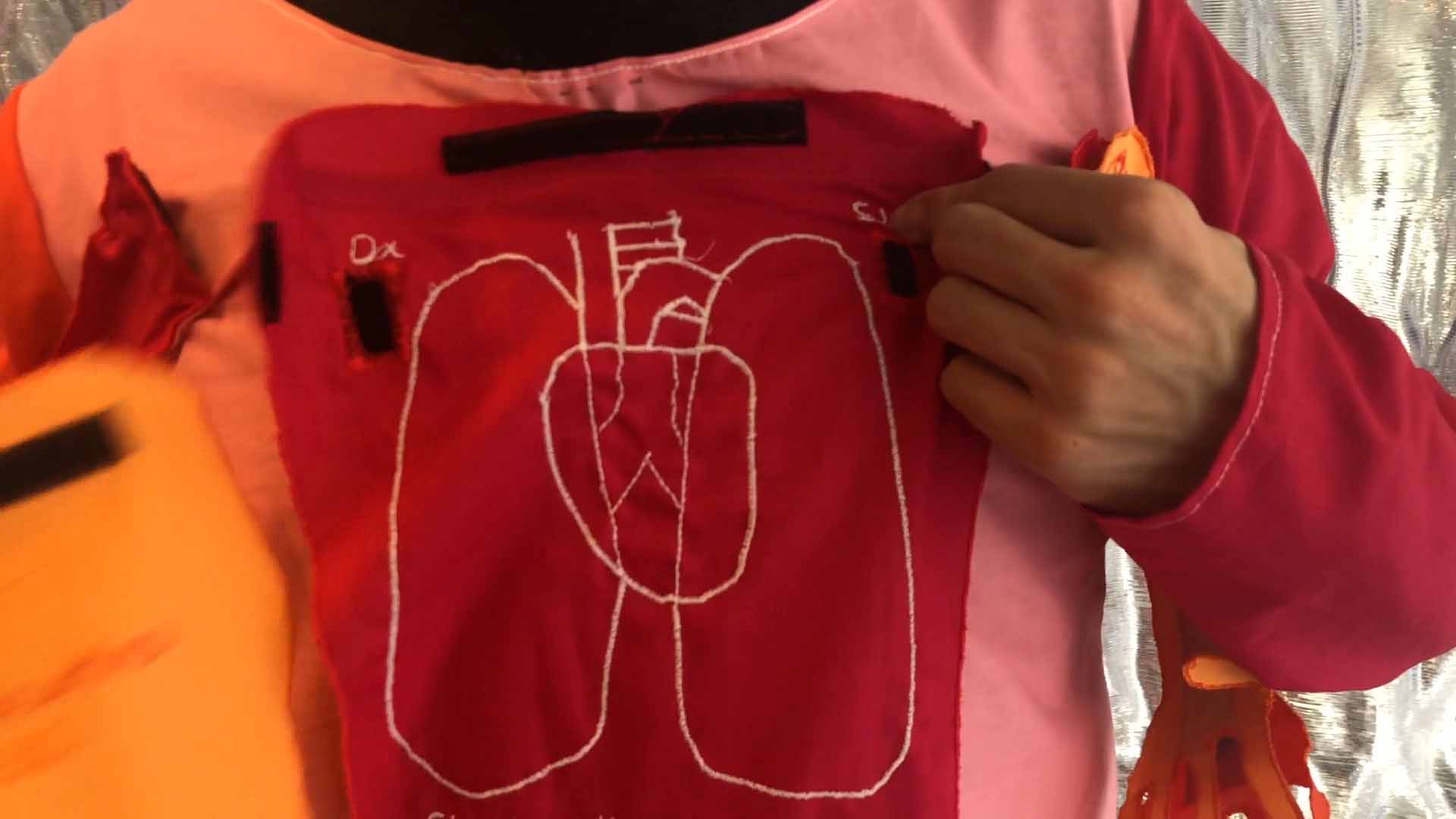

A medical emergency costume displaying areas of injury with indication of a drug administered (displayed as an 'F' for Fentanyl), heart biometrics, enclosed private records and support sent by loved ones via touch from the map tool in Computational Costume v1. Photography by Jon McCormack.

Computational Costume v2 video [72]. Video is author's own.

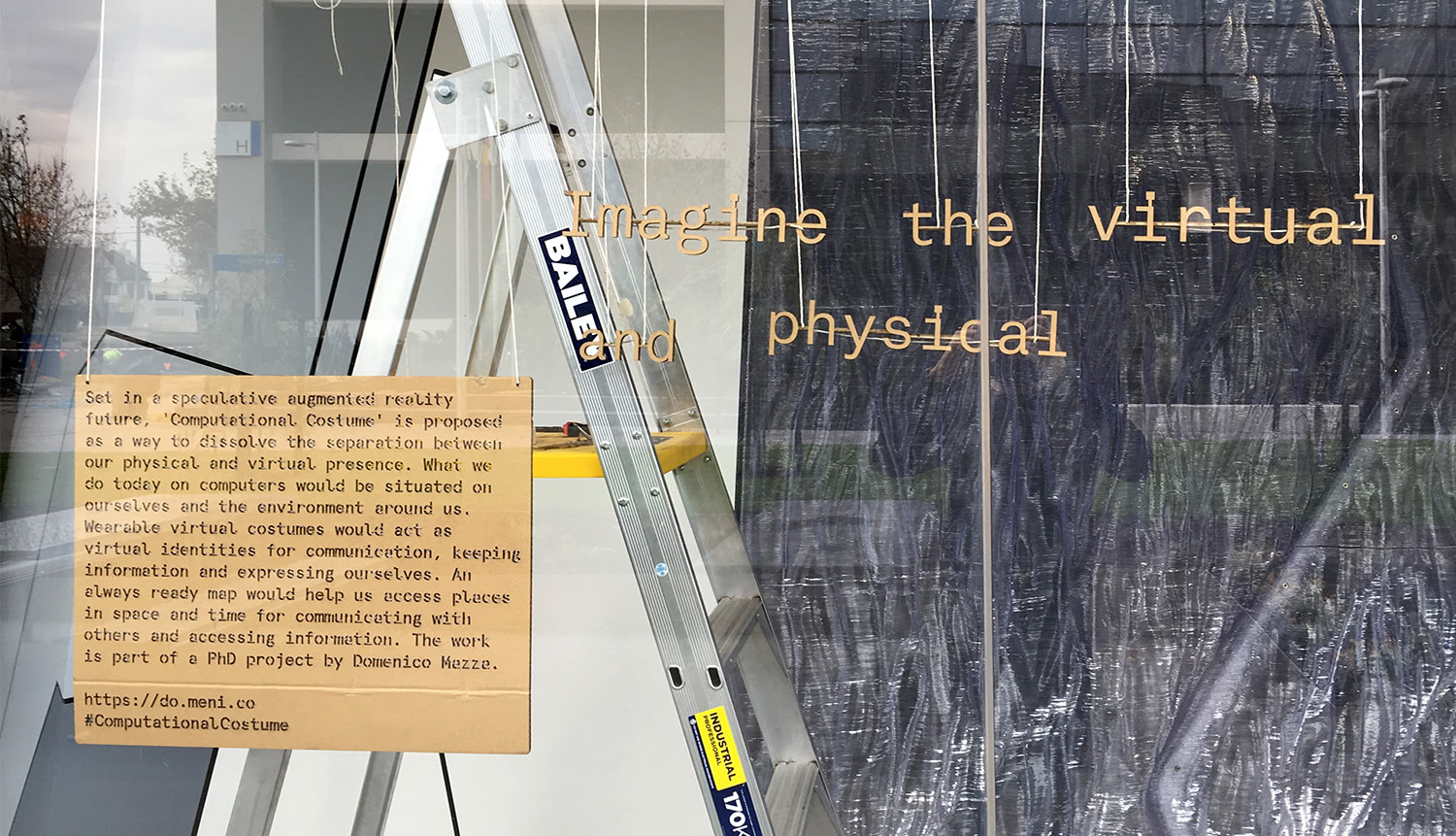

The Computational Costume v2 video, costumes and props on display at Monash University's SensiLab The Looking Glass window display, Caulfield, Victoria, Australia from 1 July 2018 until 26 November 2018. Image is author's own.

The Computational Costume v2 costumes and props, with video, on display at the Design Translations exhibition by Health Collab, MADA Gallery, Caulfield, Victoria, Australia, 3–6 December 2018. Image is author's own.

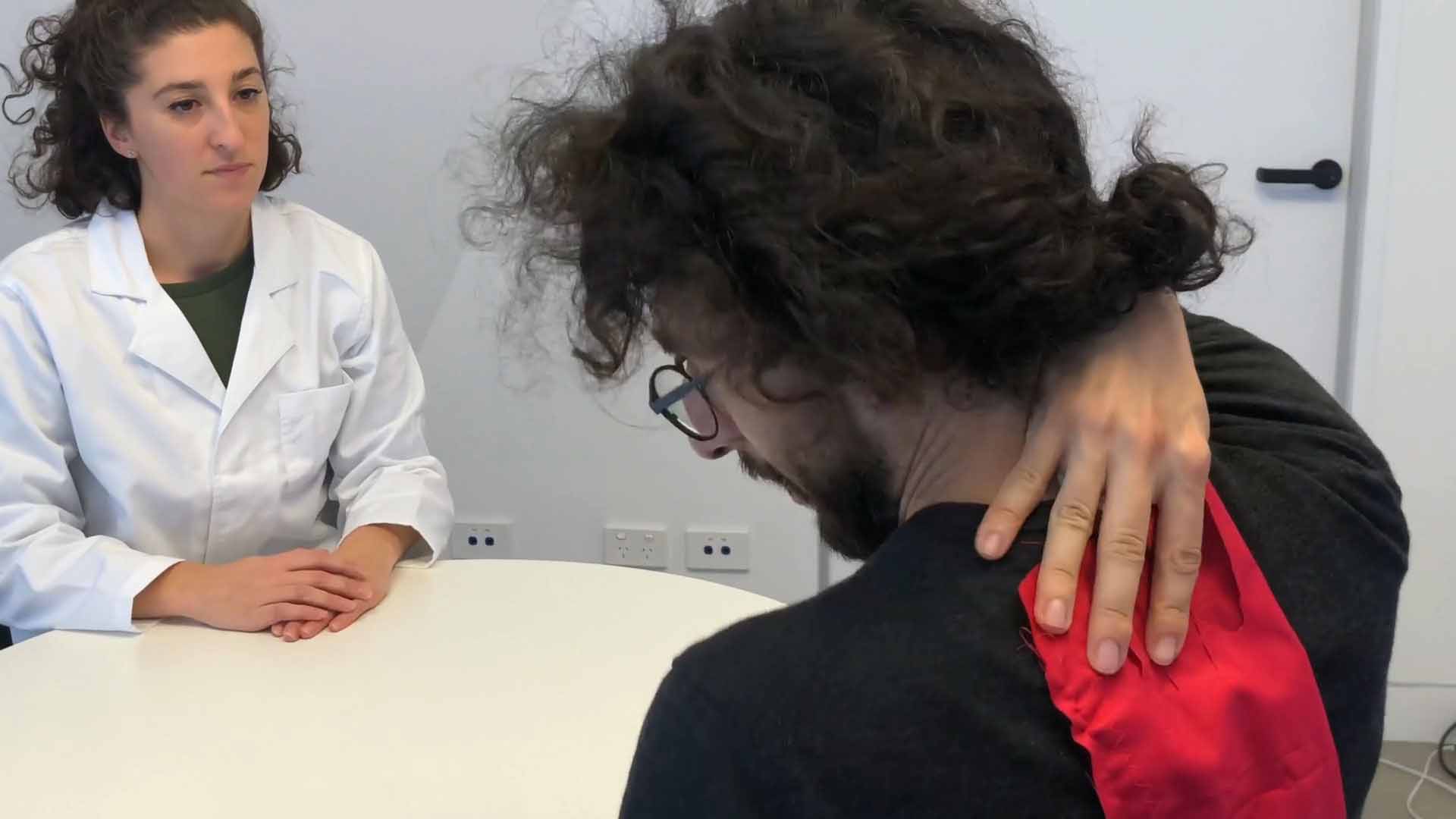

Applying a mark onto the back using an object for marking and costume token at 00:40–00:42 in the Computational Costume v2 video [72]. Images are author's own.

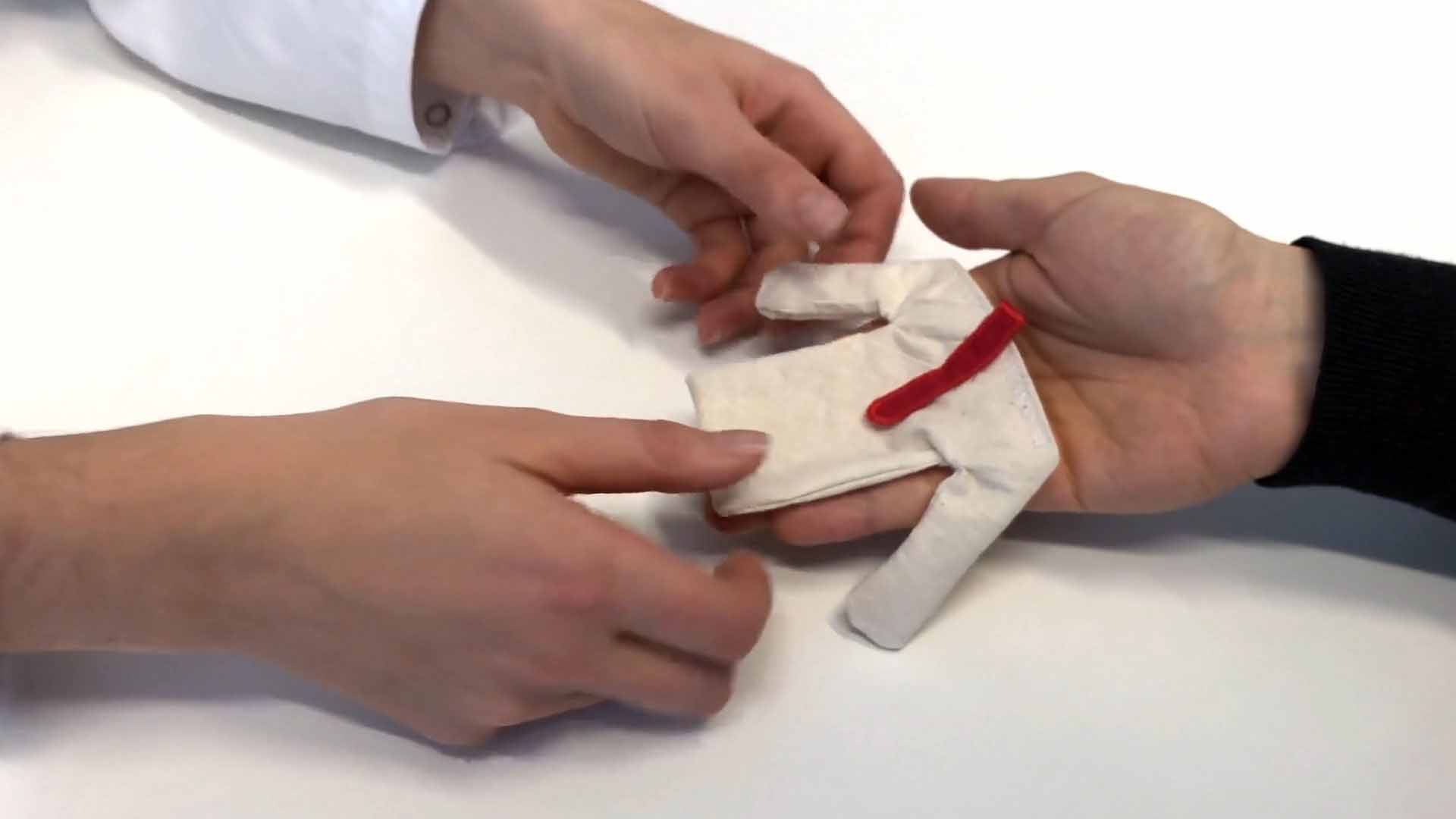

The costume token allows access to a costume. In this case a health professional can see a medical record costume at 00:46–00:52 in the Computational Costume v2 video [72]. Images are author's own.

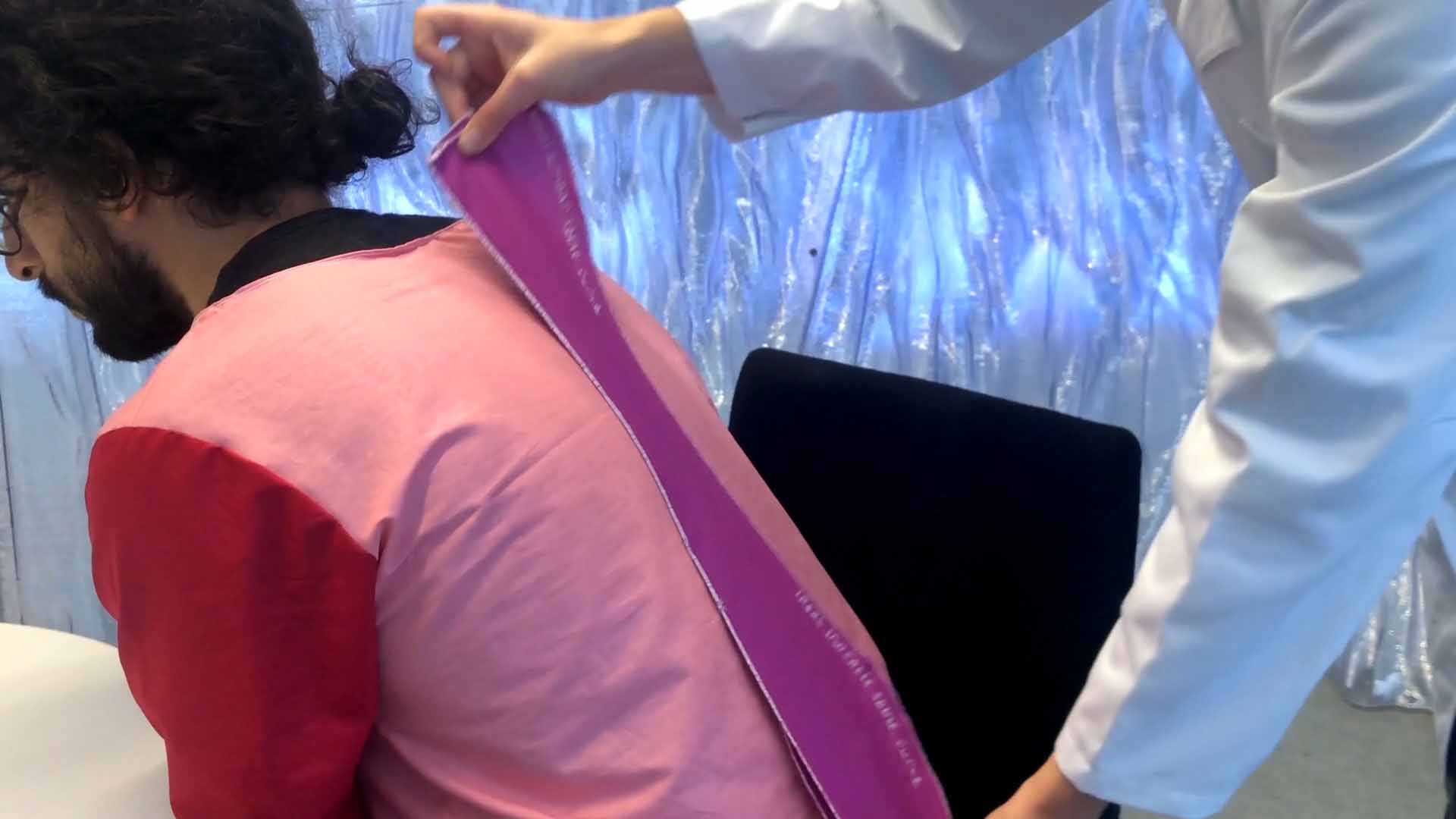

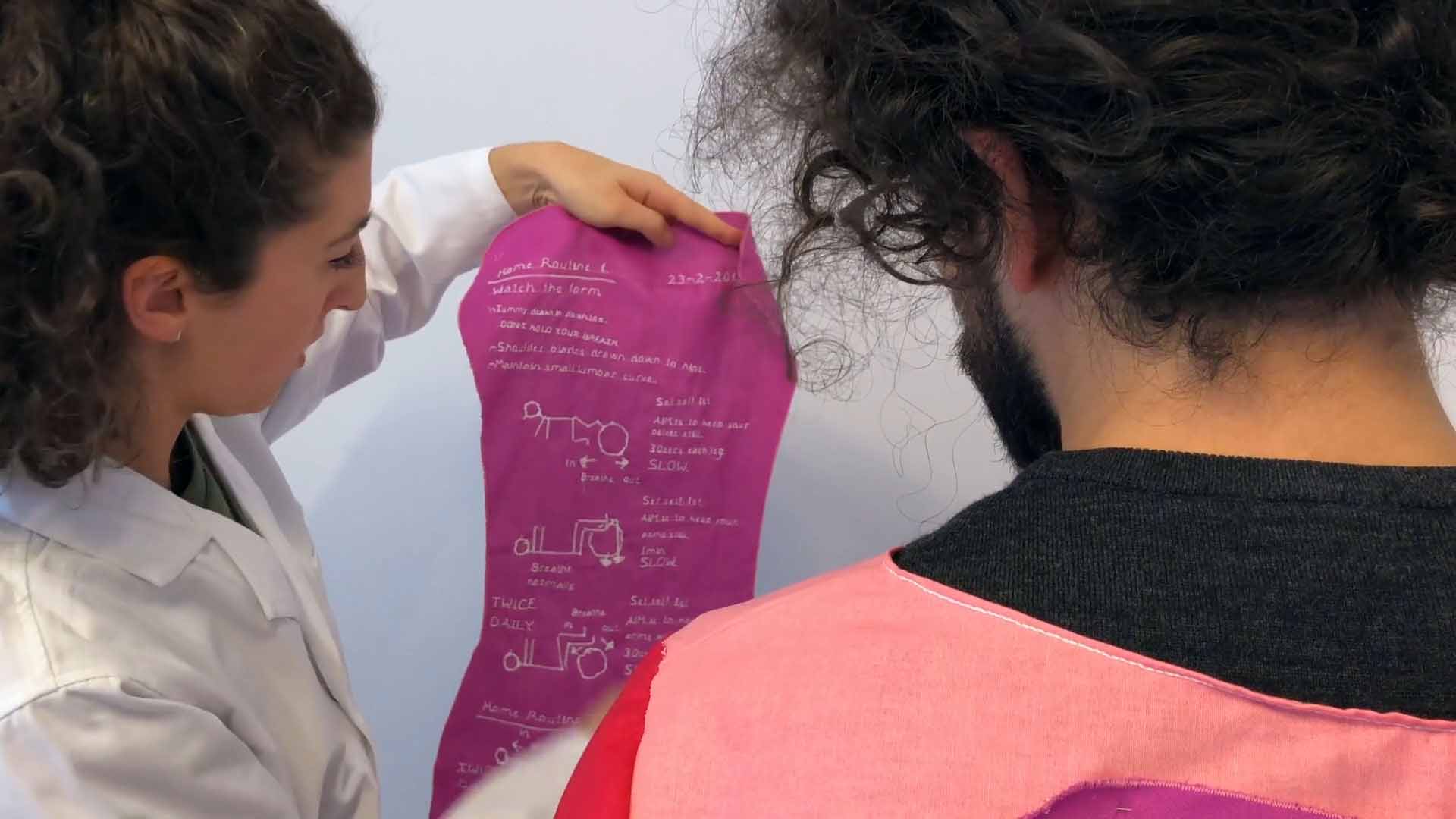

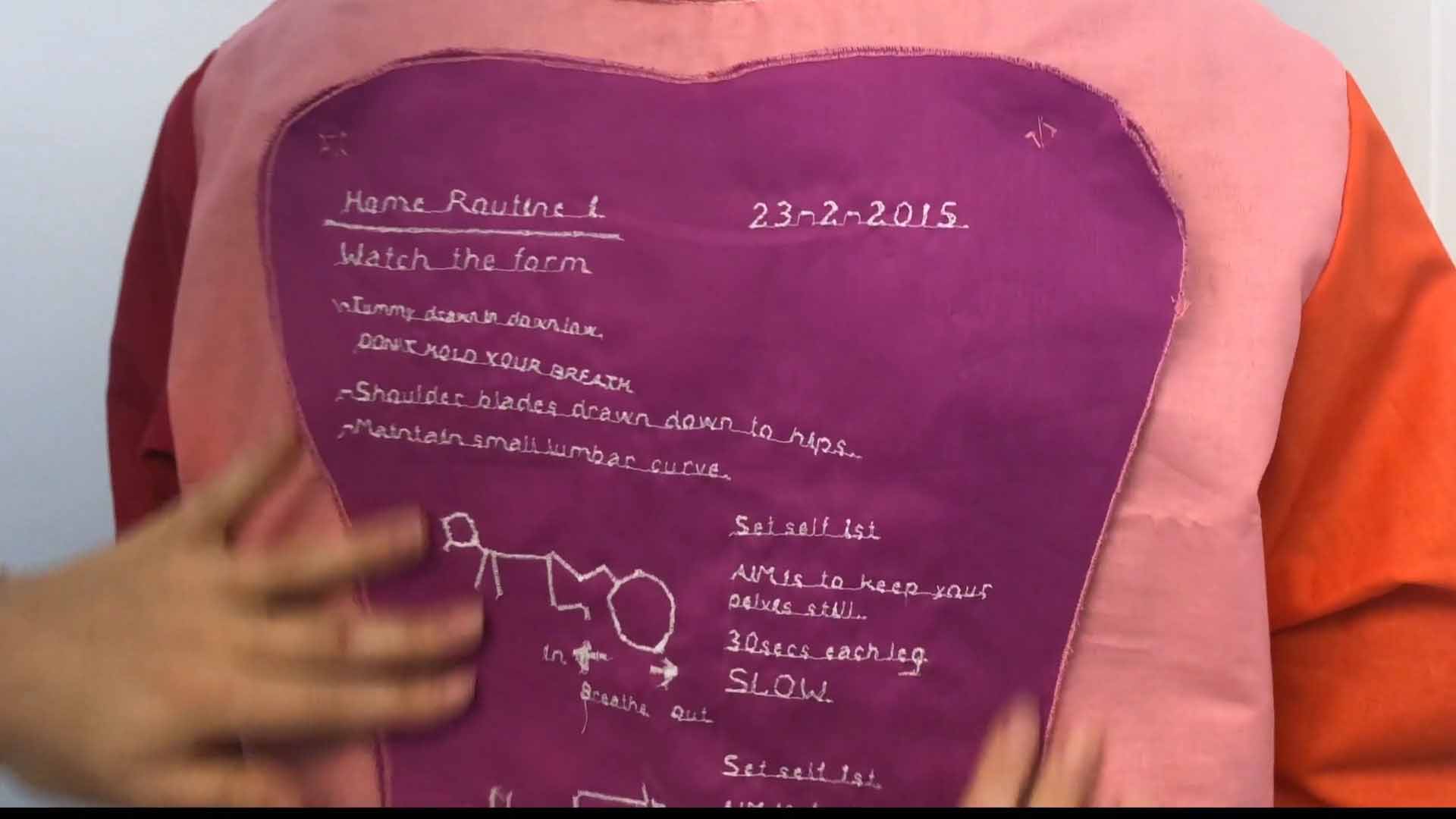

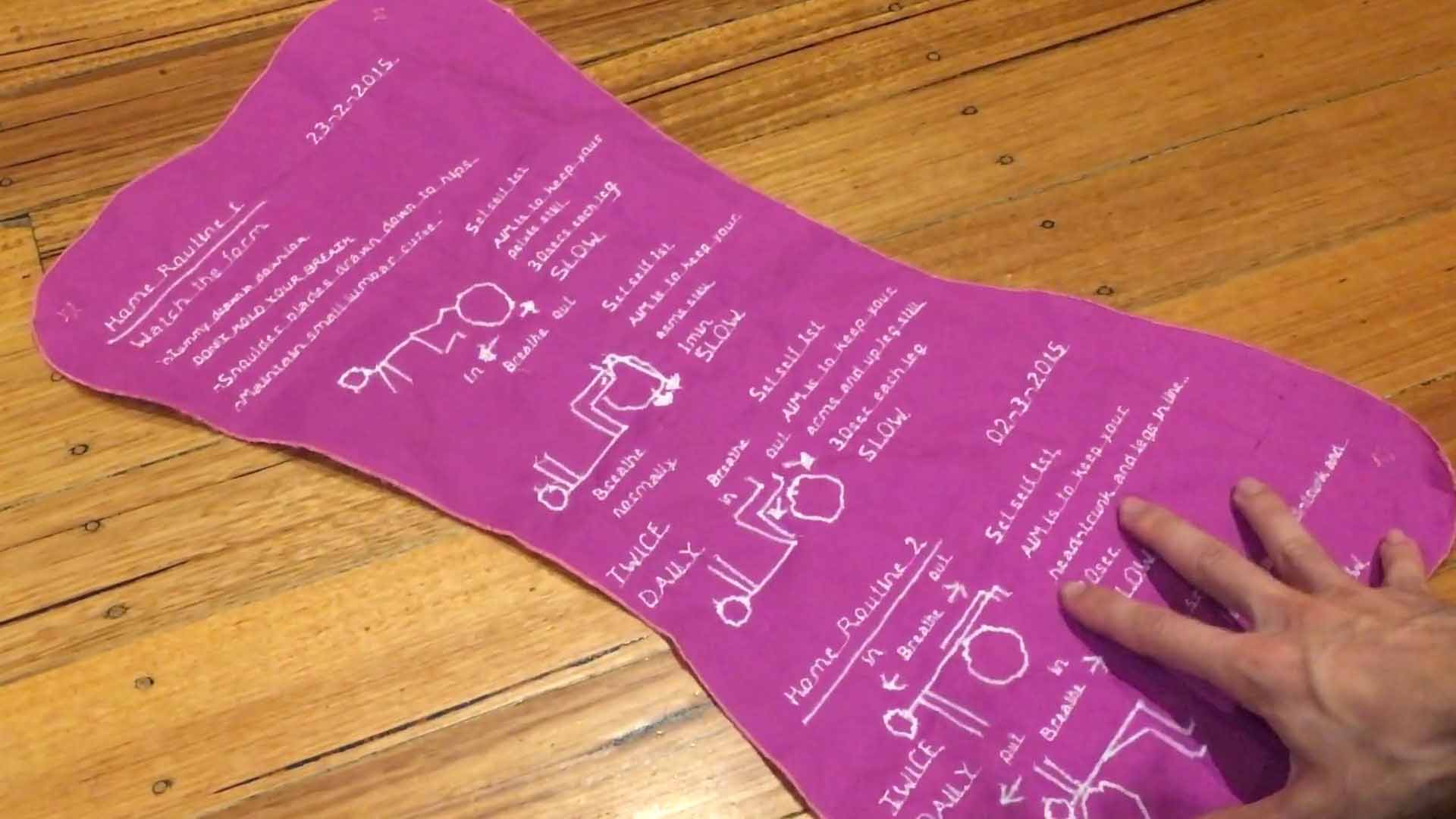

A health professional applies a ready-made diagram from a wall and specialised treatment plan to the patient's costume for reference at 00:52–01:19 in the Computational Costume v2 video [72]. Images are author's own.

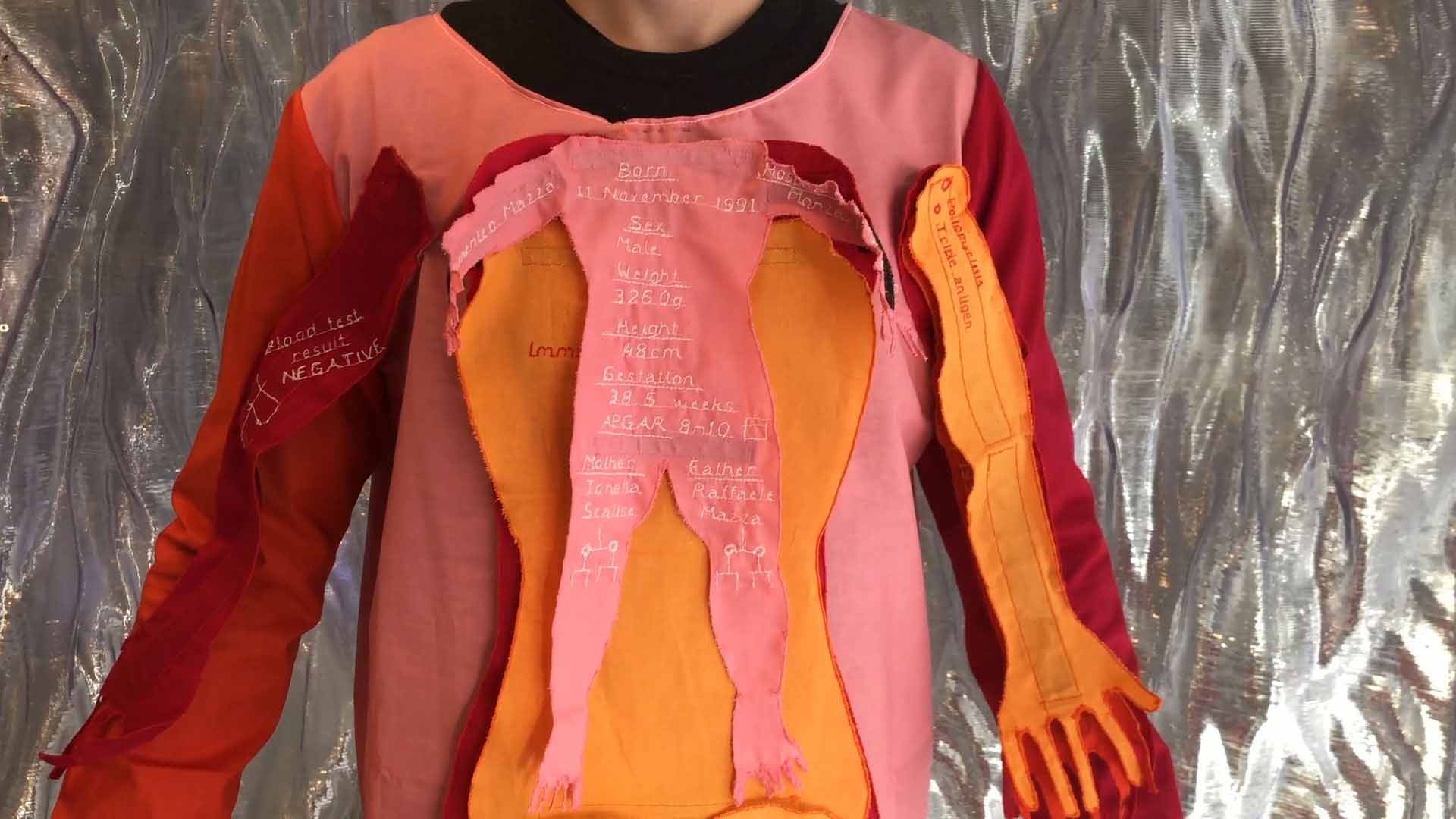

A medical record costume hosting a lifetime of records as chronologically ordered silhouettes at 01:32–01:40 in the Computational Costume v2 video [72]. Images are author's own.

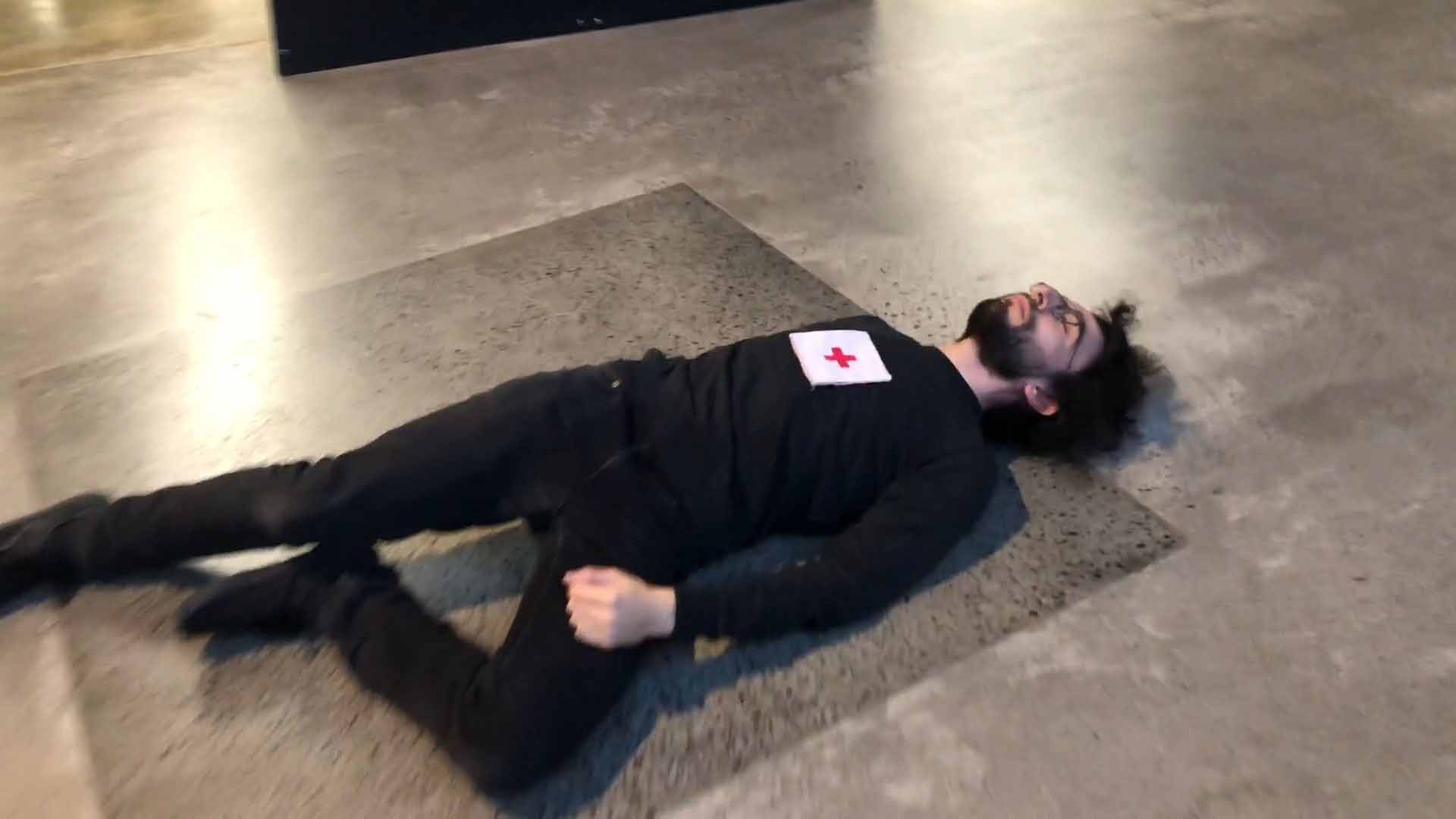

A medical record appears automatically on a wearer in an emergency situation as a call-to-action for bystanders at 01:53–01:56 in the Computational Costume v2 video [72]. Images are author's own.

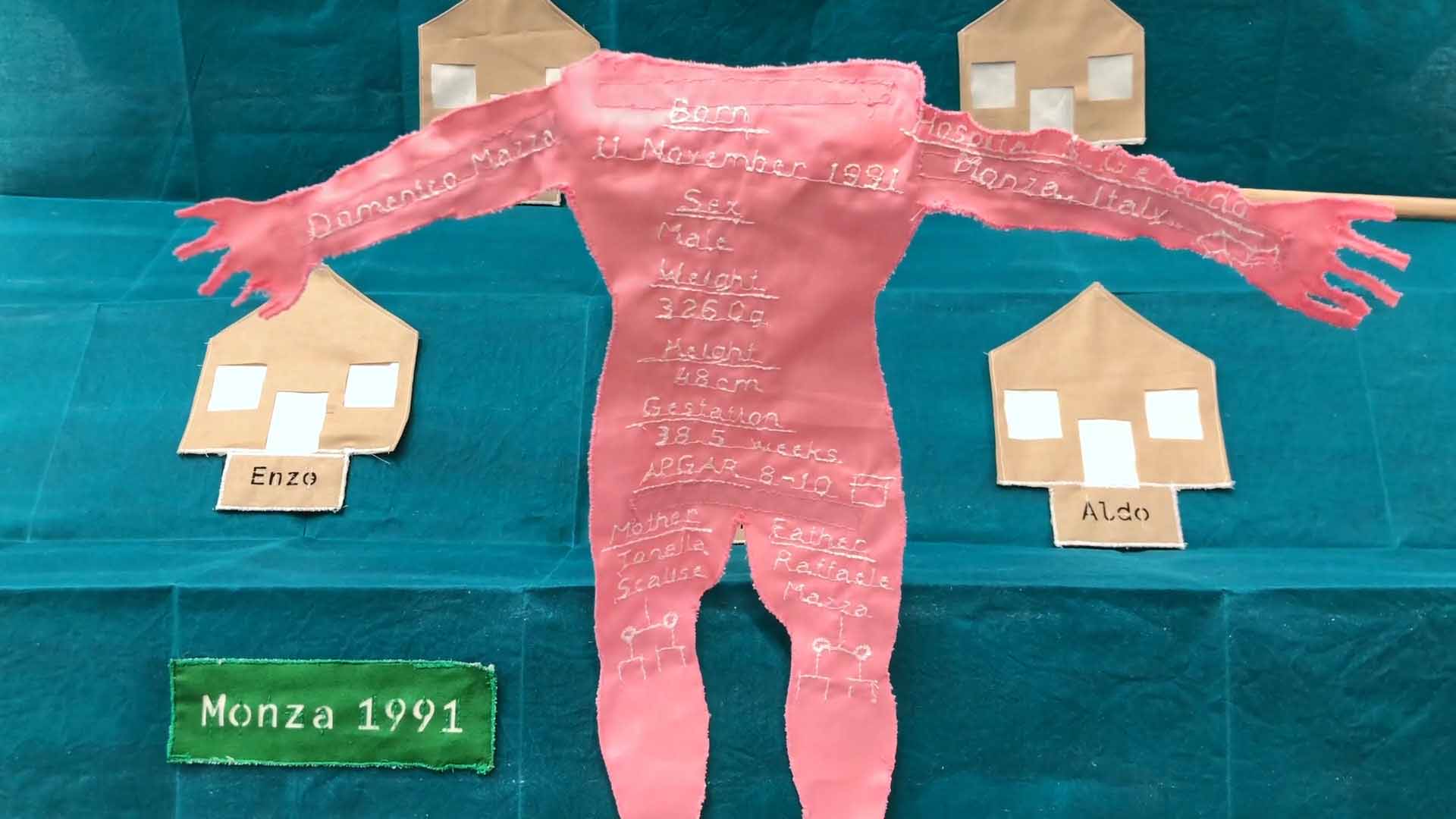

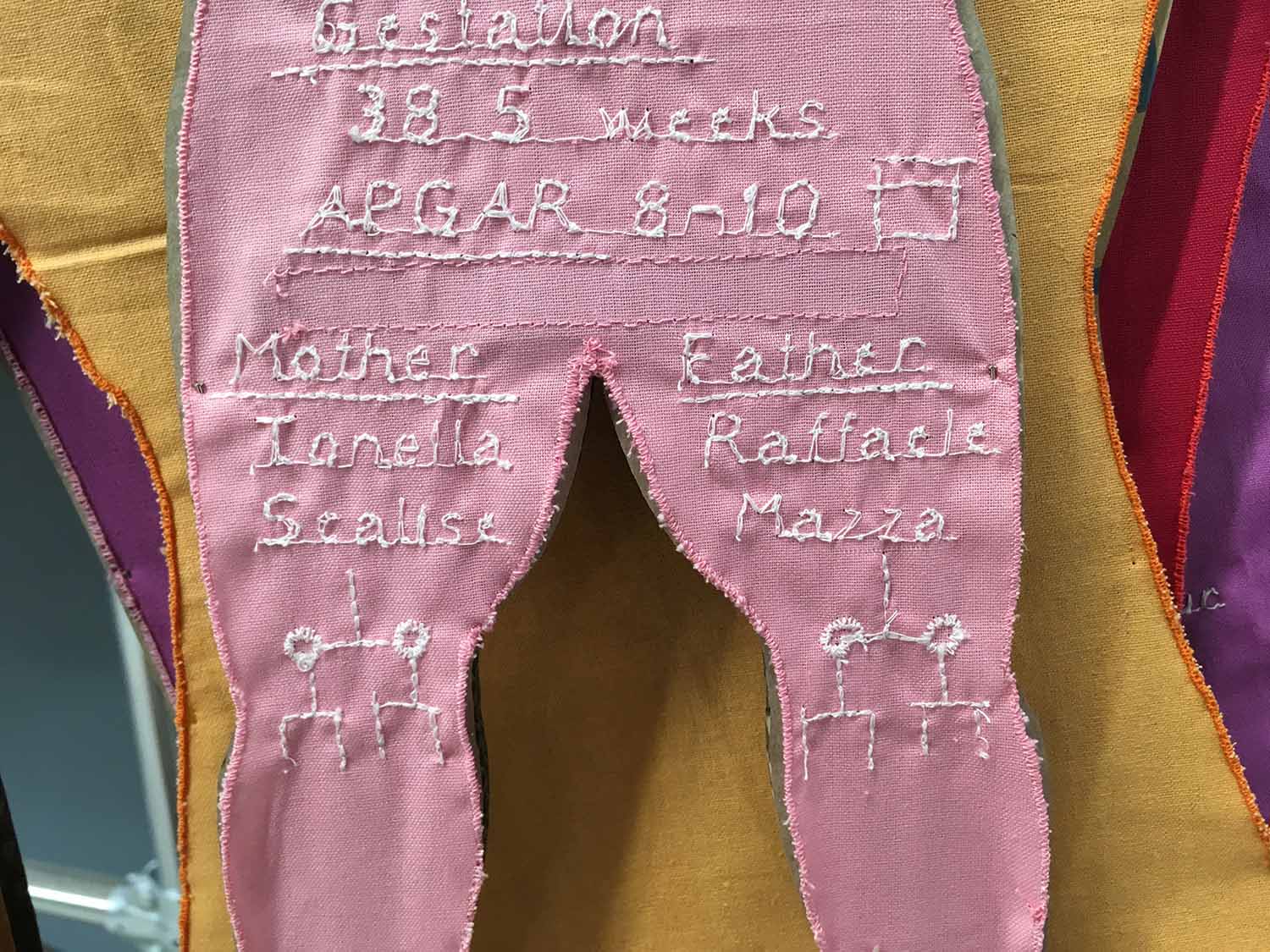

The map tool allowing access to a birth record's information on birth parents and birthplace at 02:04–02:06 in the Computational Costume v2 video [72]. Images are author's own.

The map tool facilitating communication between wearers and acting as a navigational aid at 02:16–02:23 in the Computational Costume v2 video [72]. Images are author's own.

The map tool allowing access to a remote location for object retrieval at 02:29 in the Computational Costume v2 video [72]. Image is author's own.

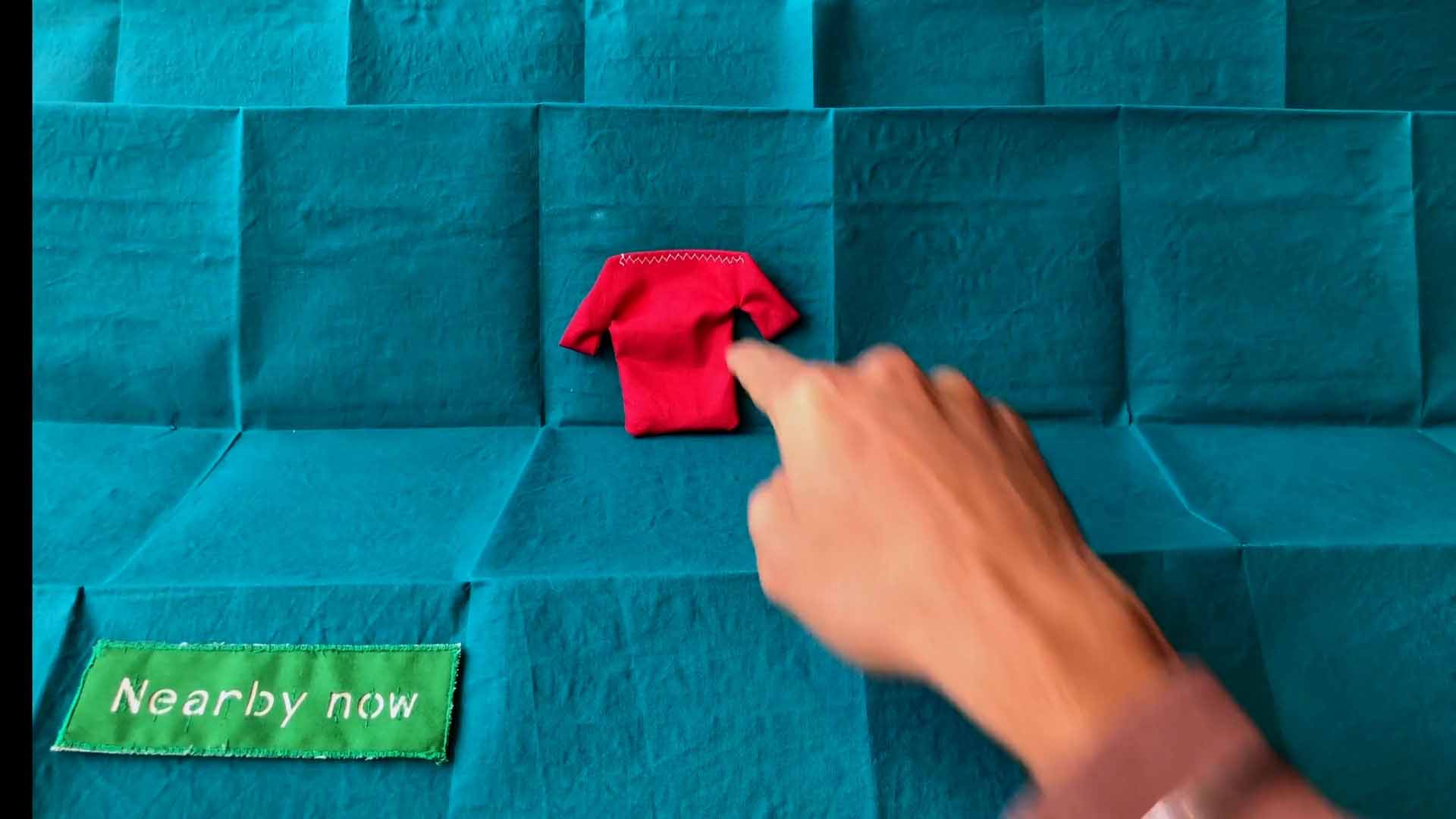

The costume and shared objects as tools to manage privacy at 02:40–03:05 in the Computational Costume v2 video [72]. Images are author's own.

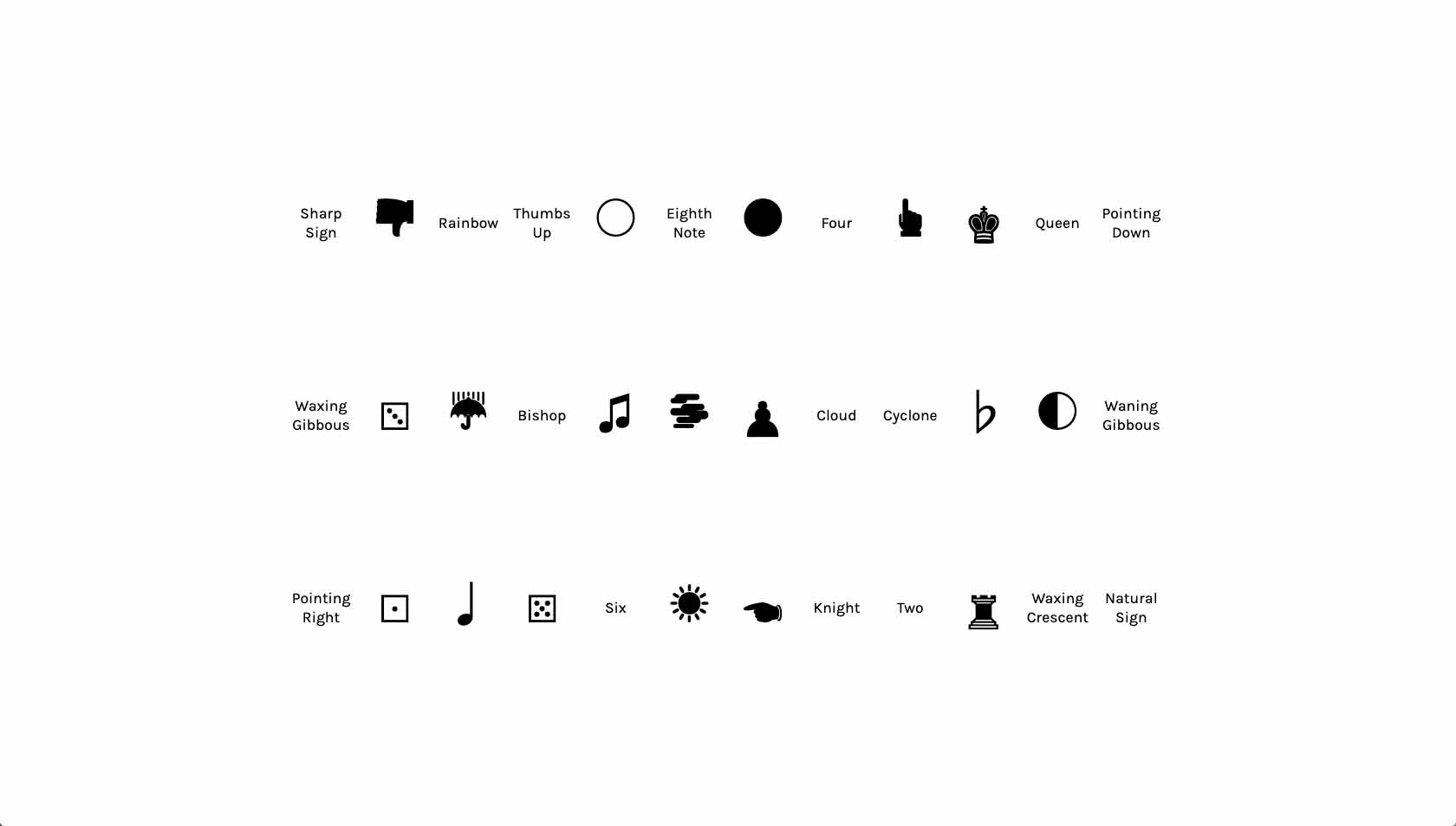

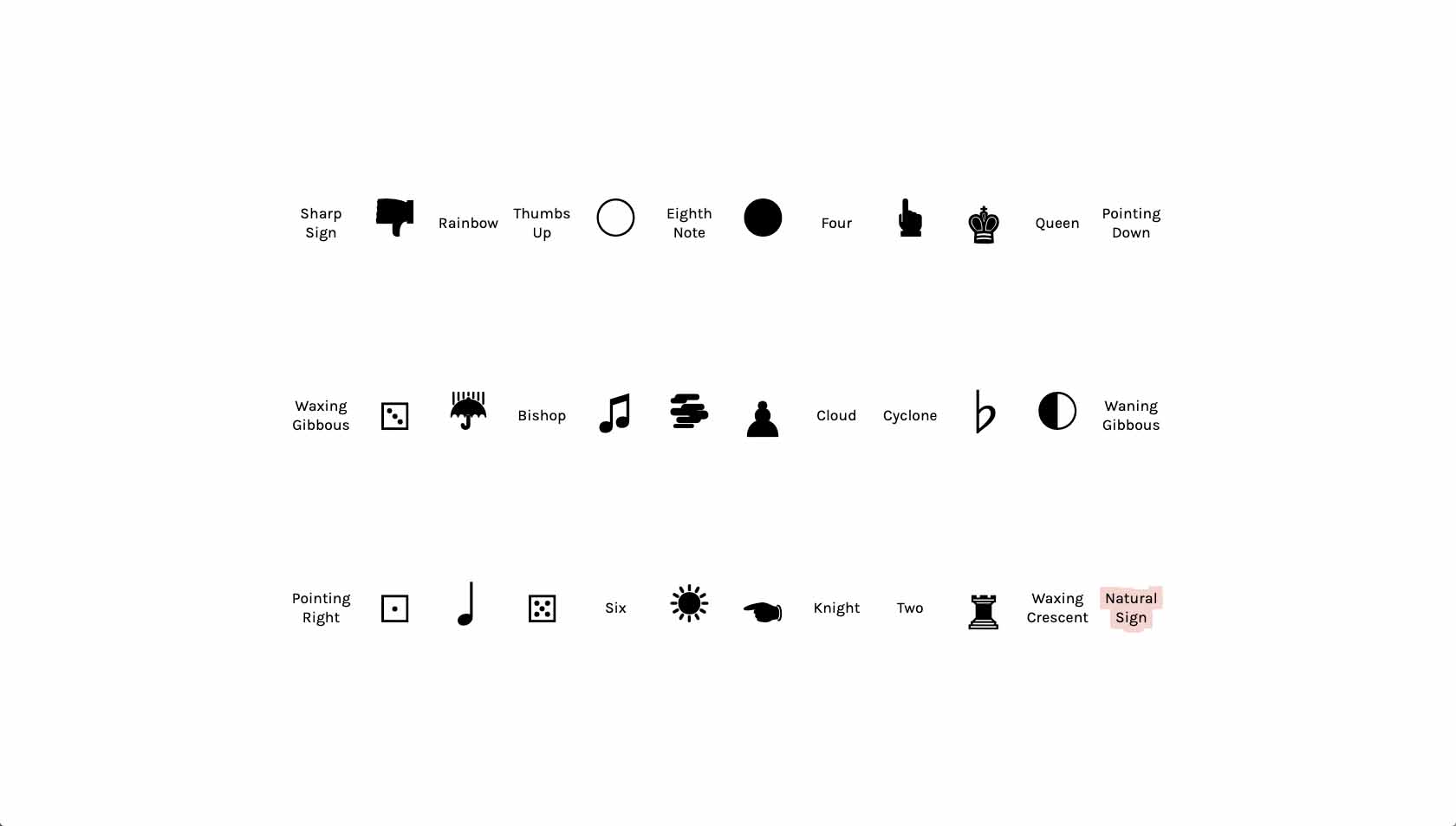

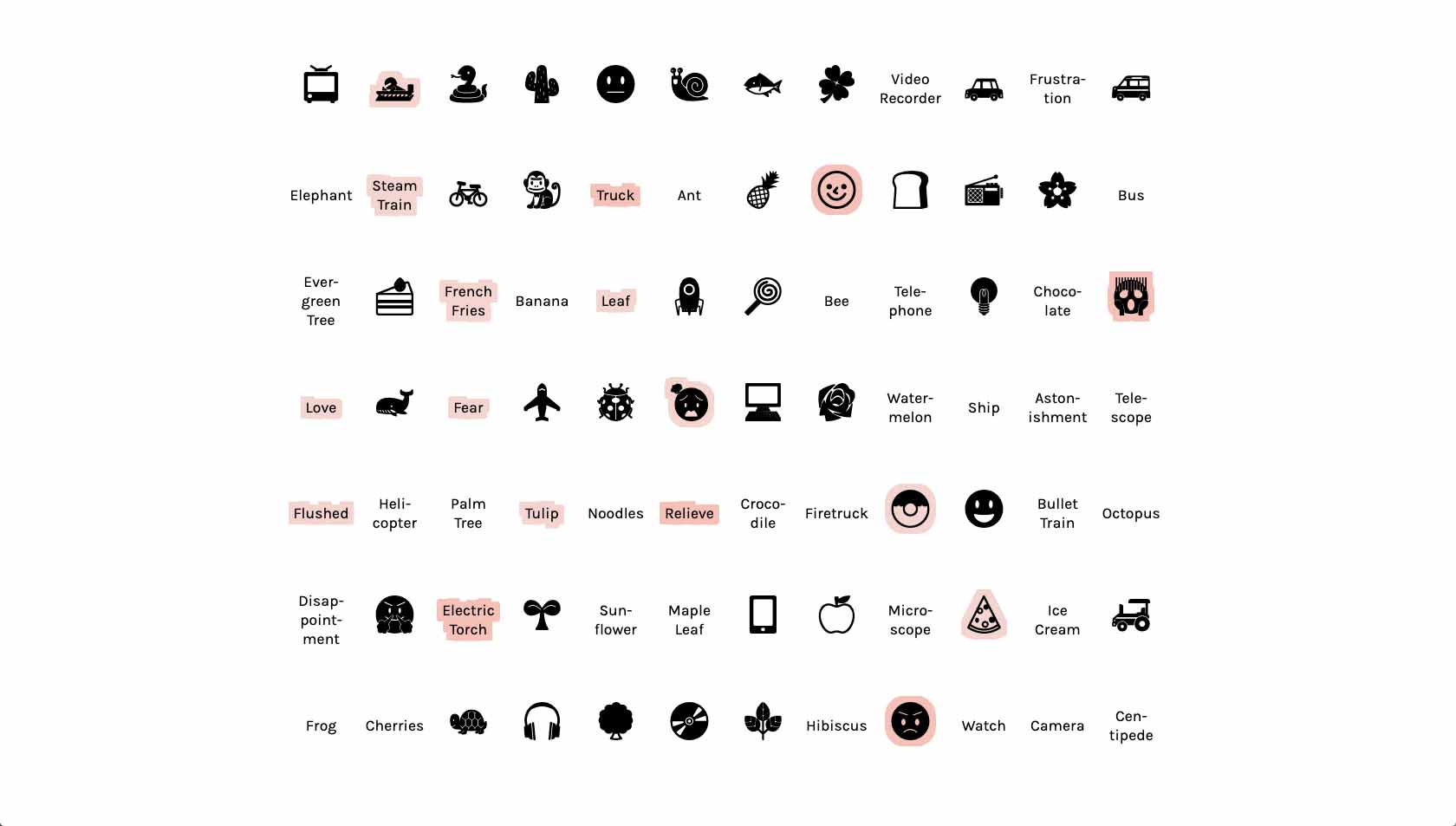

The first turn in the practice round of the Memory Menu. Images are author's own.

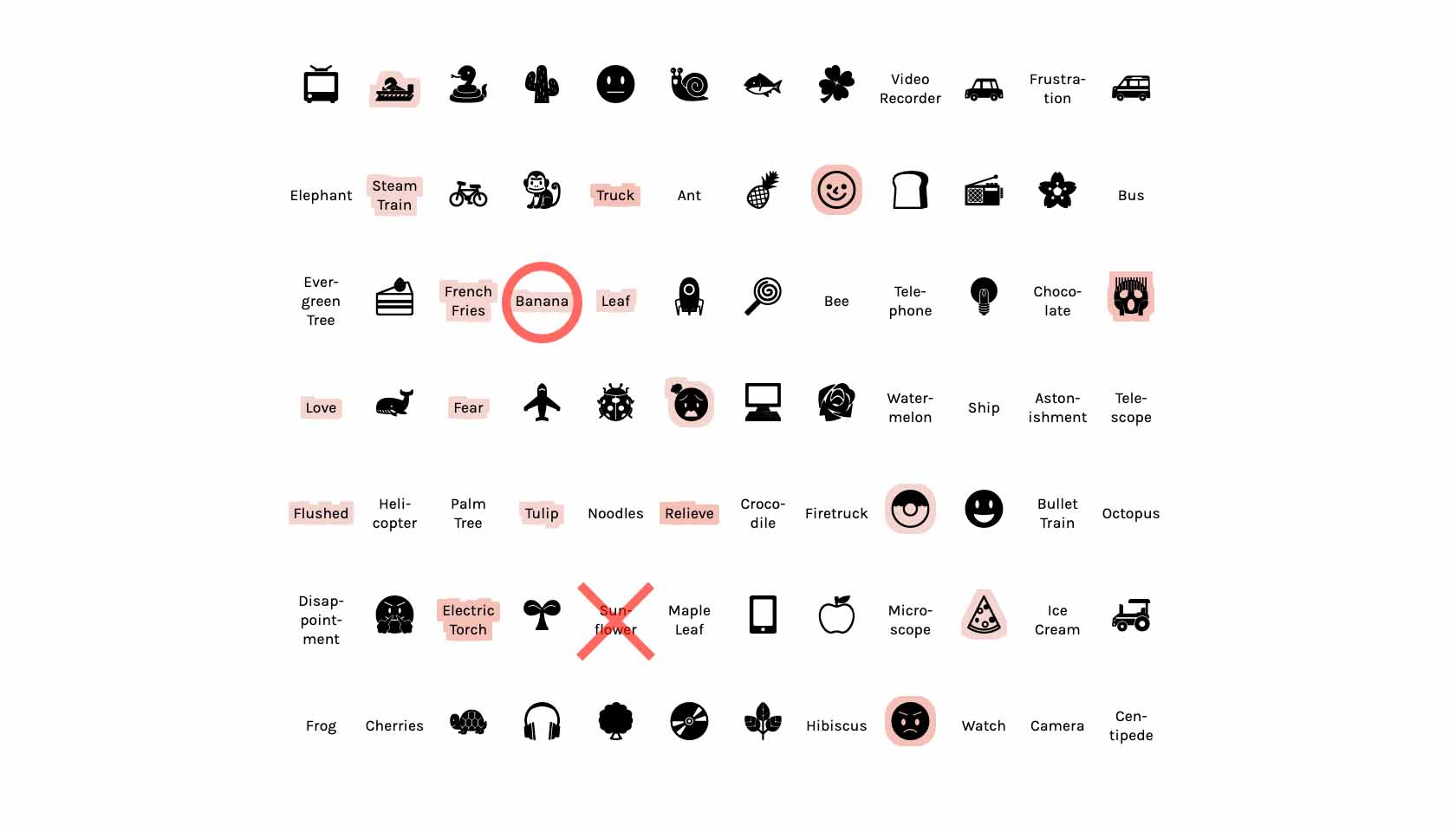

A selection error is highlighted in Memory Menu, for picking 'Sunflower' instead of the required selection, 'Banana'. Image is author's own.

Accuracy of recollection of the most picked category for baseline versus use-wear menus. Correct selections are compared against selections that are one off, two off, three off and so on from being correct.

Accuracy of recollection of the least picked category for baseline versus use-wear menus. Correct selections are compared against selections that are one off, two off, three off and so on from being correct.

Difficulty reported by participants for 1st menu baseline (grey) with 2nd menu use-wear versus 1st menu use-wear (red) with 2nd menu baseline.

Difficulty reported by participants for 2nd menu baseline (grey) with 1st menu use-wear versus 2nd menu use-wear (red) with 1st menu baseline.

Likert scale responses for effectiveness of the use-wear effect.

68 optional written responses for effectiveness of the use-wear effect coded into three categories.

Likert scale responses for desirability of the use-wear effect.

53 optional written responses for desirability of the use-wear effect coded into four categories.

A mock-up of the cardboard poster. Image is author's own.

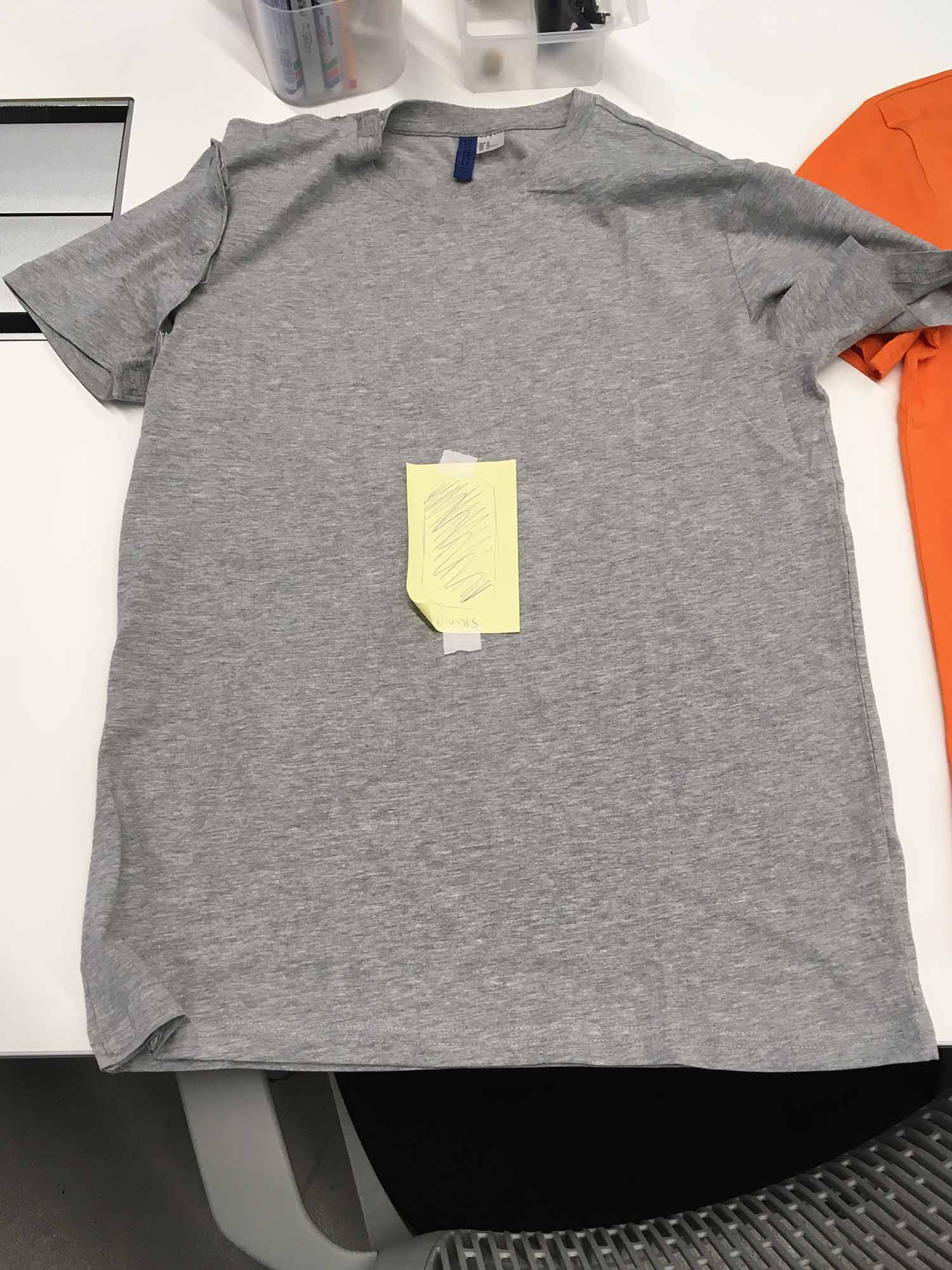

Paper mock-ups of Computational Costume v1. Images are author's own.

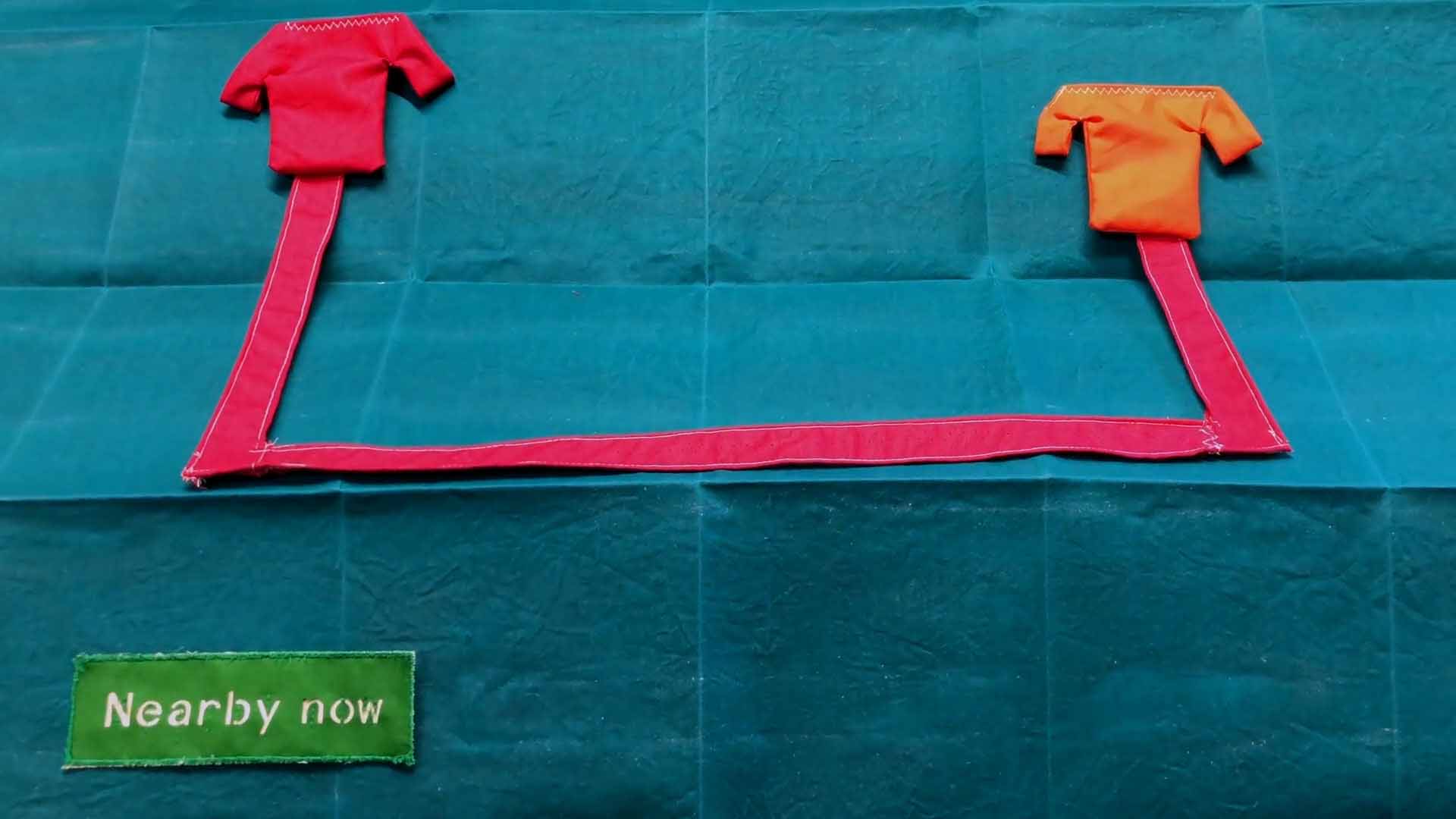

Fabric patterns for a shirt and pants. Torso (left), shirt arm (middle) and pants (right). Image is author's own.

Pants and top made from fabric patterns for laser cutting. Images are author's own.

Floating cardboard signage as imagined esemplastic objects, for the exhibition of Computational Costume v2. Image is author's own.

Hand-cut (above) versus laser-cut (below) fabric objects for Computational Costume v1. Images are author's own.

Manual and automated embroidery used for Computational Costume v2. Image is author's own.

Computational Costume design iterations. Images are author's own.

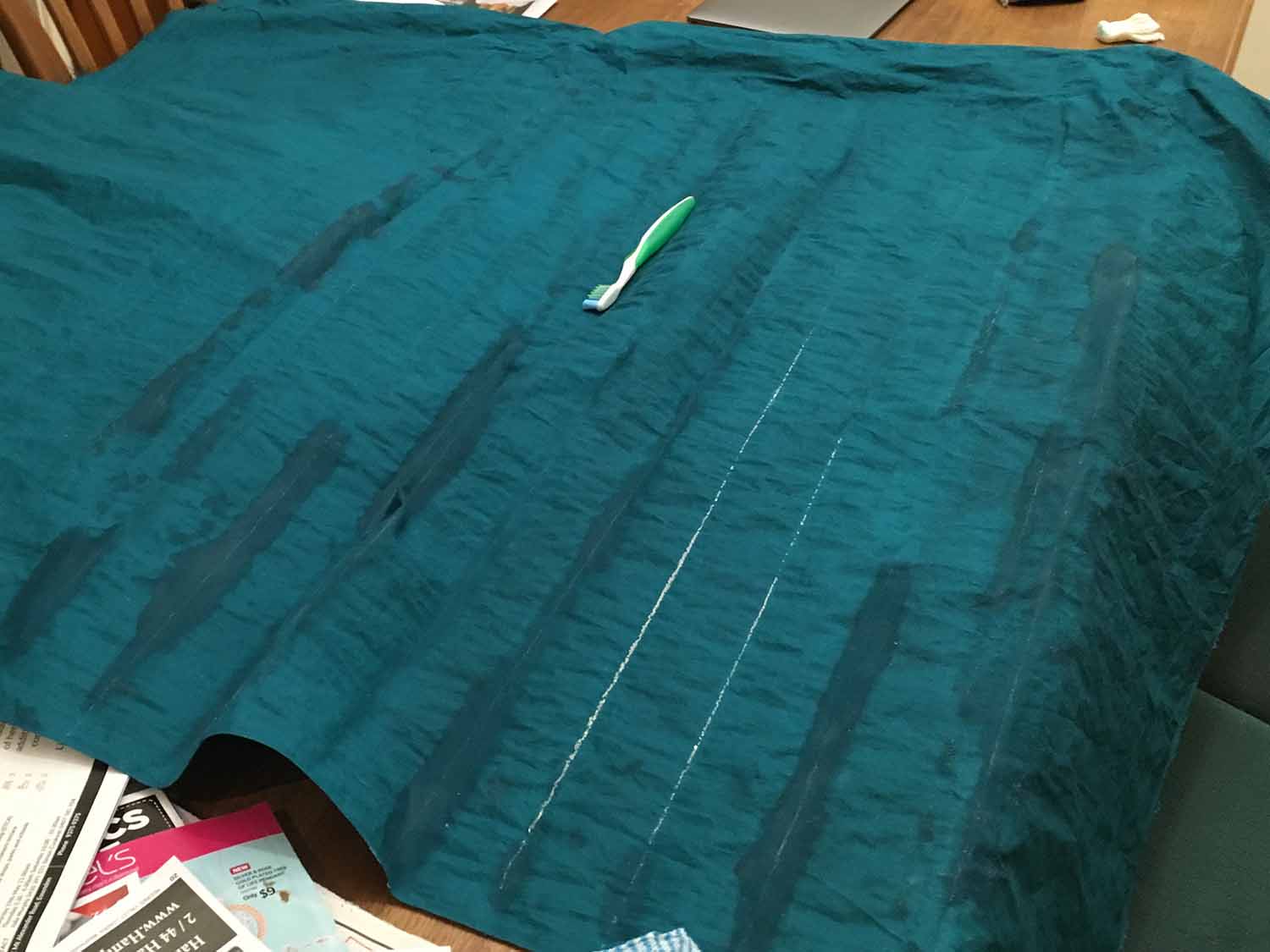

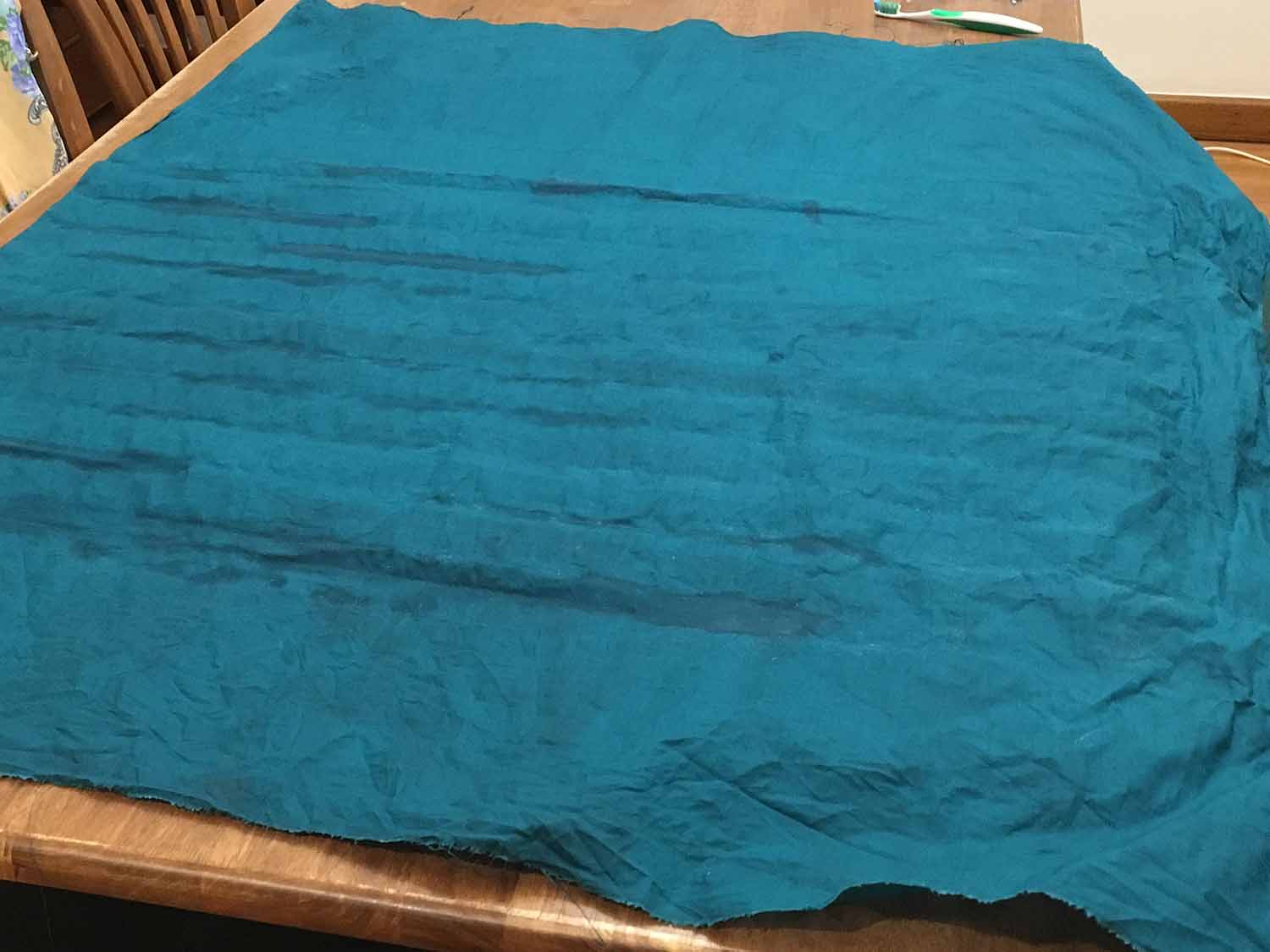

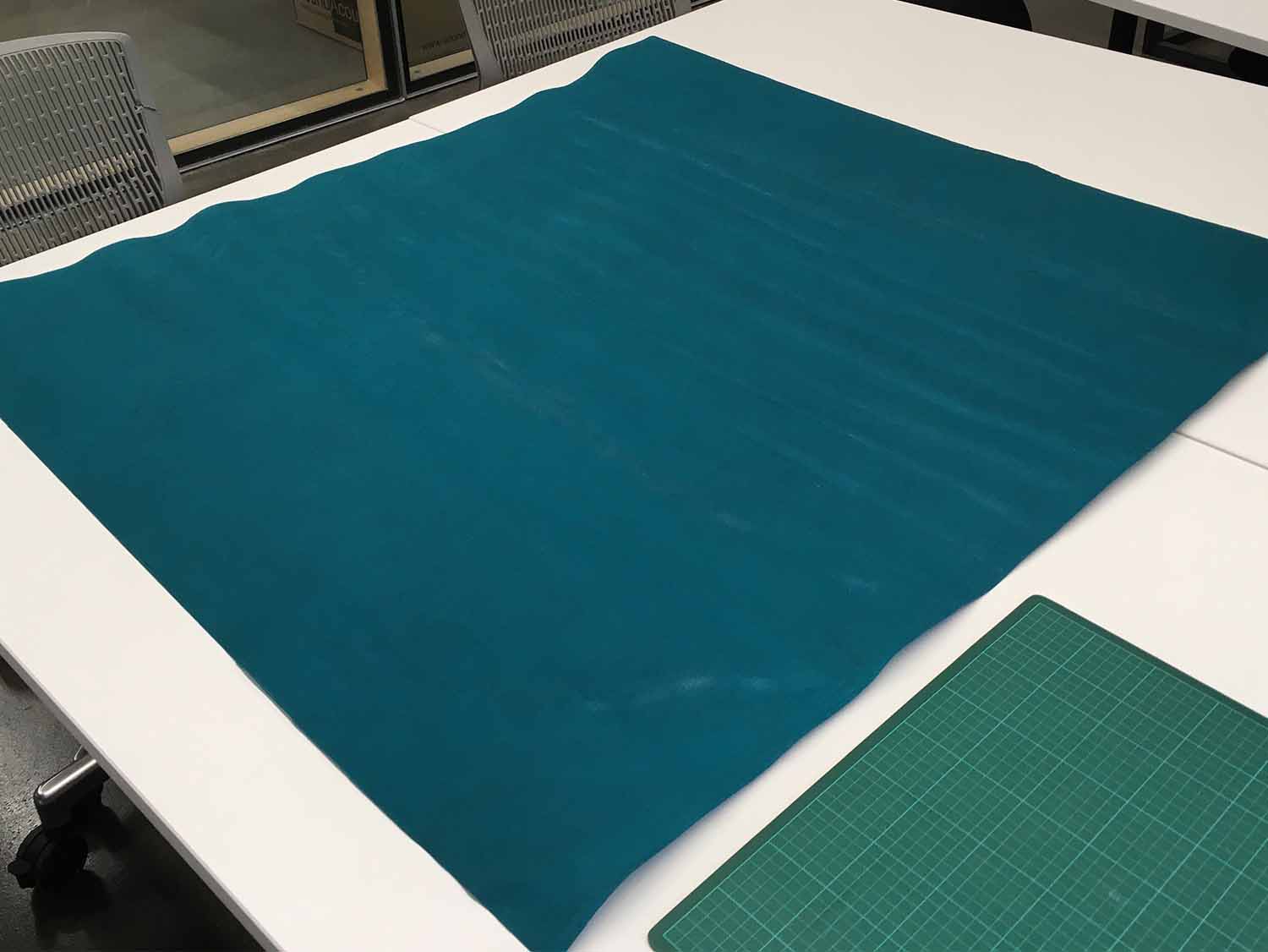

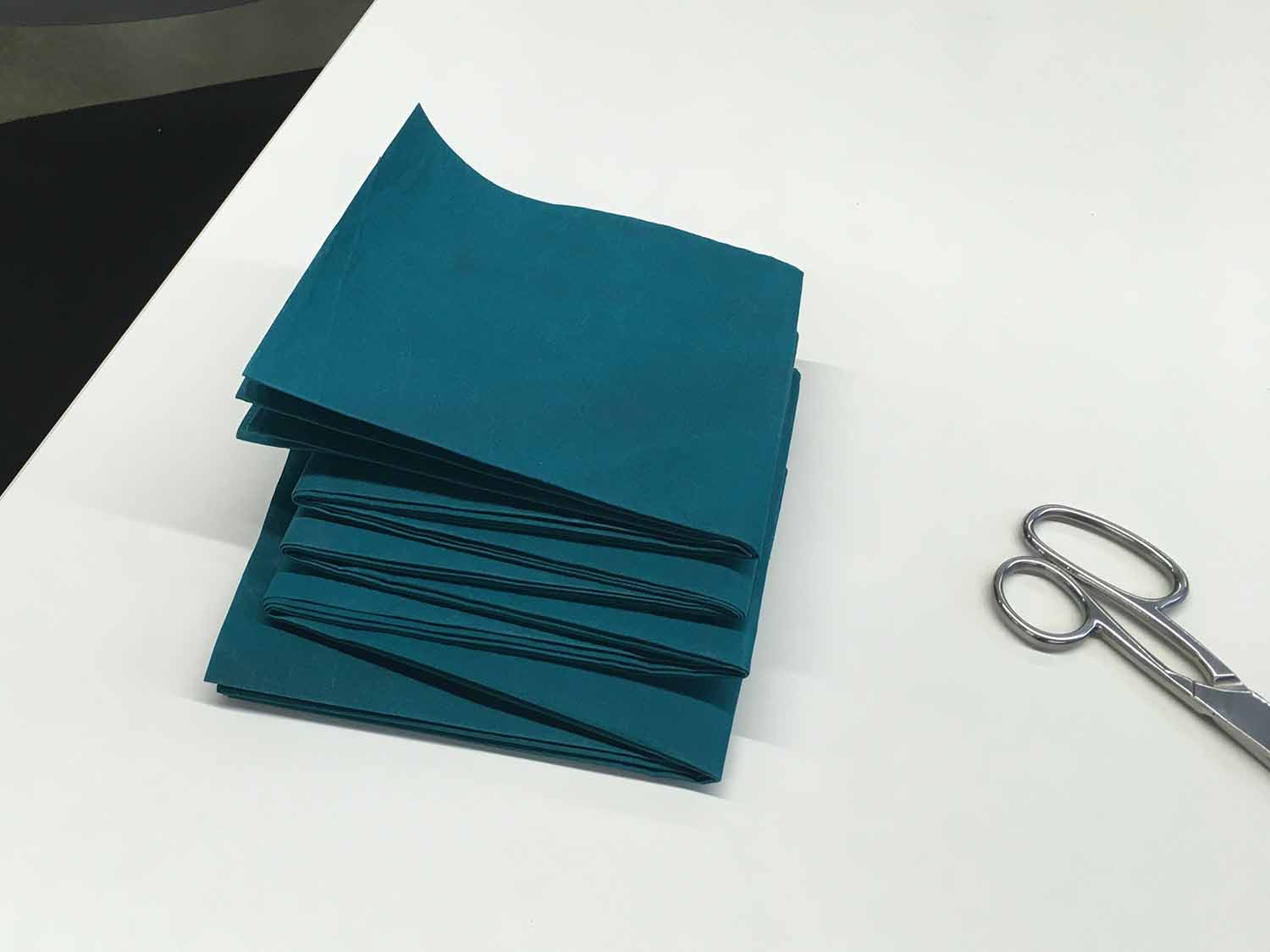

Stiffening cotton broadcloth for folding, from left to right: soaking in 1:1 PVA glue and water solution; air drying; cleaning glue residue; cleaned sheet; ironed sheet; and Miura folded sheet for Computational Costume v2. First image photography by Tonella Scalise, all other images are author's own.

A re-purposed jar used as a prop in the Computational Costume v2 video. Image is author's own.

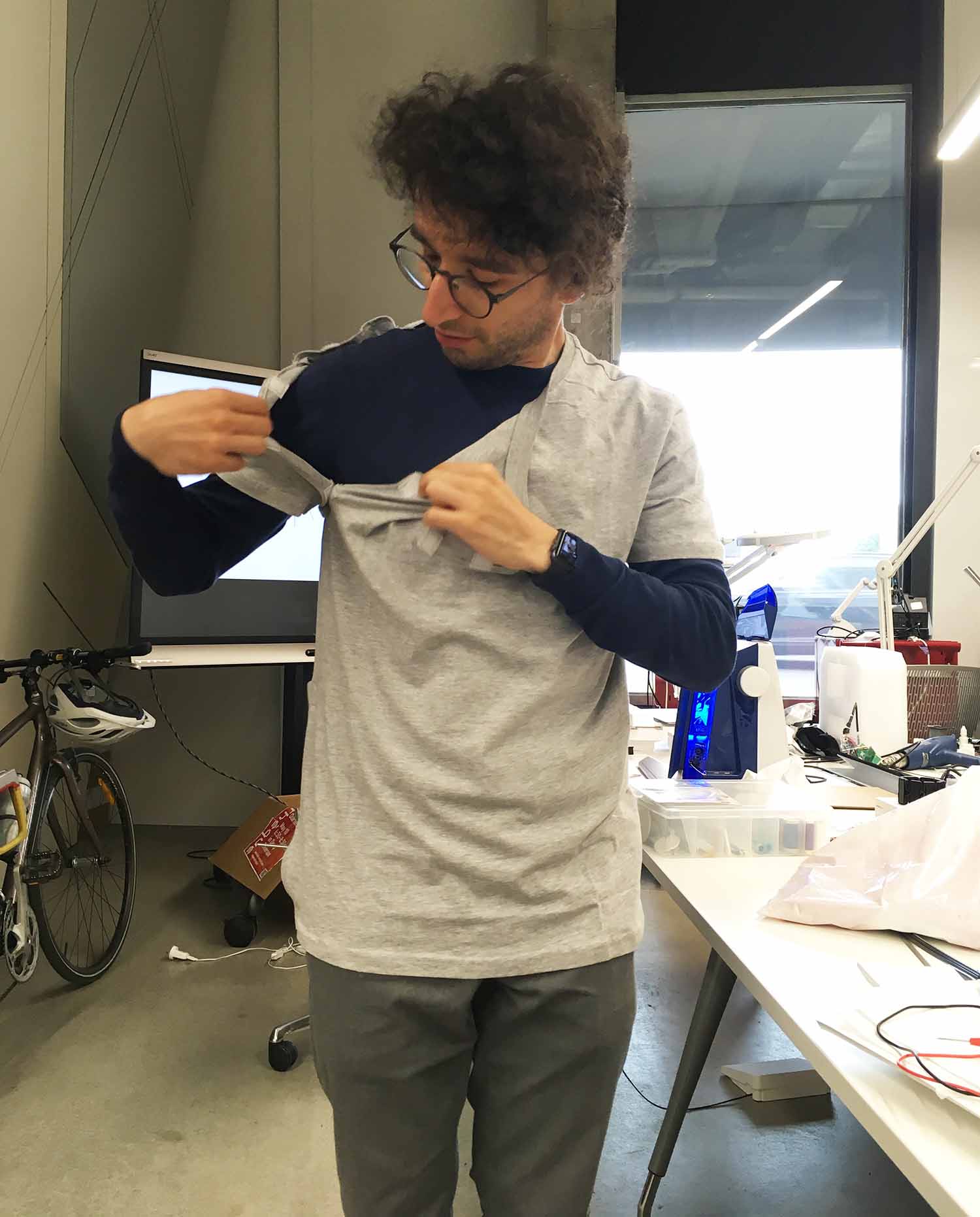

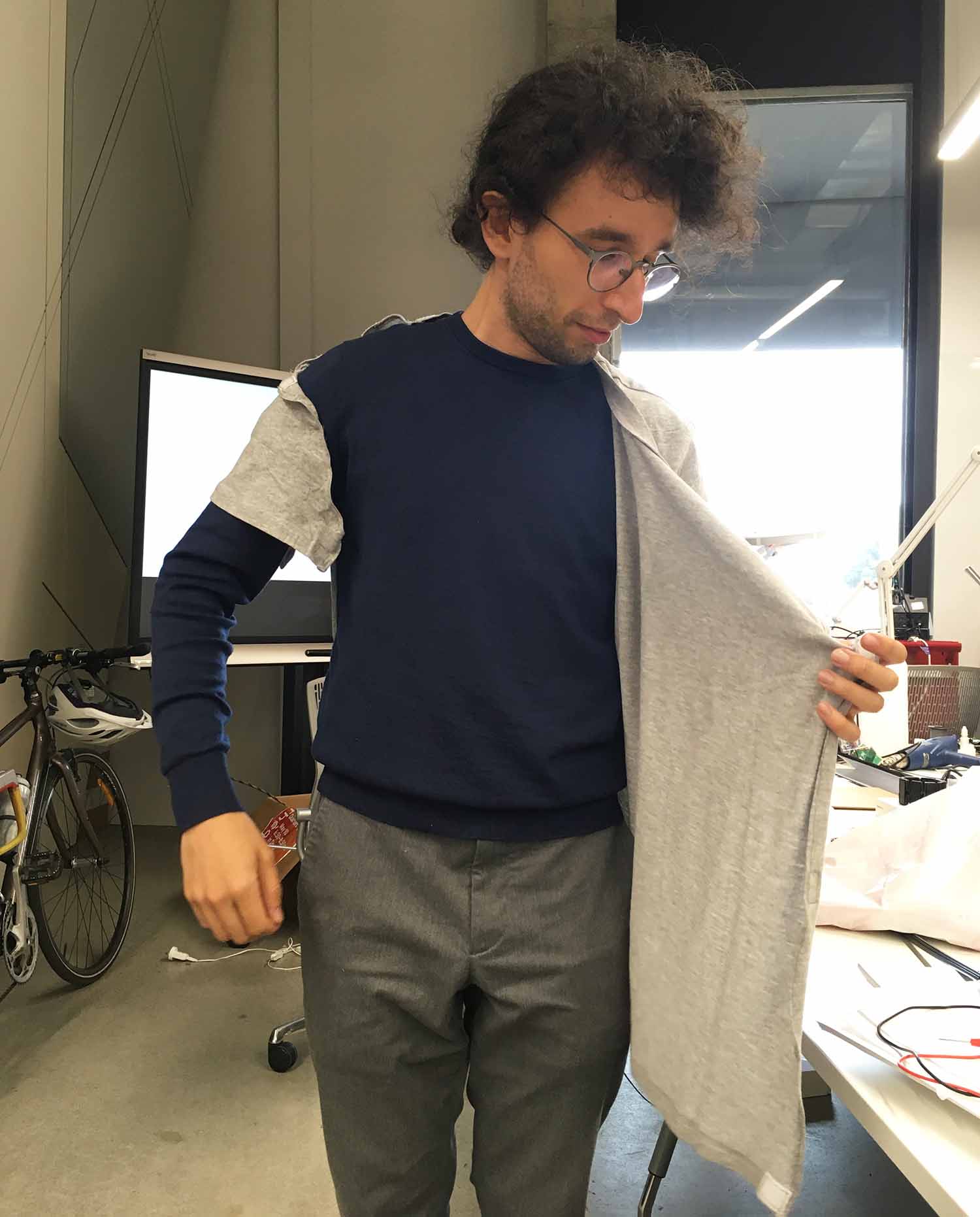

A ready-made T-shirt adapted for quick release with hook-and-loop fasteners for Computational Costume v1. Images are author's own.

Coveralls with loop fastener strips sewn on (left) for attaching objects with hook fasteners sewn on (middle and right) for Computational Costume v2. Images are author's own.

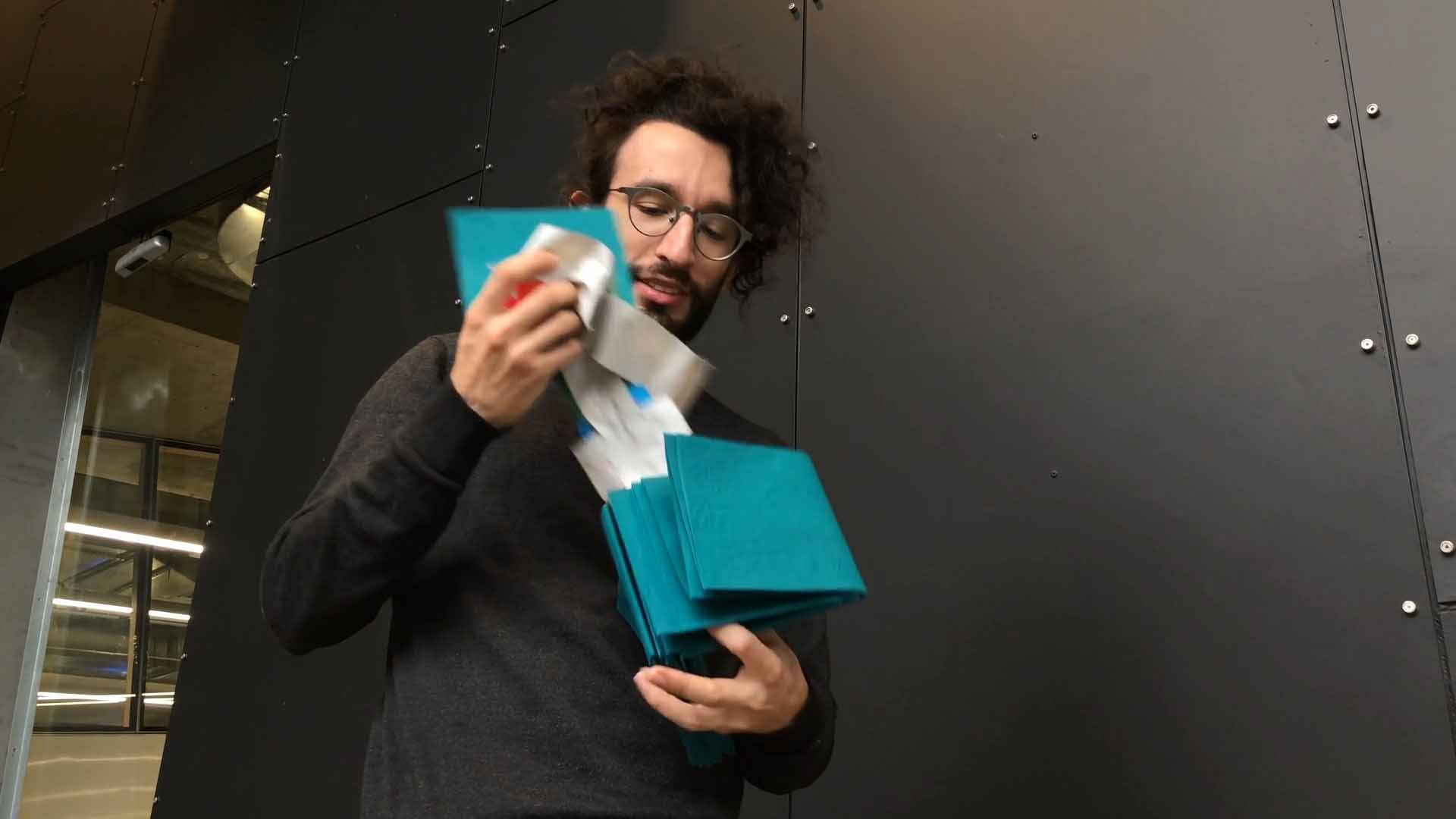

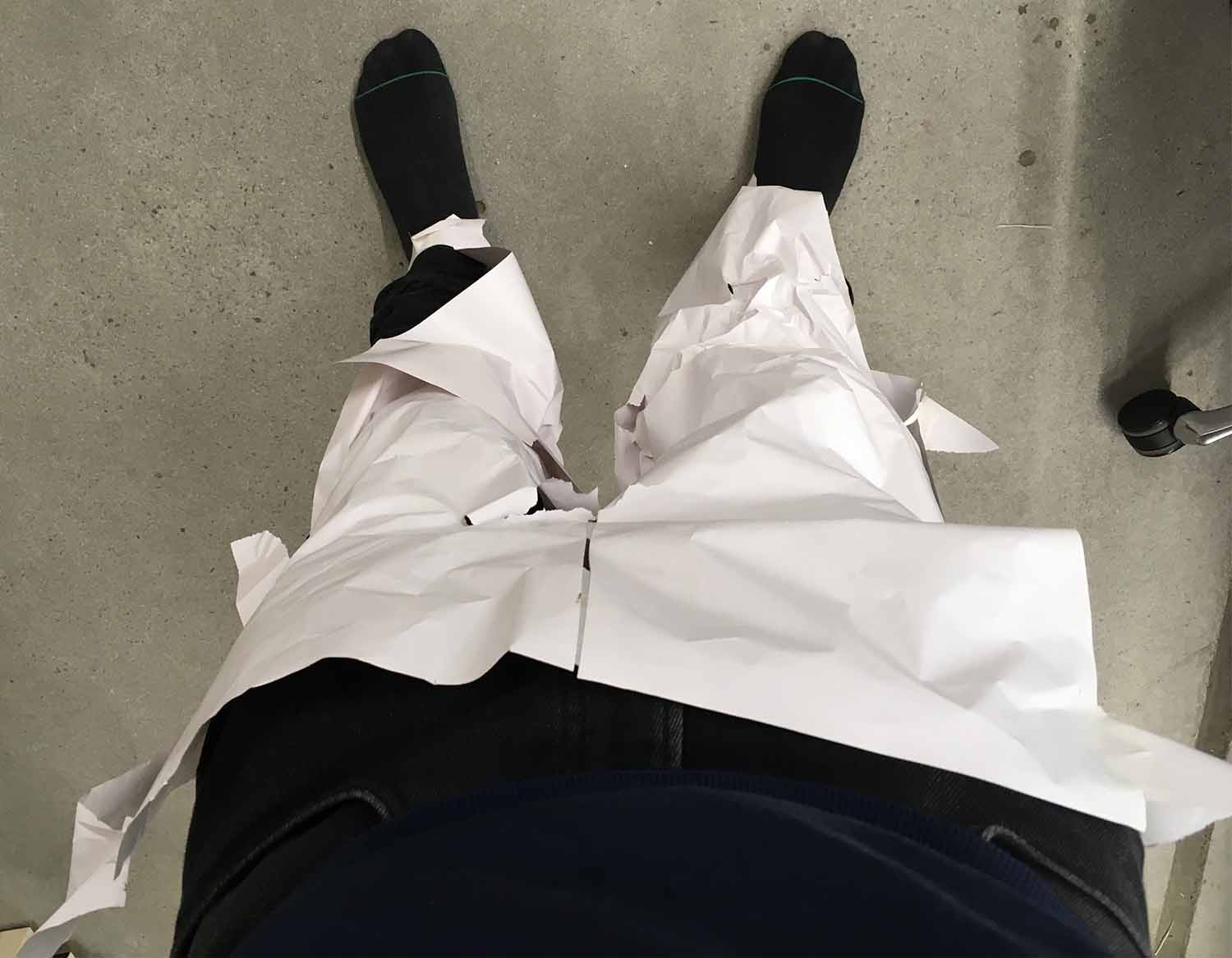

Attempting to wear a paper costume. First image photography by Toby Gifford, second image is author's own.

Metal snap fasteners used in Computational Costume v2. Image is author's own.

A discreetly placed pin allows a marker to be attached to a token in the Computational Costume v2 video. Images are author's own.

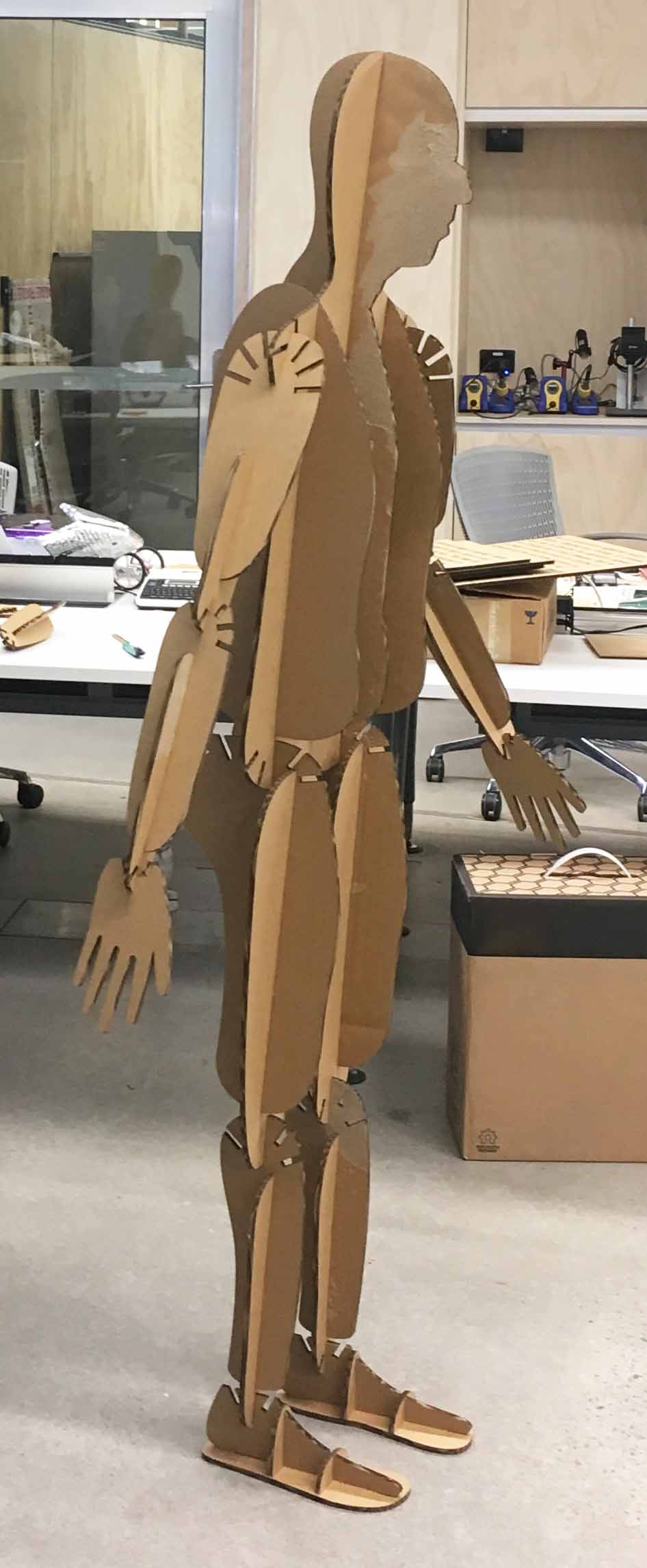

The original Computational Costume mannequin (left), the mannequin toppling over with added leg supports (middle) and the updated mannequin configuration with strengthened interlocks and a single vertical support to replace the legs (right). Images are author's own.

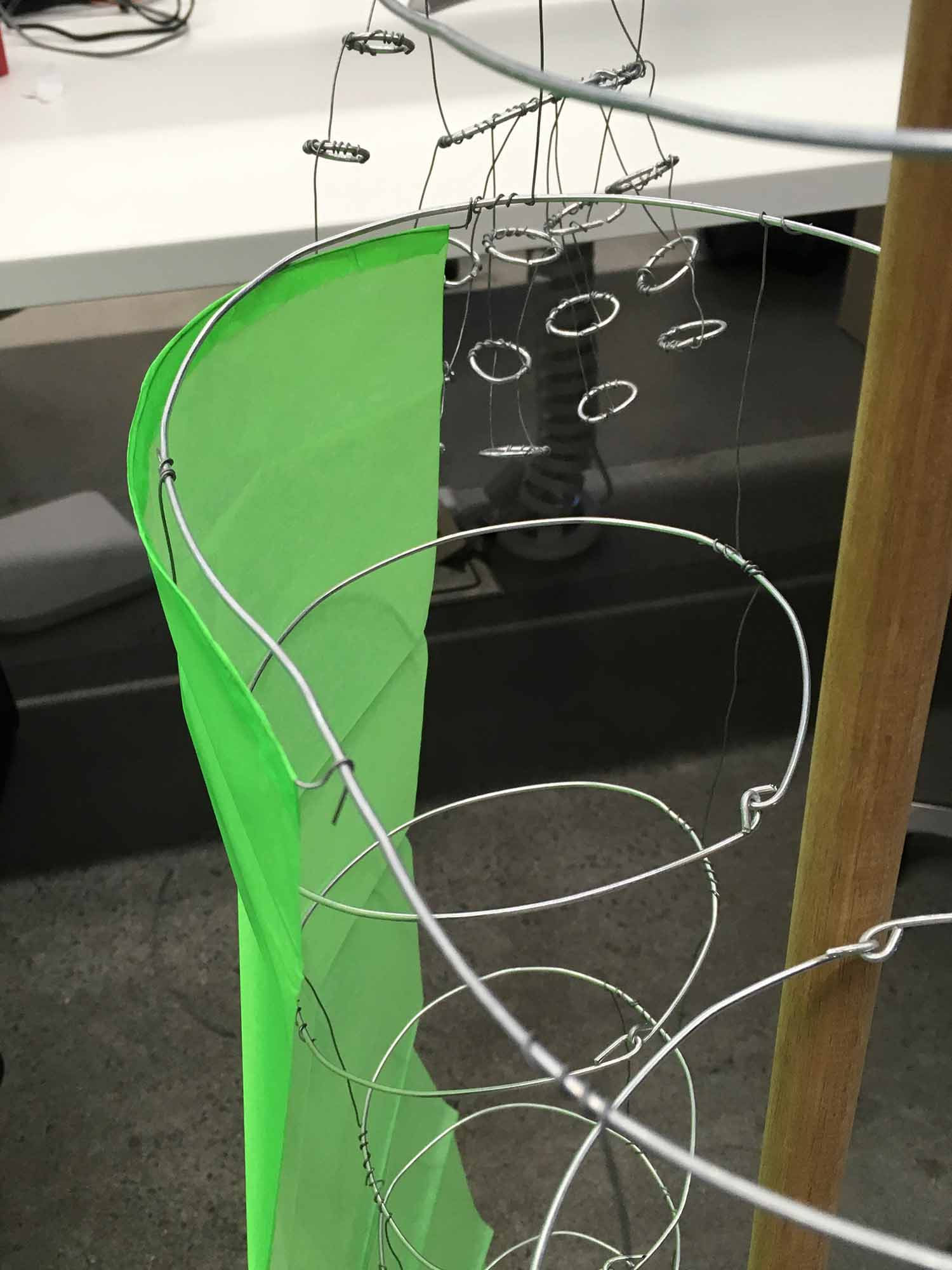

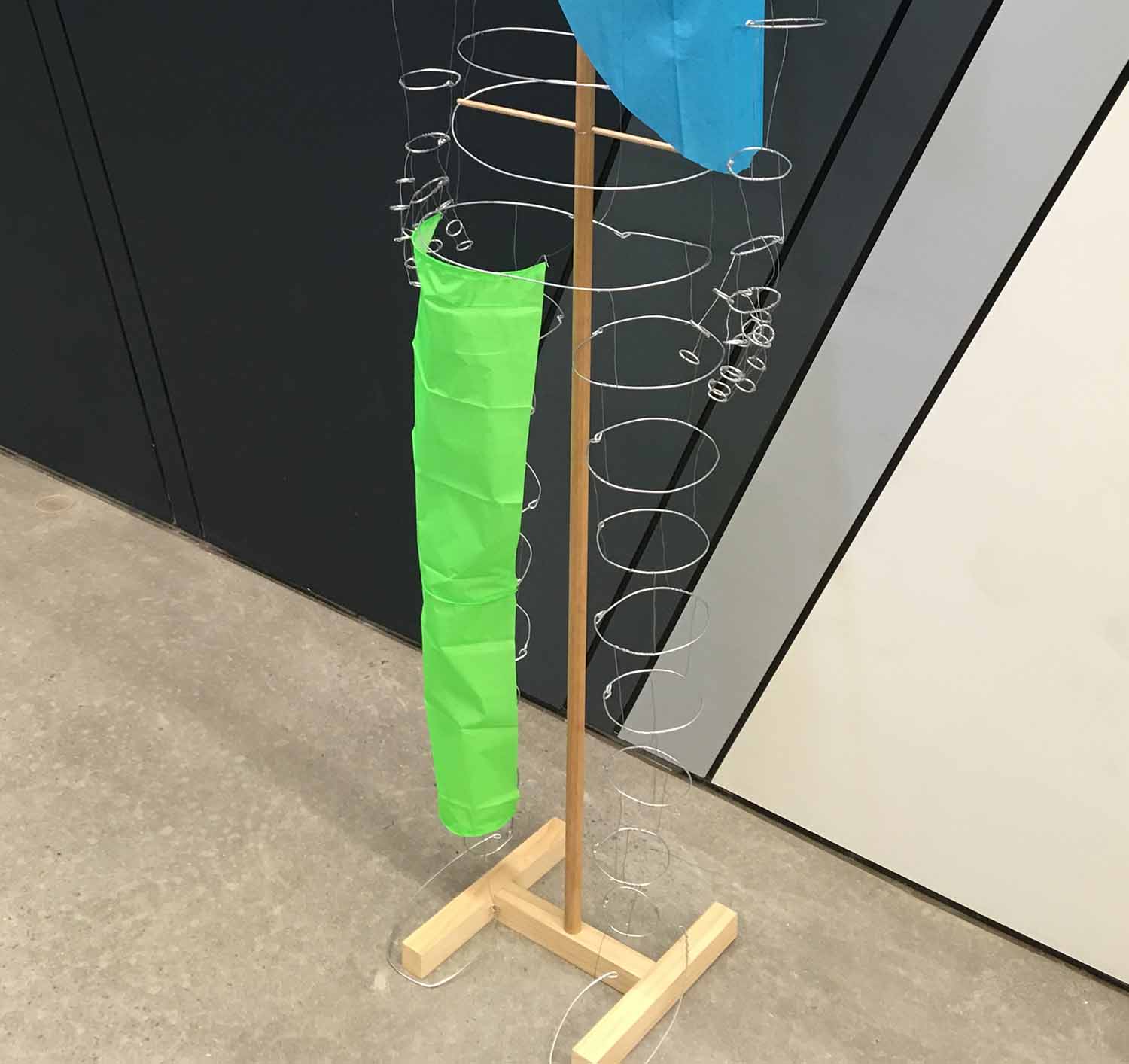

Detail of a modular panel affixed on a steel wire mannequin created for Computational Costume v0. Image is author's own.

Timber mast structure used for Computational Costume v0. Image is author's own.

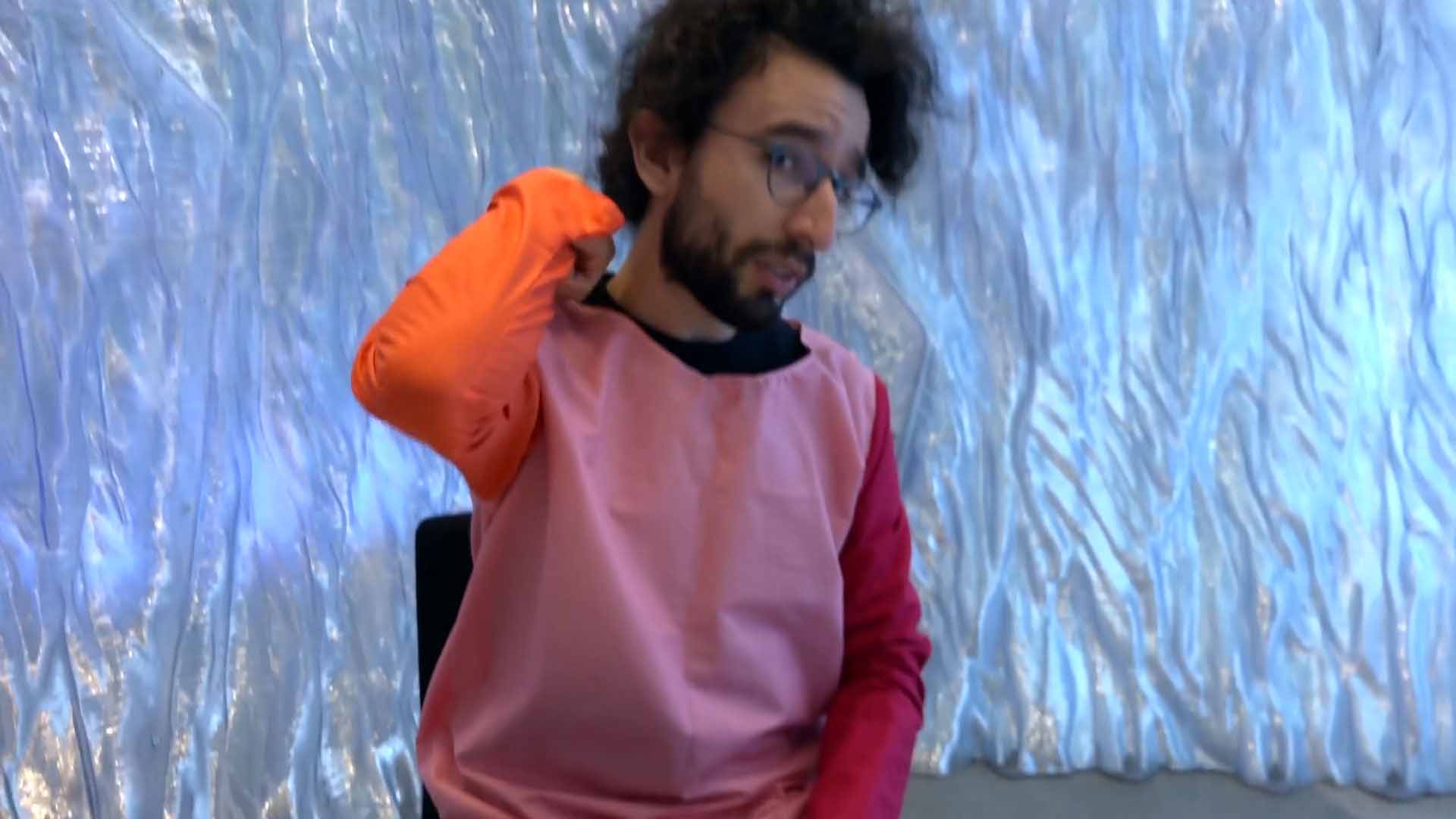

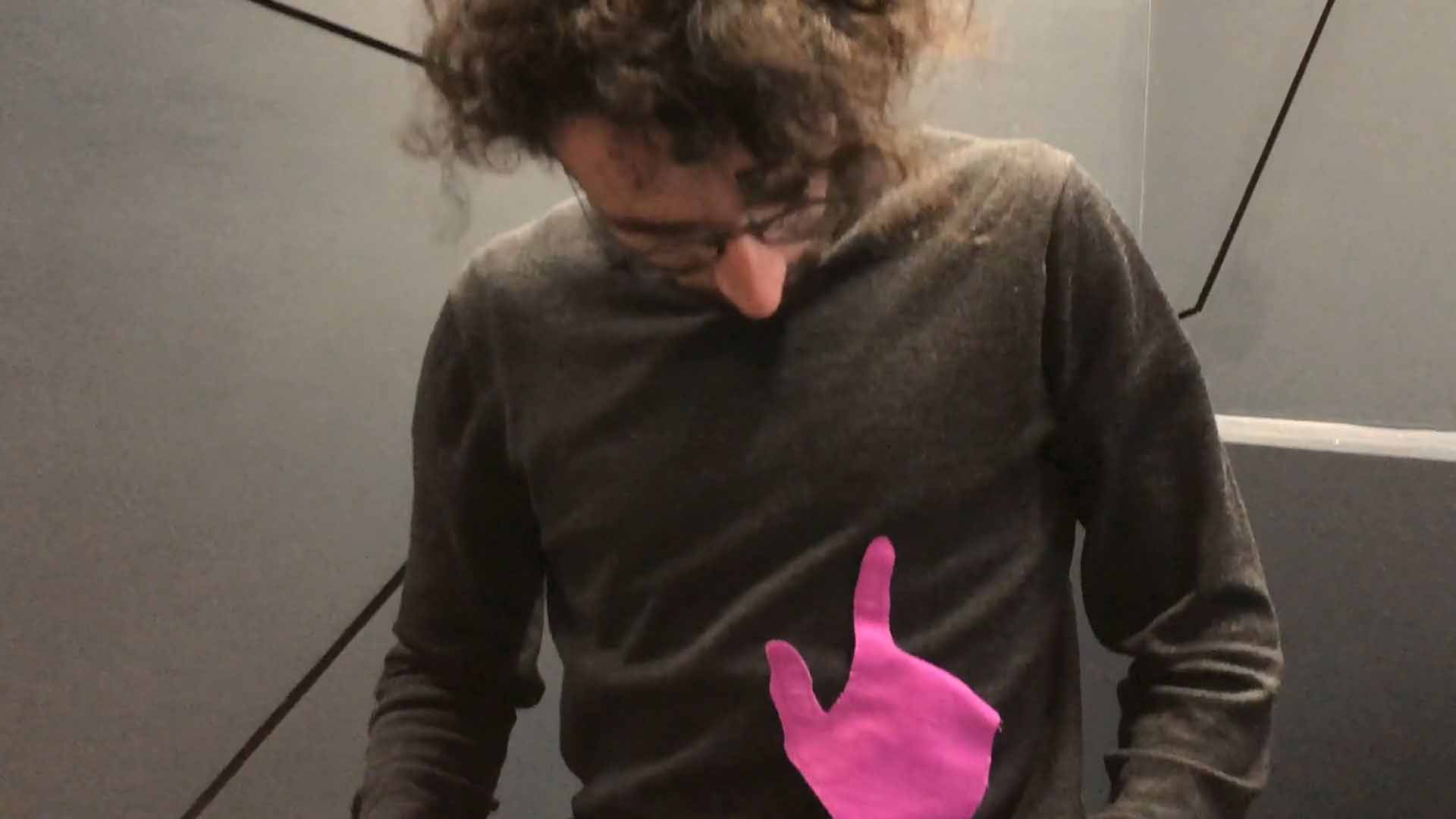

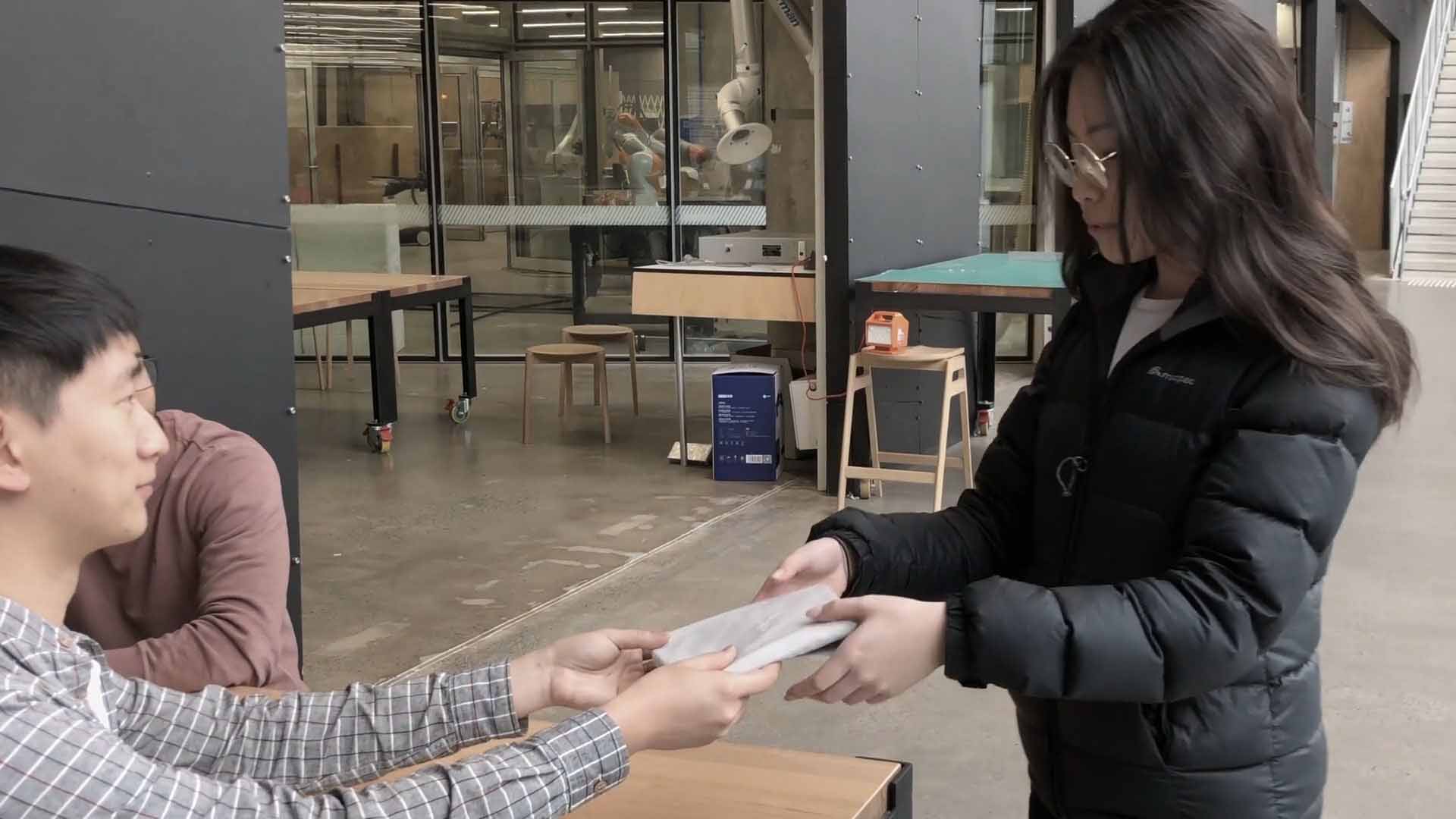

A suit for Computational Costume v2 intended for live performance is shown to audiences directly. Image courtesy of SensiLab, Monash University.

List of tables

An overview of where the four research investigations are located within this exegesis, with section links.

Interviewee specialisations and responses, coded into context, empathy, memory and method/process. Responses with reference to supporting people's memory and cognitive load have been highlighted in bold.

Interviewees' consistent design practice approaches, coded into context, empathy, memory and method/process.

Acknowledgements

I have numerous people to thank for sharing their skills and support throughout my PhD journey.

Tim Dwyer, Jon McCormack and Vince Dziekan: for supervising my research with great thoughtfulness, a bit of humour in most meetings, and so much of your time. Collectively, you all helped shape the direction of my PhD from day one, up until the end. You gave me the freedom to pursue my visual design practice in a technical field. You all encouraged me to push into the unknown. Because of this, I was able to come out the other end with valuable lessons, and exciting results, that I could never have anticipated.

Julie Holden: for your great work in editing my writing, and teaching me how to elucidate ideas and outcomes, with greater care and intent. It was a real pleasure to go through different writing options and learn how they work. It was like learning to design again, but through text. I treasure the writing skills I have learned with you, because they have been so valuable—in both reflecting on and articulating my own logic, and communicating my work.

Lizzie Crouch: for your encouragement and valuable advice to share my work with the world. This has put my research in the places and minds of people I would otherwise have not reached.

Elliott, Pat, Dilpreet, Lizzie, Sojung, Yalong, Yingchen, Matt, Leona, Lora, Su-Yiin, Mike, Toby, Shelly and Nina: you have been the most wonderful friends. Your companionship and conversations were more than enough, yet a few of you also offered bags of organic fruit or vegetables, shared homemade cakes, drinks, sourdough bread starter and lovely gifts, and gave me a hand to install and take down my work.

Simone: sometimes you matched the feedback my supervisors gave me. You even came to my mid-candidature talk of your own accord. You also had the clout to crush my doubts when I thought settling on my costume idea was silly. Your generosity was tremendous.

Nick and Jesse: it did not matter how many times I said I needed to write, you kept trying to distract me. I love you both. You have been the best friends I could ask for—you have given me perspective on all manner of things, many a time. You both made the hard times in life less so, through your kindness.

My mother and father: you have been my greatest supporters for almost 27 and a half years. You have provided for me what you did not have access to. In addition, your great qualities have been infectious: Ma, your abundance of care for those around you; and Pa, your concern for justice and culture—and the fortitude you both possess. For this, I am so fortunate.

Also, there are a number of people to thank for their direct support of my research. In particular:

- Jon McCormack, Elliott Wilson and Lizzie Crouch: for providing and running SensiLab—the wonderful and near-limitless multidisciplinary place which has nurtured both me and my work

- Allison Mitchell: for her diligent support behind the scenes to ensure that the supervisory team and I did what we needed to do, in addition to facilitating all of my reviews and my final exhibition

- The PhD milestone review panels: chaired by Michael Morgan and, over the years, attended by Michael Wybrow, Mark Guglielmetti, Gene Bawden and Indae Hwang; their fresh input has been invaluable for reflecting on my research and determining the best direction forward

- Allison Mitchell, Ammie Julai and Elena Galimberti: for facilitating my final exhibition with such care—as the first practice-based research exhibition for the faculty

- Illustrator Janelle Barone: for bringing my vision of Computational Costume to life with a wonderful illustration

- Professional accredited editor Mary-Jo O’Rourke AE: for providing copyediting and proofreading services according to the national university-endorsed ‘Guidelines for thesis editing’ (Institute of Professional Editors, 2019)

- Andrew Maher and Stewart Bird (while incumbent) and Alexandra Sinickas at Arup, Melbourne: for their encouragement, their conversations and the time they allowed me to engage with their colleagues to inspire my research

- All individuals who participated in my Memory Menu study or interviews, in total 108 people who generously gave their time for my research

Finally, I thank the organisations that have generously funded my research, in particular: the Australian Government for its Research Training Program (RTP) scholarship; Monash University for its resources, as well as travel and equipment funding; and Arup's Melbourne office for its additional investment. These investments facilitated the cultivation of the original ideas presented through my research.

Introduction

When people use mainstream digital devices such as a smartphone, television or computer, there is a divide between the reality presented through the device and their surrounding physical reality. This divide matters because physical reality gives purpose and meaning to the applications on a digital device. This notion of a divide draws upon the perspective of embodied interaction. Embodied interaction defines a connection between people's lived experiences and their experiences through digital devices.

Take, for example, two people talking across a long distance through a video call. Through the windows of an on-screen video call, each person has enough information to hear the other and gauge facial expressions, alongside a peek into their surrounding environment.

Valuable information faithfully presented in a physical conversation, such as objects, events and movements in a shared physical space, is lost because of the cropping of the video camera. While this lost information may seem minor, it contributes to the lack of faithfulness of the ambience presented and thereby the interpretation of the session. A compromise is made here on valuable information normally available when communicating, in order to allow today's mainstream digital device to overcome the physical barrier of distance.

Losing information that people are accustomed to is problematic because the surrounding physical reality gives purpose to an activity such as a video call. This problem extends to other actions performed through digital devices, actions that are ultimately grounded in the physical reality outside of the device. Such actions include organising and finding content, and visualising information that references the surrounding world.

In my research, I explore what details can be designed into digital experiences to bring them on par with physical and virtual experiences in the world. Imagine how we as humans live and draw understanding from our experiences in physical reality. People who engage in activities through digital devices experience only a subset of possible experiences in the physical world. I divide these experiences into virtual and physical practices that are engaged across digital devices and in physical reality:

- Virtual practices that encompass people's imagination consisting of perspectives and ideas

- Physical practices that encompass people's spatial understandings and actions

People's virtual and physical practices are endlessly rich and detailed. Yet today's average smartphone or computer uses people's senses in limited ways. Such devices are configured to reduce information of vast scale, such as the totality of one's work or environmental surroundings, into the confined boundaries of a screen through windows, files and maps. These confined boundaries are not representative of the world's complexity as experienced outside of the computer screen.

Returning to the example of the video call, there are several issues with the cropping presented by the video camera and video-calling application on-screen:

- Communication between the callers is restricted to windows that cut out information from their ambient environments. This design removes distractions, yet prevents engagement with anything else that could be significant to a conversation.

- As a substitute for a surrounding physical environment, it is possible to collaborate through sharing files and screens via a video-call application, although these collaborations have limitations. Actions such as drawing are inferred from the movement of a cursor disconnected visually from the interactor performing the action with their body.

- Shared on-screen surfaces for video calling today lack information, as they are either blank or a digital wallpaper with some recognisable application windows and icons generally used by only one person. This acts as a poor substitute for a surface with objects or a space with even more objects and perhaps more than one inhabitant conducting their activities—all contributing meaning to what is visible.

The aforementioned issues in relation to current mainstream digital media affect the ability of today's digital media designers to present information in a way that is adequately grounded in physical reality. These issues have prompted my research to address how future digital media might be designed to adequately ground interactions with respect to people's lived experiences.

In my research, I investigate four areas to explore the limitations of physical and virtual practices with digital media. They take into consideration today's digital technology, approaches across mainstream media, emerging digital media and imagined digital media. The four areas involve:

- Simplifying physical and virtual practices on-screen: what information can be added to simplify interaction on-screen by creating connections to activities in physical reality?

- Reviewing physical and virtual practice support across media: how are practitioners across communication and design disciplines working to support people through different media?

- Reviewing new physical and virtual practices: how is emerging digital media bridging today's physical–digital divide?

- Creating new physical and virtual practices: how might we imagine a way forward based on identifiable gaps in current emerging media to bridge the physical–digital divide?

Background

The purpose of the four investigations ahead is to remediate the limitations imposed by current mainstream digital media using the perspective of embodied interaction. The perspective suggests paying attention to the connection between people's interactions through digital media and their lived experiences of the world. This can be accomplished through a range of design approaches across media and technologies. In order to cover this wide base, as part of my investigations I:

- Reviewed and studied a novel interface design approach through mainstream digital media on-screen

- Interviewed a range of experienced practitioners and researchers about their design approaches and feedback in response to work completed up until this point

- Reviewed relevant emerging digital media designs

- Engaged a speculative design process to imagine improvements to mainstream digital media in a probable future free of today's technological limitations

Table 1.1 provides an overview of where the four research investigations are located within this exegesis.

The investigations have been influenced by the concept of the physical–digital divide [25] proposed by Pelle Ehn and Per Linde, which is based on an embodied interaction perspective. The authors' divide takes a paradoxical look at the virtual and physical, based upon combating demassification. Demassification is the loss of material and social properties as physical artefacts evolve into digital artefacts, as proposed by Brown and Duguid [16]. For example, a physical book presented as an e-book on a digital device loses meaning which might be attached to the wear on a book's cover or its pages, or the positioning of a book on a desk alongside other objects.

Brown and Duguid suggest that demassification is paradoxical because supposed improvements in technology that shed physical mass carry repercussions. There are two problems that contribute to the demassification paradox: digital technology has stripped away social practices which once depended on physical form (physical demassification); and consequently the social practices that relied on congregating around a physical format have disappeared (social demassification) [16, pp.22–25].

Brown and Duguid suggest an awareness of latent border resources [16, pp.6–20] to counter demassification in digital media [16, pp.21–31]. They suggest that digital media is not the sole cause of demassification, but merely a place where demassification can be observed because latent border resources have been overlooked by designers. Designers can correct digital media applications with an awareness of latent border resources. Latent border resources are qualities of an artefact that lie dormant but contain socially shared significance. These dormant qualities lie at the border between direct attention and peripheral attention. As an example, if reading a physical book, we are directly attuned to the pages while peripherally a worn spine may have some personal significance to the reader; however, sitting at the border is the thickness of the pages of an open book clenched with both hands that provides an indirect feeling of progression through the book. These latent border resources are important for designers to recognise, because they can be taken for granted when designing for new media.

In the e-book example, latent border resources have been lost, yet new physical and virtual practices have been allowed which can be iterated on. Now a reader can instantly skip between texts and follow links from one text to another without physically moving. The designer has new latent border resources to discover and provide to the reader, such as simplifying the search of texts by presenting a history of what has been navigated. In this case the new latent border resource provides an advantage over the physical library because using a physical library would require the reader to walk about and carry a stack of books or a reference list to accomplish the same task. This exemplifies how problems from losing mass as the physical becomes virtual can be recovered through careful consideration of the latent border resources available to people.

The example of the e-book gaining new latent border resources despite demassification highlights that physical and virtual practices determine one another or are co-dependent, rather than only working in opposition as suggested by the paradox of demassification. It is therefore important to understand that new latent border resources emerge from new digital media. This is the fundamental reason why the embodied interaction perspective adopted in my research extends from applications of today's technologies to applications of imagined probable technologies.

Simplifying physical and virtual practices on-screen

In the first of the four investigations, I explore how physical and virtual practices can be simplified for on-screen interactions with an awareness of latent border resources. First I acknowledge the variety of interaction design approaches for solving problems on-screen today and develop a novel interface design based on these design approaches.

This section of my research investigates:

- What do a variety of interaction design approaches reveal about solving common on-screen interface design problems? This is explored in On-screen interaction design approaches.

- How can latent border resources be supported on-screen? This is explored in Supporting on-screen spatial memory through use-wear.

Reviewing physical and virtual practice support across media

As discussed, virtual and physical practices are paradoxical and dependent on one another. This dynamic moves forward as new capabilities are added, refined and replaced in media. Adding new capabilities to digital media requires a look beyond people's practices supported by on-screen interfaces.

In the second of the four investigations: Design and communication practices across domains, I interview a variety of design and communication practitioners about my research conducted so far and what consistent and unique approaches they adopt to support people engaging with media. The culmination of these responses provides an indication of best-practice approaches to follow in my research.

This section of my research investigates:

- What do a variety of design and communication practitioners have to say about the design approaches adopted in the research?

- What consistent and unique approaches exist in designers' practices for supporting people's activities across design and communication domains?

Reviewing new physical and virtual practices

In seeking to resolve the physical–digital divide by tackling demassification, Ehn and Linde (2004) turn to the perspective of embodied interaction to employ new kinds of physical and virtual digital media interactions. Embodied interaction assists designers to reflect on what physical and virtual practices are useful to people. Embodied interaction is a perspective in the field of human–computer interaction (HCI) popularised by Paul Dourish in the seminal book Where The Action Is (2001) [22]. The perspective suggests the meaning we derive from the interfaces of digital devices is largely influenced through having a physical manifestation in the world as experienced through our bodies [22, pp.100–103]. Rather than people being seen by digital media designers as machines that respond in a predictable fashion to familiar metaphors and instructions through digital artefacts, meaning obtained through digital artefacts is intertwined with people's unique lived experiences of the world as a whole, through metaphor and concepts [54] or other media [11].

Ehn and Linde (2004) explored embodied interaction through the ATELIER design research project for physical–digital studio environments for design students [25], shown in Figure 1.1. The studio environment allowed people designing an interactive installation to explore the qualities of a physical space through a physical model design, ambient sound and light projections, before designing a 3D model design. The environment enriched people's conceptualisation of design ideas by providing a wider range of necessary virtual and physical practices to work with, rather than limiting practices only to virtual 3D object creation, sketches and mental visualisations.

The work produced by Ehn and Linde (2004) fits into a pattern of designs that can be referred to as the Material Turn [102][83] in HCI. Outcomes of the Material Turn in HCI demonstrate how designers can support people by intertwining digital media interactions into the world, as done with ATELIER [25].

In the third of the four investigations, I focus on Ubiquitous and tangible computing, which has been instrumental in supporting the Material Turn in HCI, with particular attention to Whole-body interaction to coalesce interactions.

Ubiquitous computing, a proposal championed by Mark Weiser in 1991, suggests personal computers are a transitional step towards information technology that will one day be as invisible and ubiquitous as the text on signs and candy wrappers [101]. This direction has encouraged the proliferation of devices we experience today. Tangible computing is a subset of ubiquitous computing and attempts to break the many functions of screen-based interactions into standalone artefacts. As an example, the process of sculpting can be achieved through physical platforms and communicated digitally, such as in Physical Telepresence [63] by Leithinger et al. (2014). We find the emergence of this kind of computing today in the mainstream network-connected devices of the internet of things (IoT) (or enchanted objects [85] as described by David Rose) and dynamic materials of the radical atoms research program [48] led by Hiroshi Ishii. In the mainstream, ubiquitous and tangible computing falls back to screen-based devices. Screen-based devices allow the necessary management and networking of IoT devices.

Whole-body interaction presents an alternative to screen-based devices, because it shows how interactions through digital media can be attached to the body and the world instead. With the promise of augmented reality in the future, whole-body interaction devices could offer the ability to substitute screen-based devices with interaction through bodily and physical surfaces, and in mid-air.

This section of my research investigates:

- What new physical and virtual practices are offered by the Material Turn in HCI through ubiquitous and tangible computing to address people's dependence on screen-based devices?

- How might designers use whole-body interaction as part of the Material Turn in HCI in the future to replace people's dependence on screen-based devices?

Creating new physical and virtual practices

Designers need to be able to work beyond the constraints of today's technology to fill the gaps between the promise of outcomes of the Material Turn in HCI and their application in the mainstream. Speculative design is a process which allows designers to work beyond technological constraints to propose alternatives. Anthony Dunne and Fiona Raby in their book Speculative Everything [23] discuss the role of speculative design proposals in opening new perspectives to societal challenges. They argue that such designs add onto the public's vision of reality, challenge it and provide alternatives to it [23, p.189].

In the last of the four investigations: Computational Costume design, speculative design is used as a tool to address identified gaps without subscribing to today's technological constraints, for instance, the requirement for screen-based devices to manage computer networking. Through this investigation I seek to stimulate discussion around what would be both probable and desirable through whole-body interaction supported by augmented reality. Speculative design offers the ability to comment on how the technology has been developed so far and where it can be taken purely for the benefit of people.

A language to communicate and reflect upon speculative designs of whole-body interaction supported by augmented reality is explored in Computational Costume prototyping and presentation. Accessible materials and techniques are engaged to create the necessary illusion to support speculative designs. This is needed because the technology to realise the speculative designs is not yet available. It is not the responsibility of interaction designers to create technologies. It is more valuable for this designer to put forward the design ideas for evaluation and development. This position draws upon the success of interaction designers using paper and cardboard prototyping techniques [24].

This section of my research investigates:

- What technology and functionality can be expected in a speculative design for whole-body interaction supported by augmented reality for new physical and virtual practices?

- How can designers economically prototype and present speculative designs for whole-body interaction supported by augmented reality for new physical and virtual practices?

Supporting practices with screen-based digital devices

Engagement with digital devices today predominantly involves on-screen interaction. Screen-based devices provide the main means of creating, communicating and looking at digital content. For this reason, designing for screen-based devices is the starting point of my research to understand how people's practices can be better supported through digital devices.

In my research I build upon how people's activities could be supported on mainstream screen-based devices in On-screen interaction design approaches and Supporting on-screen spatial memory through use-wear. I also pay attention to the wide range of design and communication practices through interviews as a counterpoint to my research, in Design and communication practices across domains.

On-screen interaction design approaches

Unravelling interaction design approaches in the real world encouraged the beginning of my practical design research. I worked with engineers at a consulting engineering firm to determine where they could improve their digital media designs. These engineers made a variety of on-screen tools and visualisations to assist their own work and to communicate information with clients. My task was to use the issues I discovered in their design work to come up with generalisable design solutions.

What became visible through talking with a variety of engineers about their designs was both the domain specificity of the content and the use of off-the-shelf interface and visualisation frameworks. The difficulties of this situation were: the content could be quite complex; and visual or interaction design skills were not being engaged to make experiences more palatable.

I found several examples that illustrate this in geographic information system (GIS) visualisations and interfaces. Anecdotally, these systems were more favourable than their predecessors: large printed volumes and maps. However, it was common to see simple design problems—like the one shown in Figure 2.1—which could be easily solved, such as: layout issues where information could be divided into sections to make it easier to navigate; and adding iconography to make common features stand out.

These issues were made clear to the designers of the software, but it was not possible to fix them immediately. The presence of the issues was a symptom of how resources were allocated. There was no expectation to have well-versed designers on board full-time. Instead, alongside engineering work, engineers also designed their own on-screen tools when needed. Creating tools like the one shown in Figure 2.1 followed the path of least resistance: it gave the engineers control over the presentation of content that was deeply integrated into their work. This design practice avoided having to adopt ill-fitting tools or to outsource work.

While experiencing this inertia, I reflected upon the different design practices applicable to the situation at hand. In order to contribute, I reflected on four relevant kinds of design practitioners:

- Visual designers, who are adept at applying tacit knowledge of the elements and principles of visual design to interaction design problems. This professional practice is well documented in Designing Visual Interfaces [77] by Mullet and Sano (1995).

- Interaction designers, who work similarly to visual designers, with the ability to recognise interaction design principles and perform user evaluations to validate the effectiveness of designs. This professional practice is well documented in About Face: the Essentials of Interaction Design [19] by Cooper et al. (2014).

- Unspecialised visual/interaction designers, who apply established visual layouts and interfaces to projects without formal training in order to save expending resources to engage professional designers. This approach comes with mixed results, as shown in Figure 2.1 and experienced throughout the design work I observed.

- HCI researchers, who carry the work of visual and interaction designers forward into novel design spaces with rigorous evaluations to determine the validity of designs. An example of this kind of approach can be found in ISOTYPE Visualization – Working Memory, Performance, and Engagement with Pictographs [41] by Haroz et al. (2015), where the researchers formally evaluate the effectiveness of ISOTYPE (International System of Typographic Picture Education) pictorial symbols applied to information visualisations.

Supporting on-screen spatial memory through use-wear

Supporting spatial memory on-screen through use-wear is analogous to using bookmarks in physical books. Use-wear (also known as computational wear, read wear, visit wear or patina), see Figure 2.2, provides a visual signal over parts of the interface which have been interacted with, along with an indication of how frequently those parts have been used [43][92][47][1][66]. People can use this information to pick up where they left off when coming back to an interface, or when exploring a new interface to quickly identify familiar and unfamiliar areas. It is also a signal that can be read by others, because the progress made through the interface, like a bookmark in a book, is openly visible. These kinds of signals present useful latent border resources, as discussed in the Background. Supporting spatial memory is one direct way of supporting latent border resources.
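The idea of a use-wear signal can be sketched in a few lines of code. The following is a minimal illustration only, not the implementation used in the Memory Menu study: the class name `UseWearTracker`, the `max_opacity` parameter and the linear scaling of highlight opacity against the most-used item are all assumptions made for the sake of the example.

```python
from collections import Counter


class UseWearTracker:
    """Hypothetical helper: counts interactions per interface item and
    maps each count to a highlight opacity (the visual 'wear')."""

    def __init__(self, max_opacity=0.5):
        # Assumed cap on opacity so the wear effect stays subtle.
        self.max_opacity = max_opacity
        self.counts = Counter()

    def record(self, item):
        """Register one interaction with an interface item."""
        self.counts[item] += 1

    def opacity(self, item):
        """Return the wear opacity for an item, scaled linearly against
        the most frequently used item (an assumed scaling rule)."""
        if not self.counts:
            return 0.0
        most_used = max(self.counts.values())
        return self.max_opacity * self.counts[item] / most_used


if __name__ == "__main__":
    tracker = UseWearTracker()
    for selected in ["Open", "Open", "Save"]:
        tracker.record(selected)
    for name in ["Open", "Save", "Print"]:
        print(name, tracker.opacity(name))
```

In this sketch, items that have never been selected receive no highlight, so familiar and unfamiliar areas of an interface can be told apart at a glance, while more frequently used items appear more worn.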

Supporting spatial memory on-screen is a well-explored area [88]. Use-wear fits within a variety of novel and established strategies to support the location of objects on-screen. These strategies include, but are not limited to: laying out information as maps; traces and scents; obscuring information; and mnemonics. I explain each in detail below to provide a context for adopting use-wear.

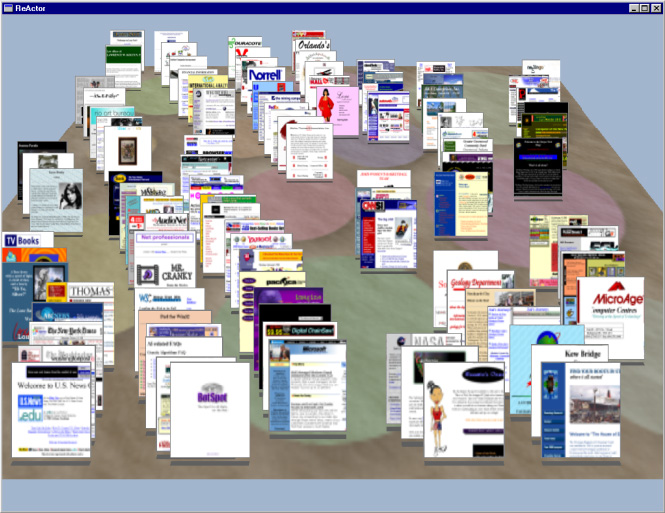

- Maps provide more effective representations of information than lists or ribbon command interfaces [89], especially when revisited [40]. Data Mountain [82] by Robertson et al. (1998), shown in Figure 2.3, advantageously replaces web browser bookmarks in a list with user-arrangeable stacks of thumbnails on an inclined plane.

- Traces and scents, like use-wear, involve leaving behind useful information, such as showing a trail of pages which have been navigated in the form of breadcrumb navigation, or providing a hint about information behind a hyperlink in the form of compact summaries or scents, such as scented widgets [103]. Animations also leave behind useful information by signifying different actions associated with on-screen windows through mnemonic rendering [10] and with graphics and input areas through afterglow effects [7] or by revealing the most popular choices in a menu ahead of other items through gradual onset [29].

- Obscuring information induces people to learn where information is. Such an example is a frost-brushing interface [18], where people are forced to recall spatial information by brushing away a frost effect from the interface to reveal the information. The effect supports spatial learning [18]. The frost effect performs the opposite of a use-wear effect.

- Mnemonics require people to practise an easily recallable pattern. An example is the method of loci (or memory palace) technique. This technique traces back to antiquity as a way to recall vast tracts of information by assigning chunks of information to mentally visualisable objects placed within a sequence of physical spaces known as loci [13]. The technique does not directly support spatial memory on-screen; however, it does allow people deliberate access to their spatial memory abilities, which can be used to recall commands. The technique has been used in the Physical Loci system [79], where it has been shown that commands can be recalled and invoked more effectively than traditional menus, with the added ability to share the commands with others.

Of the spatial memory supporting strategies, use-wear lacks concrete results to suggest that a subtle application on menu interfaces would be effective. Implementations of use-wear have been evaluated at a small scale and shown to be favourable [43][47][1][66]. However, conclusive benefits have only been demonstrated where visibility is obscured in fisheye views [92]. There is also a known benefit when highlighting popular menu items to work with a bubble cursor (or area cursor) designed to capture popular menu items within a widened region around the cursor, as shown in bubbling menus [96]. So far, a subtle use-wear effect on a standard menu has not been validated. Use-wear is also the easiest of the spatial memory strategies to apply in practice. The effect does not require having to restructure an interface, add animations or make people learn additional information such as a mnemonic.

A mixed-methods approach has been used to quantitatively and qualitatively evaluate use-wear for use in practice through the Memory Menu. The Memory Menu study detailed in Memory Menu presents the design and evaluation of a subtle use-wear effect for large menus. The work attempts to support people's spatial memory while using an interface, and was motivated by the simplicity of the effect and its applicability in practice. A rigorous online evaluation with 100 participants, yielding 99 valid results, showed no statistically significant results in favour of the use-wear effect. I was therefore unable to reject the null hypothesis H0, and hypotheses H1 and H2, as detailed in Memory Menu hypotheses, were not supported. In summary, the use-wear effect did not measurably affect selection times or the memorability of items selected. Qualitative responses for menu difficulty suggest participants had a stronger preference for the use-wear effect after using the baseline menu first. Overall, participants' attraction to the use-wear effect was polarised.

The results are therefore inconclusive as to whether the Memory Menu provides an improvement over traditional non-use-wear menu interfaces. The results illustrate that spatial memory issues are not easily solvable by placing information on top of an interface. As shown at the beginning of this section, alternative ways of supporting spatial memory are generally more involved. In light of this, spatial memory support needs to be carefully designed into an interface from its conception, with consideration of its content, presentation and audience. From this point, I sought a broader line of enquiry.

Design and communication practices across domains

To counterpoint my research, I reflected upon the design and communication practices of practitioners inside and outside of interaction and HCI design. Practitioners outside interaction and HCI design also deal with engaging audiences and supporting their practices. The importance of this process was to learn from practitioners who deal with mediums other than digital devices.

In the previous sections I have looked at how latent border resources on-screen might be supported by directly targeting people's spatial memory. This presents a narrow perspective through a single medium, providing a narrow means to bridge the divide between physical and virtual practices through digital media. Interviews with professionals who collectively engage with a variety of media can provide a valuable source of broader guidance in supporting people's activities through digital media.

Interviews were conducted with a variety of researchers and practitioners from backgrounds based in modern and traditional art, design and communication practices, on and off digital media, with established and senior experience. The interview motivation, design and results are detailed in Interviews. The 8 interviewees provided their insights into supporting audiences' practices beyond digital devices by responding to the work conducted in Supporting on-screen spatial memory through use-wear, framed around the HCI language of supporting people's memory and reducing their cognitive load.

Open coding of the interviewees' responses reveals a standard pattern of dealing with the audience's context and showing empathy towards them when making design considerations through an iterative process. This stood in contrast to dealing with supporting memory and cognitive load, which were dealt with directly by 5 of 8 and 3 of 8 interviewees, respectively. Interviewees also provided constructive comments to improve the Memory Menu.

Beyond supporting spatial memory and reducing cognitive load as explored with the Memory Menu, interviewees revealed four unique approaches: supporting people's modalities; working within a relatable cultural context and history; avoiding didacticism; and moving away from cultural constraints and what is culturally acceptable. The interviewees' four unique approaches can be seen as contradictory on the topic of culture, as they both rely on culture and defy it. However, as a whole the approaches provide direction for accommodating people's activities with respect to their vast capabilities and environments, as explored in the next section.

Conclusion

In this chapter I have explored how digital media designers might support people's virtual and physical practices through mainstream screen-based devices. I began by investigating the support of spatial memory for on-screen interfaces through a subtle use-wear effect. This approach was initiated on the back of real-world practical experience where it was not ideal to re-design interfaces already used in practice. Experimental results did not find a benefit to the effect as applied in the Memory Menu study. This result encouraged a broader look at designers' practices to support people's activities.

Through a series of interviews a broad range of design and communication practitioners were asked to provide feedback on the work so far conducted on use-wear. They were asked about their own practices to support people's activities. The interviewees revealed ways to improve the work conducted on use-wear and also suggested accommodating people's vast capabilities and environments, while challenging and accommodating the culture which surrounds interaction. Based on the interviewees' advice, I put aside a narrow focus on spatial memory and re-framed my research to target new ways of supporting people's activities based outside of screen-based devices.

Designing for a wider range of interactions beyond the screen

The narrow scope of Supporting on-screen spatial memory through use-wear and consideration of Design and communication practices across domains encouraged a movement towards the kind of design practice engaged in by Ehn and Linde, as discussed in Reviewing new physical and virtual practices. In this design practice, digital and physical devices take on new material expressions, and people take on board new kinds of practices. This involves incorporating a wider range of interactions that come from engagement with the world outside of the screen-based device into people's activities conducted through digital media.

On-screen interface design reconceptualisation

To begin accommodating a wider range of people's lived experiences in my design practice, I applied the concerns of demassification and embodied interaction, as Ehn and Linde did for their design research project ATELIER, to translate a screen-based interface design to a more engaging physical environment, as discussed in Reviewing new physical and virtual practices. As a trial, I began by reconceptualising the design of a commonly used word processing application. My redesign, shown in Figure 3.1, imagined for a large screen surface, caters to the human ability to work outside of a traditional keyboard, pointer and screen size. My design illustrates how it might be possible to conduct the range of activities involved in the process of authoring a document without a screen-based device. The design includes inserting data into templates for fine-grained control of the document design.

The design process involved cutting the application into its constituent menus and rearranging them to have a neater procedural flow without the constraint of a fixed-size screen. The interface in Figure 3.1 was then divided so different practices occupied their own areas, shown in Figure 3.2. These areas were positioned in a way that relates to their place in the process of writing, from conception to export. As the writer proceeds through the process, they can occupy an area as necessary. The positioning of the areas relates to their relationship in creating a document e.g. the document elements on the left and the finished outcome on the right. Areas specific to formatting were placed as close as possible to where the associated formatting practices take place—on the document itself. Overall, the areas were ready at hand when needed, rather than being concealed. However, the activity is still concentrated on a single surface, when it could have a deeper connection with the surrounding environment.

In extending the design proposal shown in Figure 3.1 and Figure 3.2 to integrate it with the surrounding environment, I then imagined how some of the areas might manifest themselves on the bodies of people in a poster design shown in Figure 4.3, to act as extensions of people's hands and arms. This allows the practices to be carried with people when they need them, enabling the interface to feel more like a portable tool to use when needed rather than a fixed surface with options cluttered around a document. This idea has been explored and explained through the design and creation of a 3D cardboard poster, detailed ahead in Cardboard poster and interface.

To ground the design practice defined here and provide direction for it, I reflect on three relevant areas that have contributed to ways in which designers have accommodated interactions beyond screen-based devices:

- The Material Turn, defined by Robles and Wiberg (2010) as a movement in HCI towards bringing together physical and digital qualities [83]

- Ubiquitous and tangible computing, which presents research towards prolific devices that privilege engagement with physical materials and objects, over screens; these devices have been explored in the radical atoms research program [48] and are emerging in the mainstream network-connected devices of the IoT (or enchanted objects [85])

- Whole-body interaction research [26], which leverages greater use of human movement and senses for interaction through computers, and presents an alternative to dependence on prolific devices

The Material Turn

The Material Turn, as defined by Robles and Wiberg (2010), presents how physical and digital qualities can come together for the benefit of digital media interaction design [83]. The Material Turn can be seen as a concentrated effort to produce work in a similar way to what Ehn and Linde pioneered in 2004 through their design research project ATELIER, as introduced in Reviewing new physical and virtual practices. The Material Turn reveals the potential in blending traditionally non-digital material qualities and physical experiences into digital artefacts.

The Material Turn is distinct from other design directions in HCI that remediate the connection between the physical and digital, in that it focuses on the materiality of interactions. This focus on materiality indicates a desire to reconcile the divide between the physical and the digital through new materials and new relationships between materials [83, p.137]. This can be contrasted with standard design approaches that build upon graphical user interfaces (GUI), which rely on metaphorical relations between physical and digital such as files and documents, or tangible computing, which presents physical analogues to digital information [83, p.137]. Materiality presents a broader view of what is possible that extends outside the constraints of the physical affordances of established digital media.

Within the discourse of the Material Turn, authors have encouraged a move away from an allegiance to kinds of materials (e.g. digital devices or non-computational media), concentrating instead on the experiences and interactions afforded by any material:

- Material experiences: Giaccardi and Karana (2015) [33] define a framework for material experiences in HCI which has grown out of the Material Turn. Material experiences consider the experiences people have with and through materials, rather than focusing purely on the physical qualities of materials [33]. The authors look at: people's interpretations; affective and sensorial experiences; and the performative abilities afforded by various media.

- Materiality of interaction: Wiberg (2016) argues for a focus on the materiality of interaction and not the material status of the computer [102]. Material status, in this sense, is a bias that privileges one kind of digital device over another or treats non-computational materials as more authentic or real. A focus on the materiality of interaction instead privileges interaction itself: an understanding of emerging experiences as part of a larger history of interaction.

The Material Turn in HCI, as described, shines a light on the virtual and physical practices enabled by digital media that move beyond screen-based devices. Discourse in the area provides a means to critique the contemporary directions of ubiquitous and tangible computing. I now explore how well this computing bridges the divide in terms of the new kinds of experiences afforded and whether material status has been overcome.

Ubiquitous and tangible computing

Ubiquitous computing was proposed by Mark Weiser in 1991 [101] as a future to be achieved where computing is invisible. Ubiquitous computing provides us with a perspective from which to imagine computing that is as ubiquitous as everyday objects and thereby more readily available to the different kinds of situations people experience. The proposal for ubiquitous computing best serves as a device to provoke new design concepts, rather than being an actual kind of digital media. It is argued that the future put forward by ubiquitous computing is proximate [9]; in other words, it is always just out of reach. In one sense, ubiquitous computing has arrived through access to all forms of screens today, from watches to televisions connected to the cloud. However, there is always room to make interaction through computers more ubiquitous; for example, we are yet to create advanced artificial agents to converse with in order to perform tasks.

I specifically explore tangible computing, which provides a focus for designers to explore new physical and virtual practices beyond screen-based devices. Tangible computing has been explored in places such as the radical atoms research program [48]. For example, Physical Telepresence [63] by Leithinger et al. (2014), shown in Figure 3.3, shows how communication can take place over network-connected dynamic surfaces that people can sculpt. Physical Telepresence allows people to share physical forms across long distances with greater sensorial qualities than alternatives such as videos or photographs.

Screen-based devices are a locus for digital media interactions that run counter to people's full range of sensorimotor abilities—or what humans can achieve with their senses and physical abilities. People can carry screen-based devices with them practically anywhere, but these do not encourage direct engagement with the world that surrounds them. This matters because surrounding contexts give the representations on screen meaning—such as: a conversation between people that can involve locations, other people and objects; or a virtual model of an object set made for physical interaction.

With respect to the Material Turn discussed, I explore how tangible computing extends digital media into new physical and virtual practices, but also highlight a continued attachment to the material status of digital devices. I explore how tangible computing:

- Enables Physical objects with virtual overlay, rather than translating physical objects to screens

- Enables Manipulable physical and virtual surfaces and objects, to relieve the limitations of using physical objects and screen-based devices

- Enables Enhanced manual processes by combining virtual tools with physical objects

- Enables the use of Ambient perception, instead of direct attention to devices

- Relies on the material status of common physical objects, resulting in people's Dependence on many devices, which can only be managed by screen-based devices

Following the review, I propose ways of Relieving dependence on screens.

Physical objects with virtual overlay

Tangible computing allows designers to present information from the physical world through physical objects with virtual overlays, rather than translating physical objects to be presented completely on-screen. Urp [98] by Underkoffler and Ishii (1999), shown in Figure 3.4 and built on the I/O Bulb and Luminous Room system by Underkoffler and Ishii (1998) [97], demonstrates how people can visualise the shadows and reflections cast by built structures and the airflows travelling around them. Physical tools can be used to measure distances, apply materials like glass and direct wind effects. Information that might be lost in a 2D representation is retained.

However, in Urp the physical objects used are not mutable like virtual objects on-screen. There is no possibility to modify the models or rearrange them into new views, for instance, by slicing them. I explore ahead how tangible computing presents physical materials which can be manipulated physically and virtually.

Manipulable physical and virtual surfaces and objects

Tangible computing allows for physically and virtually manipulating objects and surfaces. This extends the ability of physical models, as presented in Physical objects with virtual overlay, so they can be treated in a similar way to virtual objects on-screen while also accommodating different physical uses. This interaction extends across large surfaces, as well as objects at room scale and hand scale:

- Tangible CityScape [94] by Tang et al. (2013) is a room-scale surface that uses dynamic actuators to present physical cityscapes and associated data such as traffic moving through a city.

- Physical Telepresence [63] by Leithinger et al. (2014) (using the inForm system [30] by Follmer et al. (2013)), shown in Figure 3.3, allows people to see and move objects from a distance using a projected surface which is actuated and able to sense depressions.

- Proxemic Transitions [37] by Grønbæk et al. (2017) shows how furniture with projected information can be adapted to suit a person who is standing or sitting.

- ChainFORM [78] by Nakagaki et al. (2016) shows how a handheld modular actuated hardware device can transform into different tools for drawing and displaying information.

- inSide [95] by Tang et al. (2014), shown in Figure 3.5, shows how layers and structural qualities of an object can be revealed through a virtual overlay. Hand gestures can be used to slice open objects or make them transparent. Touches can depress surfaces visually.

These works illustrate how different kinds of objects can be manipulated physically and virtually, rather than purely physically as a model or purely virtually as an object on-screen.

Enhanced manual processes

Being able to modify objects physically and virtually, as explored in the previous section, can enhance manual processes. Virtual tools that allow operations such as instantly copying and moving objects or generating accurate geometry can be applied to physical operations. In addition, physical actions can be communicated across digital networks.

- For sculpting, Perfect Red [48, pp.47–48], a speculative design by Bonanni et al. (2012), shown in Figure 3.6, presents a clay-like material that allows sculpting by hand and with hand tools. The sculpting is enhanced with features found in computer-aided design (CAD). Like creating a form with CAD, the clay can be snapped to primary geometries (e.g. a circle or rectangle) or cut perfectly by drawing a line. In addition, forms can be cloned and fused perfectly.

- For communication and remote activities, Physical Telepresence [63] by Leithinger et al. (2014), shown in Figure 3.3, allows people to see and move objects from a distance using a projected surface which is actuated and able to sense depressions.

Ambient perception

The tangible computing explored so far comes with the benefit of alleviating direct attention, by utilising people's ambient perception. Screen-based devices traditionally require direct focus and are otherwise not intended to be easily visible. Tangible computing, which occupies a 3D presence, is visible peripherally and from afar, provided it is large enough and contrasts with surrounding objects.

The sculpting and movement of 3D forms are given greater expression in: surfaces like those of Urp, explored in Physical objects with virtual overlay; the works explored in Manipulable physical and virtual surfaces and objects; and materials like Perfect Red, explored in the previous section. Greater expression comes from the movement of bodies to perform direct physical actions, rather than the movement of hands and arms across a trackpad, keyboard or screen, in relation to flat representations. The greater expressions serve as rich latent border resources (see Background) in the form of prominent movements which can be mentally attached in order to form making and collaborative activities.

Below I explore a few ways that designers have deliberately leveraged people's ambient perception in a discreet fashion to relieve the need for direct attention in order to use devices:

- The Good Night Lamp by Alexandra Deschamps-Sonsino (2005) [21] is a small, house-shaped lamp that serves to indicate presence across a distance by acting like a shared light switch. Your own lamp can be switched on in order to switch on a lamp far away, sending a signal that acts as a less obtrusive substitute for sending a text message. The signal can be observed in a way that is akin to being in the same physical space as someone else.

- In a more intimate fashion than the Good Night Lamp, Pillow Talk [76] by Joanna Montgomery (2010) (first canvassed as a concept in Interactive Pillows [73] by Christina von Dorrien et al. (2005)), shown in Figure 3.7, shows how pillows can be used in long-distance relationships to signal presence by glowing when a partner rests on their pillow. This conventional activity is fashioned by tangible computing into something more powerful and simpler than communication through screen-based alternatives.

Dependence on many devices

So far, I have explored how tangible computing brings many benefits by bringing together physical and virtual practices. However, an issue which has been glossed over in the development of tangible computing is that interactions rest upon a range of physical devices. Tangible computing rests upon the material status (see Materiality of interaction in The Material Turn) of common household or office objects and furniture. The issue is that activities possible through applications on screen-based devices are dispersed across a range of individual devices that may or may not cooperate.

In practice, dependence on the presence of many devices is not ideal in that it requires people to furnish their homes and offices with the right kind of devices and to maintain them, rather than relying on a few powerful screen-based devices. Nor have these tangible devices presented true freedom from screen-based devices, because they need to be centrally managed by a screen-based device. This is evidenced in the mainstream adoption of tangible computing through the IoT (or enchanted objects [85]). IoT is not as complex as the tangible computing covered here, yet it presents the closest mainstream generation of computing beyond screen-based devices. Examples of the IoT are network-connected lights, toys, home appliances and blinds or doors, which can perform automated actions and communicate their status. An IoT device today may not be a fully actuated and sensing surface, as shown in Manipulable physical and virtual surfaces and objects, because such surfaces remain high in cost and at proof-of-concept status. However, at a fundamental level the IoT and tangible computing allow information to be captured and shared between physical devices to enable new kinds of interactions with digital media. The IoT is beginning to realise some of the vision of tangible computing by bringing computational ability to objects in our environment.

Despite tangible computing being an apparent antithesis to screen-based devices, IoT devices are dependent on screen-based devices to work. The earliest developments in tangible computing also hinted at this dependence. In 2000, the HandSCAPE [62] digital tape measure, shown in Figure 3.8, showed how the distance and orientation of measurements could be gathered by a network-connected tape measure to generate a virtual 3D solid of the object being measured. The measurements were displayed on a screen because this was the most economical format for doing so, and it remains so by today's standards using a smartphone.

The continued dependence of tangible computing and the IoT on screen-based devices is attributable to unrivalled convenience through providing:

- Dynamic controls, which are readily available through screens, as opposed to embedding a standard interface in every tangible computing device, for connectivity and maintenance

- Sensor information, which can be captured and shared from screen-based devices like smartphones and smartwatches; this allows inference of a person's presence or absence without additional sensing devices in tangible computing

- Ubiquity, in that screen-based devices are common and usually ready at hand with many functions, whereas a tangible computing device takes a specific role and may remain in a particular place

By recognising these conveniences, designers can conceive new methods to apply the same effects without depending on screen-based devices.

Relieving dependence on screens

It is possible to relieve dependence on screens by replacing the conveniences of screen-based devices that support dependence on many devices. As described, conveniences that need to be factored in are: dynamic controls; sensor information; and ubiquity. Technology for Augmented reality see-through devices, explored ahead, shows promise in achieving this by allowing the portable superimposition of visuals through wearable glasses.

Dynamic controls: augmented reality devices can extend the presentation of dynamic controls outside of screen-based devices and the fixed areas provided by projectors. The tangible computing works shown in Physical objects with virtual overlay and Manipulable physical and virtual surfaces and objects rely on projectors to overlay dynamic information and controls on any surface within a fixed area. Augmented reality could extend this to any surface by providing the necessary ubiquitous display.

Sensor information: augmented reality wearables can also carry the same supportive sensing technologies found in screen-based devices.

Ubiquity: it should be noted that augmented reality, as proposed, only allows the management of tangible computing and the IoT to be ubiquitous. This does not target the heart of the matter, which lies in the material status of tangible computing and the IoT.

Despite their advantages, it can be argued that tangible computing and the IoT have never been designed to function as independently as screen-based devices do. They have been designed in a world where screen-based devices are already able to simplify the networking and management of devices. For this reason, I now investigate how interactions outside of screen-based devices can be as ubiquitous as interactions on-screen. I do so by exploring the specific area of whole-body interaction, which allows people's own bodies and surrounding environments to act as the primary surfaces for people's activities through digital devices.

Whole-body interaction

Whole-body interaction [26] is a specific subset of the ubiquitous and tangible computing explored already. Whole-body interaction involves both input from and feedback through: physical motion; the normal five senses plus the senses of balance and proprioception; cognitive state; emotional state; and social context [26, p.1]. The central tenet of whole-body interaction is to utilise a greater range of human abilities for the use of digital media.